There is a massive difference between a model that works in a sandbox and one that survives production.

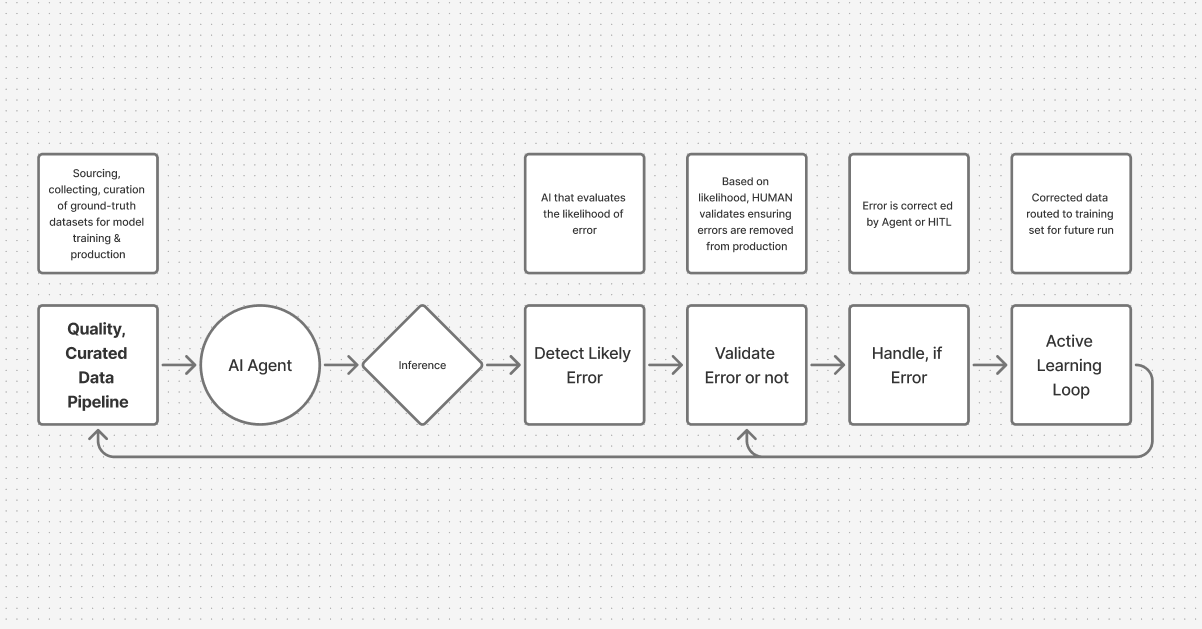

An example of CloudFactory's AI + HITL model

We see it constantly: a team deploys a new computer vision model to automate inspections or claims processing. On paper, the metrics look incredible. But once the model faces the chaotic reality of the physical world—mud on a license plate, a shadow that looks like a dent, or a glare obscuring a serial number—the cracks begin to show.

Suddenly, an impressive 85% accuracy rate stops feeling like a technological breakthrough and starts feeling like a liability.

The remaining 15% isn't just a gap in performance; it is a "Confidence Gap" that creates operational chaos. When an "All-AI" system fails without a safety net, it generates false positives (flagging damage that isn't there) or false negatives (missing critical issues entirely).
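To make the two failure modes concrete, here is a minimal Python sketch that counts them for binary "damage / no damage" flags. The function name `count_errors` and the six sample inspections are hypothetical, invented purely for illustration.

```python
from typing import List, Tuple

def count_errors(predicted: List[bool], actual: List[bool]) -> Tuple[int, int]:
    """Count false positives and false negatives for binary damage flags.

    predicted[i] is True when the model flagged damage on item i;
    actual[i] is True when damage was really present.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    # False positive: damage flagged that isn't there.
    false_positives = sum(1 for p, a in zip(predicted, actual) if p and not a)
    # False negative: real damage the model missed.
    false_negatives = sum(1 for p, a in zip(predicted, actual) if a and not p)
    return false_positives, false_negatives

# Hypothetical example: six inspections.
model_flags = [True, False, True, False, True, False]
ground_truth = [True, False, False, False, True, True]
fp, fn = count_errors(model_flags, ground_truth)
print(fp, fn)  # 1 1
```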

Operational leaders are slowly waking up to a hard truth: AI models are probabilistic, not deterministic. They don’t know things; they calculate the likelihood of them. To build a system that is robust enough for the real world, we need to stop treating AI as a replacement for human judgment and start treating it as an engine that requires an orchestration layer.

We call this orchestration Inference Oversight.

The Anatomy of the Confidence Gap

Why do "All-AI" solutions struggle in production? It usually comes down to data drift and edge cases.

In a controlled environment, data is clean. In the real world, photos of equipment might be blurry, taken at odd angles, or obscured by low light. An unmonitored AI model will force an inference on this bad data, often presenting a guess as a fact.
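A short illustration of that behavior, assuming a classifier that turns raw scores (logits) into probabilities with a softmax, as many image classifiers do. The labels and logit values below are made up; the point is that taking the top class always yields an answer, even when the scores are nearly tied.

```python
import math
from typing import Dict, Tuple

def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    # Subtract the largest logit first for numerical stability.
    m = max(logits.values())
    exps = {label: math.exp(v - m) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def naive_predict(logits: Dict[str, float]) -> Tuple[str, float]:
    # An unmonitored pipeline simply takes the top class, however weak it is.
    probs = softmax(logits)
    label = max(probs, key=probs.get)
    return label, probs[label]

# Hypothetical logits for a clear photo and a blurry, low-light photo.
clear = {"dent": 4.0, "no_damage": 0.5, "scratch": 0.2}
blurry = {"dent": 0.4, "no_damage": 0.3, "scratch": 0.2}

print(naive_predict(clear))
print(naive_predict(blurry))
```

Both calls return "dent", but only the clear photo comes with a confidence above 0.9; the blurry one sits below 0.4. A pipeline that ignores the score treats the two answers as equally reliable.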

Relying solely on the model means accepting these errors as the cost of doing business. But in high-stakes industries, the cost of errors—missed damage charges, safety hazards, or customer disputes—far outweighs the savings from automation.

The fix, then, isn't a better model; it is a better process: a workflow that acknowledges the model's limitations and hands the decision off to a person whenever the model's confidence drops below a set threshold.

The Solution: Orchestrating the "Human-in-the-Loop"

In a resilient data pipeline, the goal isn't to remove the human but to elevate them. The way to do that is to insert a logic layer between the AI's inference and the final decision.

Here is how a "Safety Net" workflow operates in a production environment:

1. The Confidence Filter (Detect Likely Error)

The process begins with the AI Agent performing an Inference. However, instead of immediately acting on that output, the system runs a secondary check: Detect Likely Error.

This is the gatekeeper. The system compares the confidence score attached to the AI's prediction against a set threshold. If the model indicates high certainty that an excavator has a dent, the system automates the next step. But if the confidence score dips (say, to 65% because of a glare on the lens), the system flags it.
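A minimal sketch of the gatekeeper in Python, assuming the model returns a label with a confidence score between 0 and 1. The `Inference` record, the `detect_likely_error` and `route` helpers, and the 0.80 threshold are all hypothetical; in a real deployment the threshold would be tuned on held-out data for each use case.

```python
from dataclasses import dataclass

@dataclass
class Inference:
    item_id: str
    label: str
    confidence: float  # model's score for `label`, between 0 and 1

# Hypothetical cut-off; tune it per use case on held-out data.
CONFIDENCE_THRESHOLD = 0.80

def detect_likely_error(inference: Inference, threshold: float = CONFIDENCE_THRESHOLD) -> bool:
    """Treat any prediction below the threshold as a likely error."""
    return inference.confidence < threshold

def route(inference: Inference) -> str:
    """Send confident predictions onward automatically; flag the rest for review."""
    return "human_review" if detect_likely_error(inference) else "auto"

# Usage with the examples from the text:
print(route(Inference("EX-101", "dent", 0.97)))  # auto
print(route(Inference("EX-102", "dent", 0.65)))  # human_review (glare on the lens)
```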

2. The Human Validator (Validate Error or Not)

This is where our AI + HITL orchestration shines. Low-confidence data isn't discarded; it is routed to a human subject matter expert (SME) or a Human-in-the-Loop (HITL) agent.

The human doesn't review every single data point; that would defeat the purpose of AI. They review only the ambiguous cases, checking whether the model's prediction holds up or is a misjudgment or a hallucination. This ensures that the data leaving the pipeline meets production-grade accuracy, regardless of whether it came from the AI or the human.
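The hand-off can be sketched as follows, reusing the same kind of hypothetical `Inference` record. The `ask_human` callback stands in for whatever review tool the SME actually uses; it is an assumption, not a real API. Only items below the threshold ever reach it, and every decision records whether it came from the model or a person.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Inference:
    item_id: str
    label: str
    confidence: float

@dataclass
class Decision:
    item_id: str
    label: str
    source: str              # "model" or "human"
    corrected: bool = False  # True when the reviewer changed the model's label

def resolve(
    inferences: List[Inference],
    threshold: float,
    ask_human: Callable[[Inference], str],
) -> List[Decision]:
    decisions: List[Decision] = []
    for inf in inferences:
        if inf.confidence >= threshold:
            # Confident prediction: accept it without human review.
            decisions.append(Decision(inf.item_id, inf.label, "model"))
        else:
            # Ambiguous prediction: the reviewer supplies the final label.
            human_label = ask_human(inf)
            decisions.append(
                Decision(inf.item_id, human_label, "human", corrected=human_label != inf.label)
            )
    return decisions

# Hypothetical reviewer: decides the "dent" on EX-102 was really glare.
reviewer_answers = {"EX-102": "no_damage"}
batch = [Inference("EX-101", "dent", 0.97), Inference("EX-102", "dent", 0.65)]
for d in resolve(batch, 0.80, lambda inf: reviewer_answers[inf.item_id]):
    print(d)
```

Because the reviewer is only a callback, the same routing logic works whether the "human" is an in-house SME, a managed workforce, or a test stub.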

3. The Active Learning Loop (Turning Failure into Asset)

The true magic happens after the correction. In a standard workflow, a human fixes an error and moves on. In an orchestrated workflow, that correction is captured.

As shown in our framework, the "Handle, if Error" step feeds directly into an Active Learning Loop. The corrected data, now labeled with ground-truth accuracy, is routed back to the training set.
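One way to capture those corrections, sketched in Python under stated assumptions: human-validated labels accumulate in a buffer, and once a hypothetical batch size is reached the buffer signals that retraining is due. The `ActiveLearningBuffer` class and the batch size are illustrative, and the retraining job itself is out of scope here.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class ActiveLearningBuffer:
    retrain_after: int = 500  # hypothetical number of new labels that triggers a retrain
    new_examples: List[Tuple[str, str]] = field(default_factory=list)  # (item_id, label)

    def capture(self, item_id: str, human_label: str) -> bool:
        """Store a human-validated label; return True when a retrain is due."""
        self.new_examples.append((item_id, human_label))
        return len(self.new_examples) >= self.retrain_after

    def drain(self) -> List[Tuple[str, str]]:
        """Hand the accumulated examples to the training job and reset the buffer."""
        batch, self.new_examples = self.new_examples, []
        return batch

# Usage with a tiny batch size so the trigger is visible.
buf = ActiveLearningBuffer(retrain_after=2)
buf.capture("EX-102", "no_damage")       # False: not enough new labels yet
if buf.capture("EX-207", "scratch"):     # True: batch size reached
    training_batch = buf.drain()
    print(training_batch)
```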

This creates a flywheel effect. Every time the AI is unsure and a human corrects it, the model gets smarter with its next round of training. The edge cases that confused the model today become the training examples that improve it tomorrow.

Moving From "Black Box" to Transparent Pipeline

The fear of AI usually stems from its "Black Box" nature—inputs go in, and magic comes out. But for enterprise leaders, magic is unscalable.

An orchestrated pipeline that includes sourcing, curation, error detection, and human validation turns the "Black Box" into a glass house. Leaders gain visibility into where the model is struggling, along with a mechanism to fix it in real time.

This approach allows businesses to deploy AI aggressively without reckless risk, securing the speed of automation for the easier majority of tasks (say, 80%) and the certainty of human expertise for the remaining, more complex 20%.
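The 80/20 split is not a constant; it falls out of where the confidence threshold sits. A small sketch, using ten made-up confidence scores and a hypothetical `automation_split` helper, shows how to measure the share of items a given threshold automates versus routes to people.

```python
from typing import List, Tuple

def automation_split(confidences: List[float], threshold: float) -> Tuple[float, float]:
    """Return (share automated, share sent to human review) for a threshold."""
    if not confidences:
        raise ValueError("need at least one confidence score")
    automated = sum(1 for c in confidences if c >= threshold)
    n = len(confidences)
    return automated / n, (n - automated) / n

# Hypothetical scores from one batch of inspections.
scores = [0.99, 0.97, 0.95, 0.93, 0.91, 0.90, 0.88, 0.85, 0.70, 0.62]
print(automation_split(scores, 0.80))  # (0.8, 0.2)
print(automation_split(scores, 0.90))  # (0.6, 0.4)
```

Raising the threshold sends more work to reviewers but lets fewer low-confidence predictions through unchecked; the right balance depends on what an error costs in each use case.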

Case Study: Solving the "Confidence Gap" in Heavy Equipment

Theory is useful, but results are what matter. We recently deployed this exact orchestration framework for a major heavy equipment rental organization.

They faced a massive challenge: accurately assessing damage on returned machinery. "All-AI" solutions were missing subtle damage or flagging dirt as scratches, leading to revenue leakage and customer friction.

With a pipeline that routes low-confidence damage assessments to human reviewers and feeds those corrections back into the model, the process wasn't just automated; it became more accurate than human review alone.

You can read the full Client Story here.

Scaling with Certainty

It is time to stop gambling on probabilities and start building certainty. For organizations looking to build a data pipeline that balances AI speed with human precision, we are here to help deploy models with confidence.

Contact our team to see how we can custom-architect a safety net for your production environment.
