Within 24 hours of launch, security researchers discovered critical vulnerabilities. The problem isn't Atlas—it's the entire category of autonomous AI agents.

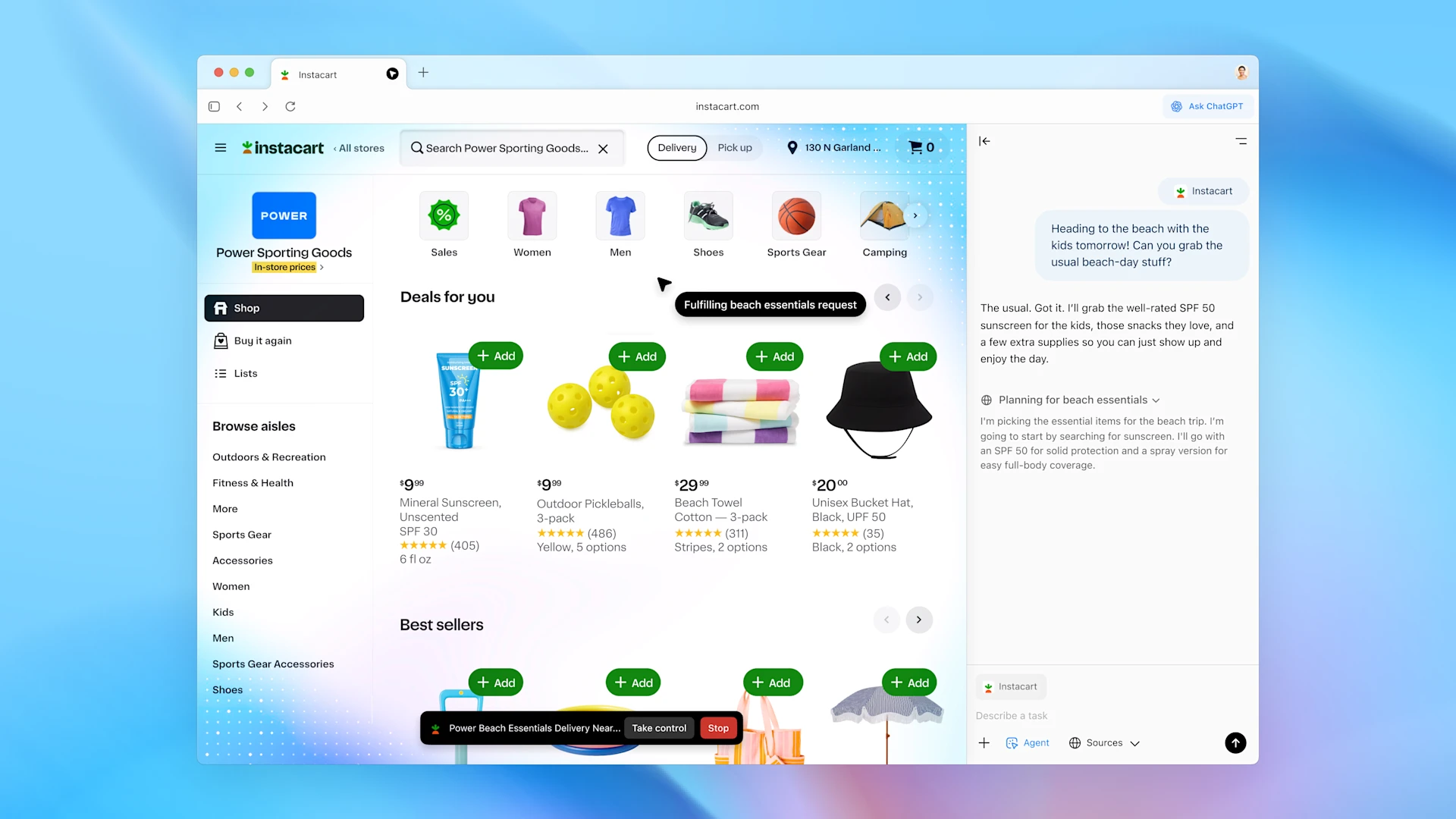

OpenAI's ChatGPT Atlas browser launched Tuesday with ambitious promises: an AI-powered browser that doesn't just search the web but actively completes tasks on your behalf—booking hotels, researching products, filling forms, and navigating sites while you focus on higher-value work. By Wednesday morning, cybersecurity experts were demonstrating successful prompt injection attacks that could turn the helpful assistant into a data extraction tool.

The rapid discovery of vulnerabilities isn't a failure of OpenAI's engineering—it's a symptom of a deeper architectural challenge facing every company racing to deploy AI agents. As MIT Professor Srini Devadas warned: "The challenge is that if you want the AI assistant to be useful, you need to give it access to your data and your privileges, and if attackers can trick the AI assistant, it is as if you were tricked."

For enterprises already struggling with 95% AI pilot failure rates, Atlas reveals why deployment trust remains the biggest barrier to AI value—and why a fundamentally different approach is required.

What Makes Atlas Different (And Risky)

Unlike traditional browsers with AI sidebars, Atlas is built with ChatGPT at its core. It offers two capabilities that fundamentally change the browser's role:

Browser Memories: ChatGPT remembers which sites you visit, how you interact with them, and context from past browsing sessions—creating a comprehensive behavioral profile that makes responses increasingly personalized.

Agent Mode: The AI can autonomously open tabs, read content, fill forms, and navigate sites on your behalf. It's not just answering questions—it's taking actions with your credentials and access privileges.

This represents a massive leap in AI capability. It also creates what security researcher Simon Willison called "insurmountably high" security and privacy risks.

The Security Vulnerabilities Discovered Within 24 Hours

Cybersecurity researchers wasted no time testing Atlas's defenses. By Wednesday, multiple teams had demonstrated successful exploits:

Prompt Injection Attacks: Malicious instructions embedded in websites can manipulate the AI agent's behavior without users realizing it. One researcher showed Atlas changing browser settings from dark to light mode when reading a doctored Google Doc. Another demonstrated "clipboard injection," where hidden code overwrites the user's clipboard with malicious links.

Data Exfiltration Risks: As UC London professor George Chalhoub explained: "It collapses the boundary between data and instructions. It could turn an AI agent from a helpful tool into a potential attack vector—extracting all your emails, stealing personal data from work, logging into your Facebook account and stealing messages, or extracting passwords."

The Fundamental Problem: These aren't bugs to be patched—they're inherent to how large language models process natural language. OpenAI's Chief Information Security Officer Dane Stuckey acknowledged this: "Prompt injection remains a frontier, unsolved security problem."

Even Brave's security team, which developed its own AI features, concluded after testing: "What we've found confirms our initial concerns: indirect prompt injection is not an isolated issue, but a systemic challenge facing the entire category of AI-powered browsers."

Why Atlas Isn't Enterprise-Ready (And OpenAI Knows It)

OpenAI's own enterprise documentation reveals telling limitations:

- Not covered by SOC 2 or ISO certifications

- Cannot be used with regulated data (PHI, payment card data)

- No SIEM integration or Compliance API logs

- Limited audit trail capabilities

- No region-specific data residency

- Enterprise access is OFF by default

OpenAI explicitly warns: "Do not use Atlas with regulated, confidential, or production data." For Business users, it's positioned as a beta for "low-risk data evaluation" only.

This isn't cautious messaging—it's an acknowledgment that autonomous AI agents operating in production environments with sensitive data represent unacceptable risk.

The Trust Gap That's Blocking AI Value

Atlas's vulnerabilities illuminate a broader challenge plaguing enterprise AI adoption: the gap between AI's potential value and organizations' ability to trust it with business-critical operations.

Companies face a paradox:

To be useful, AI agents need access to your systems, data, and privileges—the same access levels you'd give a trusted employee.

To be secure, AI systems require isolation, sandboxing, and restricted access—limitations that prevent them from delivering transformative value.

This explains why businesses continue to struggle with AI deployment despite massive investments:

- They want AI-powered automation for efficiency gains

- But they can't trust AI enough to deploy it on critical processes

- So AI remains confined to low-risk pilots that never deliver ROI

- Which reinforces skepticism about AI's business value

Sound familiar? This is exactly the dynamic behind MIT's finding that 95% of AI pilots fail to deliver measurable returns.

What Atlas Teaches Us About Enterprise AI Strategy

The Atlas launch—and rapid security discoveries—offers three critical lessons for enterprises:

1. Autonomy and Trust Are Inversely Proportional

The more autonomous the AI agent, the less you can trust it. Atlas's agent mode represents maximum autonomy—and therefore maximum risk. For business-critical operations where mistakes have severe consequences, fully autonomous AI is premature.

2. "Trust Me" Isn't a Security Strategy

Stuckey's stated goal is for users to "trust ChatGPT agent to use your browser, the same way you'd trust your most competent, trustworthy, and security-aware colleague or friend." But trust between humans is built on accountability, judgment, and the ability to explain decisions—capabilities LLMs fundamentally lack.

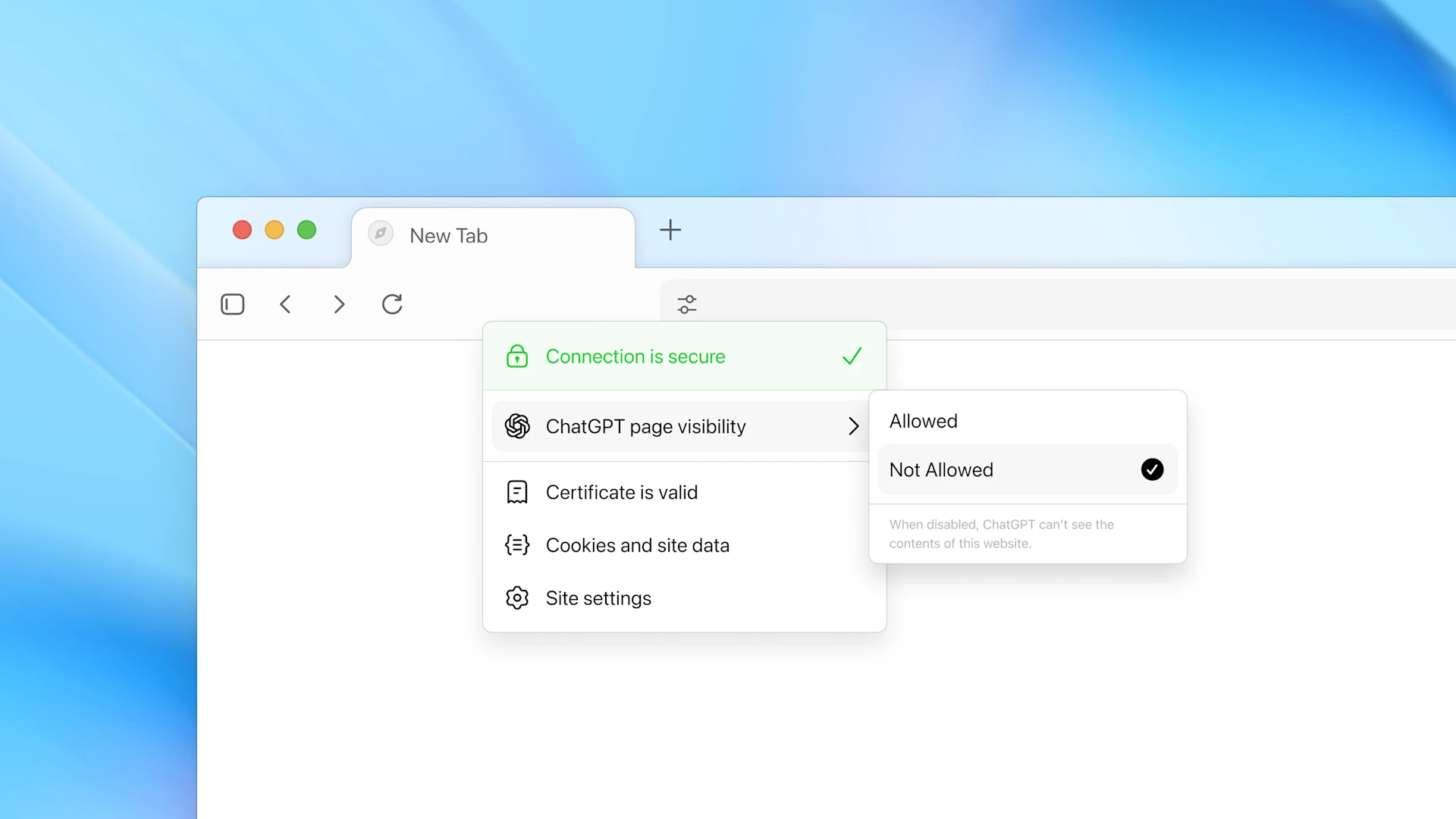

3. Human Oversight Isn't Optional—It's the Architecture

OpenAI's own guidance reveals the required approach: "Users should weigh tradeoffs when deciding what information to provide to the agent, as well as take steps to minimize exposure such as... monitoring agent's activities."

The admission is buried in documentation, but it's critical: AI agents require active human supervision to be safe. The question isn't whether to include humans—it's how to architect that oversight effectively.

The Alternative: Human-in-the-Loop AI by Design

While Atlas represents one approach to AI agents—maximum autonomy with post-hoc safeguards—enterprises deploying AI in production are discovering a more reliable architecture: human-in-the-loop (HITL) systems that combine AI capability with human judgment from the start.

Rather than building fully autonomous agents that might make catastrophic errors, HITL systems orchestrate AI and human expertise in workflows designed for verified outcomes:

For data quality: AI can process and label massive datasets quickly, but human experts validate accuracy on uncertain cases—creating training data you can trust and models that improve continuously.

For inference evaluation: AI can analyze model outputs at scale, but human validators catch edge cases, biases, and errors that would erode trust if deployed unchecked.

For business process automation: AI can handle routine tasks efficiently, but human oversight prevents the kinds of errors that Atlas's prompt injection vulnerabilities could cause—extracting sensitive data, accessing wrong accounts, or taking unauthorized actions.

This isn't AI with humans as a fallback—it's architecting AI systems where human expertise is integrated as a core component, providing the confidence gap that makes production deployment possible.

What Enterprises Should Do Now

As AI agent capabilities advance rapidly, CIOs and technology leaders face critical decisions about adoption strategy:

Don't Wait for Perfect AI: The security challenges Atlas revealed aren't going away soon. Prompt injection is described by experts as "unsolved" and "insurmountably high." Waiting for risk-free autonomous agents means indefinitely delaying AI value.

Architect for Trust from Day One: Build AI systems with verification and oversight as core components, not afterthoughts. This means:

- Defining clear accuracy requirements before deployment

- Establishing human validation workflows for uncertain outputs

- Implementing continuous monitoring and feedback loops

- Creating audit trails for model decisions

Start with High-Impact, Data-Rich Use Cases: Following the lessons from the MIT study, identify processes where:

- AI provides measurable advantages over existing solutions

- You have sufficient quality data to train reliable models

- Errors can be caught and corrected before business impact

- Success can be objectively measured

Prioritize Verified Outcomes Over Autonomy: For business-critical operations, the goal isn't maximum autonomy—it's trusted results at scale. Systems that deliver 99.9% accuracy with human oversight often provide more business value than 95% accurate fully autonomous systems that no one trusts enough to deploy.

The Path Forward: Trust-First AI Architecture

Atlas represents an important milestone in AI capability—browsers that understand context and complete tasks. But the security vulnerabilities discovered within 24 hours of launch confirm what enterprises implementing production AI already knew: autonomous agents without human oversight aren't ready for business-critical operations.

The companies succeeding with AI aren't the ones deploying fully autonomous agents and hoping for the best. They're the ones building trust-first architectures that combine AI's scale and speed with human expertise for accuracy and judgment.

CloudFactory's AI platform demonstrates this approach—orchestrating AI capabilities with human expertise in workflows designed to deliver verified outcomes. By architecting human oversight as a core system component rather than an emergency failsafe, enterprises can deploy AI on critical processes with the confidence needed to drive measurable business value.

As the race for AI agent capabilities intensifies, the winners won't be the companies with the most autonomous AI—they'll be the ones who figured out how to make AI trustworthy enough to deploy at scale.

Ready to build AI systems your business can actually trust? Contact our team to discuss how human-in-the-loop AI architecture can help your organization move from pilots to production with confidence.

.png?width=1563&height=1563&name=Untitled%20design%20(38).png)

.png?width=1563&height=1563&name=Untitled%20design%20(30).png)

.png?width=1563&height=1563&name=Untitled%20design%20(33).png)

.png?width=1563&height=1563&name=Untitled%20design%20(34).png)