The data annotation tools you use to enrich your data for training and deploying machine learning models can determine success or failure for your AI project. Your tools play a critical role in whether you create a high-quality, high-performing model that powers a disruptive artificial intelligence solution or solves a painful, expensive problem, or whether you end up investing time and resources in a failed experiment.

Choosing your tool may not be a fast or easy decision. The data annotation tool ecosystem is changing quickly as more providers offer options for an increasingly diverse array of use cases. Advancements in AI data collection and tooling occur rapidly, sometimes by the week. These changes bring improvements to existing tools and new tools for emerging use cases, including those in semantic segmentation and other specialized areas.

The challenge is thinking strategically about your tooling needs now and into the future. New tools, more advanced features, and changes in options such as storage and security make your tooling choices more complex. Additionally, an increasingly competitive marketplace makes it challenging to discern hype from real value when selecting the best data annotation services and tools for your ML models.

We’ve called this an evolving guide because we will update it regularly to reflect changes in the data annotation tool ecosystem and advancements in annotation platforms. Bookmark this page and check back for new information.

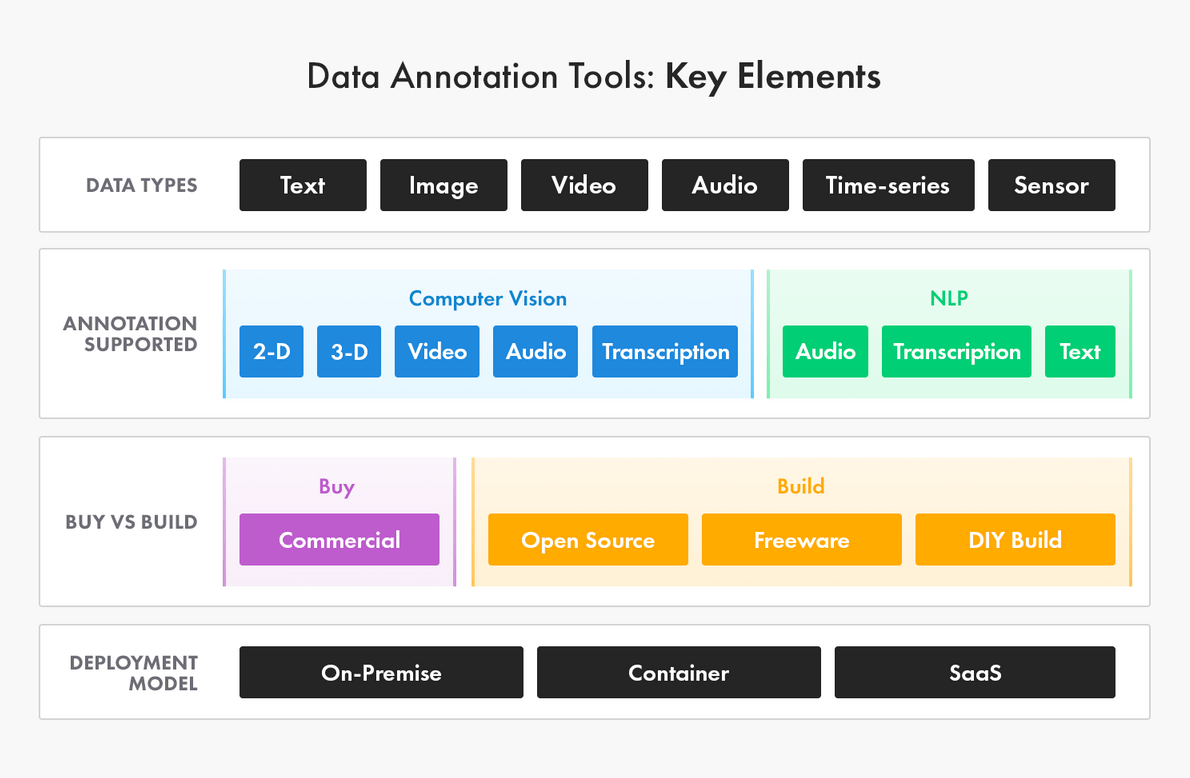

In this guide, we’ll cover data annotation tools for computer vision and NLP (natural language processing) for supervised learning.

First, we’ll explain the idea of data annotation tools in more detail, introducing you to key terms and concepts. Next, we will explore the pros and cons of building your own tool versus purchasing a commercially available tool or leveraging open source options.

We’ll give you considerations for choosing your tool and share our short list of the best data annotation tools available. You’ll also get a short list of critical questions to ask your tool provider.


Introduction:

Will this guide be helpful to me?

This guide will be helpful if:

- You are beginning a machine learning project and have data you want to clean and annotate to train, test, and validate your model.

- You are working with a new data type and need to understand the best tools available for annotating that data.

- Your data annotation needs have evolved (e.g., you need to add features to your annotation), and you want to learn about tools that can handle what you’re doing today and what you’re adding to your process.

- You are in the production stage and must verify models using a human-in-the-loop process.

The Basics:

Data Annotation Tools and Machine Learning

What is Data Annotation?

In machine learning, data annotation is the process of labeling or tagging data to make it understandable for machine learning algorithms. You are marking, labeling, tagging, transcribing, or processing a dataset with the features you want your machine learning system to learn to recognize. Once deployed, your model should be able to identify these features independently and make decisions or take actions accordingly.

Annotated data reveals features that train your algorithms to identify the same patterns in unannotated data. This process is foundational in supervised learning and semi-supervised learning models that involve supervised components.
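To make the idea concrete, here is a toy sketch of how annotated examples let a model label unannotated data. It assumes a 1-nearest-neighbor rule and made-up two-number feature vectors standing in for real image or text features; production models are far more complex, but the principle of learning from human-applied labels is the same.

```python
import math

# Hypothetical annotated examples: (feature vector, human-applied label).
# The two numbers stand in for whatever features a real model would extract.
annotated = [
    ((1.0, 1.2), "cat"),
    ((0.9, 0.8), "cat"),
    ((5.0, 5.5), "dog"),
    ((5.2, 4.9), "dog"),
]

def predict(features):
    """Label an unannotated example with the label of its nearest annotated neighbor."""
    _, nearest_label = min(annotated, key=lambda pair: math.dist(pair[0], features))
    return nearest_label

# Unannotated data: the model assigns labels learned from the annotations.
print(predict((1.1, 1.0)))  # cat
print(predict((4.8, 5.1)))  # dog
```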

What Are Data Annotation Tools?

A data annotation tool is a cloud-based, on-premise, or containerized software solution that can be used to annotate production-grade training data for machine learning. While some organizations take a do-it-yourself approach and build their own tools, many data annotation tools are available as open source or freeware, and others are offered commercially for lease or purchase.

Data annotation tools are generally designed to label specific types of data, such as image, video, text, audio, spreadsheet, or sensor data, so that it can be used by AI models. They also offer different deployment models, including on-premise, container, SaaS (cloud), and Kubernetes, each providing distinct benefits based on the needs of the project.

6 Important Data Annotation Tool Features

1) Dataset management

Annotation begins and ends with a comprehensive way of managing the dataset you plan to annotate. As a critical part of your workflow, you need to ensure that the tool you are considering will actually import and support the high volume of data and file types you need to label. Dataset management includes searching, filtering, sorting, cloning, and merging datasets.

Different tools save annotation output in different ways, so make sure the tool will meet your team’s output requirements. Finally, your annotated data must be stored somewhere. Most tools support local and network storage, but support for cloud storage, especially your preferred cloud vendor, can be hit or miss, so confirm which storage targets the tool supports.
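As an illustration of how output formats differ, the sketch below converts a bounding box between two widely used conventions: COCO, which stores `[x_min, y_min, width, height]`, and Pascal VOC, which stores the corners `xmin, ymin, xmax, ymax`. It treats both as the same pixel coordinate space and ignores the 1-based pixel convention some VOC tooling uses; check what your tools actually export before relying on it.

```python
from typing import Dict, List

def coco_to_voc(bbox: List[float]) -> Dict[str, float]:
    """COCO box [x_min, y_min, width, height] -> VOC-style corner coordinates."""
    x, y, w, h = bbox
    return {"xmin": x, "ymin": y, "xmax": x + w, "ymax": y + h}

def voc_to_coco(box: Dict[str, float]) -> List[float]:
    """VOC-style corners -> COCO box [x_min, y_min, width, height]."""
    return [box["xmin"], box["ymin"], box["xmax"] - box["xmin"], box["ymax"] - box["ymin"]]

voc = coco_to_voc([10, 20, 30, 40])
print(voc)               # {'xmin': 10, 'ymin': 20, 'xmax': 40, 'ymax': 60}
print(voc_to_coco(voc))  # [10, 20, 30, 40]
```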

2) Annotation methods

This is obviously the core feature of data annotation tools - the methods and capabilities to apply labels to your data. But not all tools are created equal in this regard. Many tools are narrowly optimized to focus on specific types of labeling, while others offer a broad mix of tools to enable various types of use cases.

Nearly all offer some type of data or document classification to guide how you identify and sort your data. Depending on your current and anticipated future needs, you may wish to focus on specialists or go with a more general platform. The common types of annotation capabilities provided by data annotation tools include building and managing ontologies or guidelines, such as label maps, classes, attributes, and specific annotation types.

Here are just a few examples:

- Image or video: Bounding boxes, polygons, polylines, classification, 2-D and 3-D points, segmentation (semantic or instance), tracking, interpolation, or transcription.

- Text: Transcription, sentiment analysis, named entity recognition (NER), parts-of-speech (POS) tagging, dependency parsing, or coreference resolution.

- Audio: Audio labeling, audio-to-text transcription, tagging, or time labeling.
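The ontology mentioned above (label maps, classes, and attributes) acts as a schema that every annotation must fit. The following is a minimal sketch assuming a simple dictionary-based ontology with made-up classes; real tools define their own ontology formats, so `ONTOLOGY` and `validate` here are hypothetical.

```python
# Hypothetical ontology: each class lists the attributes an annotator may set
# and the values each attribute allows.
ONTOLOGY = {
    "vehicle": {"type": {"car", "truck", "bus"}, "occluded": {"yes", "no"}},
    "pedestrian": {"occluded": {"yes", "no"}},
}

def validate(annotation: dict) -> list:
    """Return a list of problems; an empty list means the annotation fits the ontology."""
    label = annotation.get("label")
    if label not in ONTOLOGY:
        return [f"unknown class: {label!r}"]
    errors = []
    allowed = ONTOLOGY[label]
    for name, value in annotation.get("attributes", {}).items():
        if name not in allowed:
            errors.append(f"attribute {name!r} not defined for {label!r}")
        elif value not in allowed[name]:
            errors.append(f"value {value!r} not allowed for {name!r}")
    return errors

print(validate({"label": "vehicle", "attributes": {"type": "car"}}))     # []
print(validate({"label": "pedestrian", "attributes": {"type": "car"}}))  # ["attribute 'type' not defined for 'pedestrian'"]
```

Checks like this are one reason tools let you bulk upload classes and attributes: a single source of truth keeps labels consistent across annotators.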

An emerging feature in many data annotation tools is automation, or auto-labeling. Using AI, many tools assist your human labelers in improving their annotations (e.g., automatically converting a four-point bounding box to a polygon), or even annotate your data automatically without a human touch. Some tools can also learn from the actions your human annotators take to improve auto-labeling accuracy.

Some annotation tasks are ripe for automation. For example, if you use pre-annotation to tag images, a team of data labelers can determine whether to resize or delete a bounding box. This can shave time off the process for a team that needs images annotated at pixel-level segmentation. Still, there will always be exceptions, edge cases, and errors with automated annotations, so it is critical to include a human-in-the-loop approach for both quality control and exception handling.
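One common way to keep humans in the loop with pre-annotation is to route items by the model’s confidence: high-confidence pre-annotations go to a lighter human spot check, and the rest go to full human review and correction. This is a minimal sketch; the `PreAnnotation` record and the 0.9 threshold are hypothetical and would be tuned per project.

```python
from dataclasses import dataclass

@dataclass
class PreAnnotation:
    item_id: str
    label: str
    confidence: float  # model confidence, assumed to be in [0, 1]

def route(pre_annotations, threshold=0.9):
    """Split model pre-annotations into a spot-check queue and a full-review queue."""
    spot_check, full_review = [], []
    for p in pre_annotations:
        (spot_check if p.confidence >= threshold else full_review).append(p)
    return spot_check, full_review

batch = [
    PreAnnotation("img-001", "car", 0.97),
    PreAnnotation("img-002", "car", 0.55),
    PreAnnotation("img-003", "pedestrian", 0.91),
]
spot_check, full_review = route(batch)
print([p.item_id for p in spot_check])   # ['img-001', 'img-003']
print([p.item_id for p in full_review])  # ['img-002']
```

Even the spot-check queue still gets human eyes, reflecting the point above that automated annotations always produce exceptions and errors.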

Automation can also refer to developer interfaces for running automations: an application programming interface (API) and software development kit (SDK) that allow programmatic access to and interaction with the data.

3) Data quality control

The performance of your machine learning and AI models will only be as good as your data. Data annotation tools can help manage the quality control (QC) and verification process. Ideally, the tool will have embedded QC within the annotation process itself.

For example, real-time feedback and the ability to open issues during annotation are important. Workflow processes such as labeling consensus may also be supported. Many tools provide a quality dashboard to help managers view and track quality issues and assign QC tasks back to the core annotation team or to a specialized QC team.

4) Workforce management

Every data annotation tool is meant to be used by a human workforce - even those tools that may lead with an AI-based automation feature. You still need humans to handle exceptions and quality assurance as noted before. As such, leading tools will offer workforce management capabilities, such as task assignment and productivity analytics measuring time spent on each task or sub-task.
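Productivity analytics of the kind described above often start from a simple task log. The sketch below computes average minutes per task for each worker; the log layout `(worker, task_id, start, end)` and the sample entries are hypothetical, not any particular tool’s export format.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical task log: (worker, task_id, start, end) as ISO 8601 timestamps.
task_log = [
    ("ana", "t1", "2024-01-08T09:00:00", "2024-01-08T09:04:00"),
    ("ana", "t2", "2024-01-08T09:05:00", "2024-01-08T09:11:00"),
    ("ben", "t3", "2024-01-08T09:00:00", "2024-01-08T09:03:00"),
]

def avg_minutes_per_task(log):
    """Average time each worker spends per task, in minutes."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for worker, _task, start, end in log:
        elapsed = datetime.fromisoformat(end) - datetime.fromisoformat(start)
        totals[worker] += elapsed.total_seconds() / 60
        counts[worker] += 1
    return {w: totals[w] / counts[w] for w in totals}

print(avg_minutes_per_task(task_log))  # {'ana': 5.0, 'ben': 3.0}
```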

Your data labeling workforce provider may bring its own technology to analyze data associated with quality work. It may use tools such as webcams, screenshots, inactivity timers, and clickstream data to identify how it can support workers in delivering quality data annotation.

Most importantly, your workforce must be able to work with and learn the tool you plan to use. Further, your workforce provider should be able to monitor worker performance and work quality and accuracy. It’s even better when they offer you direct visibility, such as a dashboard view, into the productivity of your outsourced workforce and the quality of the work performed.

5) Security

Whether annotating sensitive protected personal information (PPI) or your own valuable intellectual property (IP), you want to make sure that your data remains secure. Tools should limit an annotator’s viewing rights to data not assigned to her, and prevent data downloads. Depending on how the tool is deployed, via cloud or on-premise, a data annotation tool may offer secure file access (e.g., VPN).

For use cases that fall under regulatory compliance requirements, many tools also log a record of annotation details, such as the date, time, and annotation author. If you are subject to HIPAA, SOC 1, SOC 2, PCI DSS, or SSAE 16 requirements, carefully evaluate whether your data annotation tool partner can help you maintain compliance.
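The two controls described above, restricting annotators to their assigned data and logging who annotated what and when, can be sketched as follows. The assignment table, function names, and in-memory `AUDIT_LOG` are hypothetical illustrations; a real deployment would enforce access on the server and keep the audit trail in durable, tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical assignment table: which annotator may view which item.
ASSIGNMENTS = {"item-17": "ana", "item-18": "ben"}
AUDIT_LOG = []  # in-memory stand-in for a durable audit trail

def can_view(annotator: str, item_id: str) -> bool:
    """Annotators may only view items assigned to them."""
    return ASSIGNMENTS.get(item_id) == annotator

def record_annotation(annotator: str, item_id: str, label: str) -> dict:
    """Save an annotation and append an audit entry with author, date, and time."""
    if not can_view(annotator, item_id):
        raise PermissionError(f"{annotator} is not assigned to {item_id}")
    entry = {
        "item_id": item_id,
        "label": label,
        "author": annotator,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    AUDIT_LOG.append(entry)
    return entry

record_annotation("ana", "item-17", "pedestrian")
print(len(AUDIT_LOG))  # 1
```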

6) Integrated labeling services

As mentioned earlier, every tool requires a human workforce to annotate data, and the people and technology elements of data annotation are equally important. As such, many data annotation tool providers offer a workforce network to provide annotation as a service. The tool provider either recruits the workers or provides access to them via partnerships with workforce providers.

While this feature makes for convenience, any workforce skill and capability should be evaluated separately from the tool capability itself. The key here is that any data annotation tool should offer the flexibility to use the tool vendor’s workforce or the workforce of your choice, such as a group of employees or a skilled, professionally managed data annotation team.

Build vs. Buy: A Critical Decision for Data Annotation Tools

Just a few years ago, there weren’t many data annotation tools available to buy. Most early movers had to use what was available via open source or build their own tools if they wanted to apply AI to solve a painful business problem or create a disruptive product.

Starting in about 2018, a wave of commercial data annotation tools became available, offering full-featured, complete-workflow commercial tools for data labeling. The emergence of these third-party, professionally developed tools began to force a discussion within data science and AI project teams around whether to continue to take a DIY approach and build their own tools or purchase one. And if the answer was to purchase a data annotation tool, they still needed to decide how to select the right tool for their project.

When to build your own data annotation tool

Even though there are third-party tools available to purchase, it may still make business sense to build a data annotation tool. Building your own tool provides you with the ultimate level of control - from the end-to-end workflow of the annotation process, to the type of data you can label and the resulting outputs.

And, as you continue to iterate on your business processes and your machine learning models, you can make changes quickly, using your own developers and setting your own priorities. You also can apply technical controls to meet your company’s unique security requirements. And finally, an organization may want all of its AI tooling to be part of its intellectual property, and building a data annotation tool internally allows it to do that.

However, when you’re building a tool, you often face many unknowns at the beginning, and the scope of tool requirements can quickly shift and evolve, causing teams to lose time. There is also the additional overhead of standing up the infrastructure needed to develop and run the tooling, as well as development resources required to maintain the data annotation tool.

When to buy a data annotation tool

Generally, buying a tool that is commercially available can be less expensive because you avoid the upfront development and ongoing direct support expenses. This allows you to focus your time and resources on your core project:

- Without the distraction of supporting and expanding features and capabilities for an in-house tool that is custom-built; and

- Without bearing the ongoing burden of funding the tool to ensure its continued success.

Buying an existing data annotation tool can accelerate your project timeline, enabling you to get started more quickly with an enterprise-ready, tested data labeling tool. Additionally, tooling vendors work with many different customers and can incorporate industry best practices into their data annotation tools. Finally, you can usually configure a commercial tool’s features to meet your needs, and for any data annotation workload there is usually more than one such tool available.

Of course, a third-party data annotation tool is not typically built with your specific use case or workflow in mind, so you may sacrifice some level of control and customization. And as your project or product evolves, you may find that your data annotation tool requirements change over time. If the tool you originally bought doesn’t support your new requirements, you will need to build or buy integrations or separate tools to meet your new needs.

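| | BUILD | BUY |
|---|---|---|
| PROS | Full control over the end-to-end workflow, data types, and outputs; quick changes using your own developers and priorities; custom technical security controls; tooling remains part of your IP | Usually less expensive (no upfront development or ongoing direct support costs); faster start with an enterprise-ready, tested tool; industry best practices built in; configurable features |
| CONS | Many unknowns and shifting requirements can cost time; infrastructure overhead to develop and run the tool; ongoing development resources to maintain it | Not built for your specific use case or workflow, so less control and customization; may not support new requirements, requiring extra integrations or tools |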

The open source option for data annotation tools

There are open source data annotation tools available. You can use an open source tool and support it yourself, or use it to jump-start your own build effort. There are many open source projects for tooling related to image, video, natural language processing, and transcription, and such a tool can be a great option for a one-time project.

But often an open source tool will present challenges when you try to scale your project into production, as these tools are typically designed around a single user and offer poor or insufficient workflow options for a team of data labelers. Additionally, you need to have the technical expertise on hand to deploy and maintain the tool. Many people are lured by open source being “free” and forget to factor in the total cost of ownership - the time and expense required to develop the workflows, workforce management, and quality assurance management that are necessary and inherently present in commercial data annotation tools.

Using Growth Stage to Decide: Buy vs. Build for Data Annotation Tools

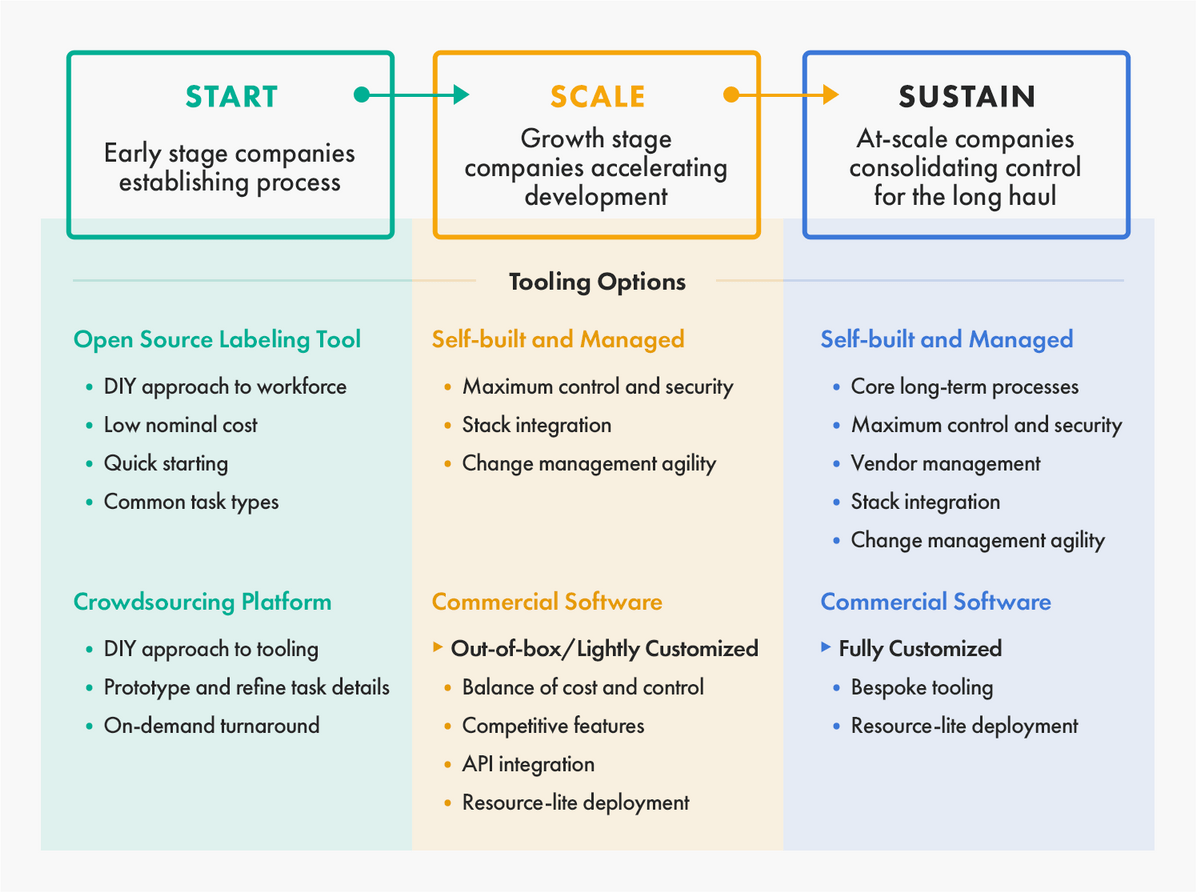

Another helpful way to look at the build versus buy question is to consider your stage of organizational growth.

- Start: In the early stages of growth, freeware or open source data annotation tools can make sense if you have development resources and you want to build your own tool. You also could choose a workforce that provides a data annotation tool. But be careful not to unnecessarily tie your data annotation tool to your workforce; you’ll want the flexibility to make changes later.

- Scale: If you’re at the growth stage, you might want the ability to customize commercial data annotation tools, which you can do with little to no development resources. If you build, you’re going to need to allocate resources to maintain and improve your tool. Also consider your existing storage and, if you use a cloud vendor, make sure the tool works with your requirements.

- Sustain: When you’re operating at scale, it’s likely to be important for you to have control, enhanced data security, or the agility to make changes, such as feature enhancements. In that case, open source tools that are self-built and managed might be your best bet.

How to Choose a Data Annotation Tool

There is a lot to consider in the build vs. buy equation. If, after weighing all of the factors, you conclude that the potential gains of customization and retaining IP are not worth the time and expense of a DIY approach, your next decision is which commercial tool to purchase. In this section we will explore some of those considerations.

1) What is your use case?

First and foremost, the type of data you want to annotate and your business processes for doing the work will influence your tool choice. There are tools for labeling text, image, and video. Some image labeling tools also have video labeling capabilities.

Of note, more and more data annotation tool providers are realizing they want to do more than provide a singular tool - they want to provide a holistic technology platform for data annotation for machine learning. A simple data annotation tool provides features that make it easy to enrich the data. A platform provides an environment that supports the data annotation and AI development process.

A platform may include features such as multiple annotation options (e.g., 2-D, 3-D, audio, text), more than one storage option (e.g., local, network, cloud), or quality control workflow. It also may be able to accept pre-annotated data or may include embedded neural networks that learn from manual annotations made using the platform. Considering a platform may be helpful if you anticipate your project or product needs evolving significantly over time, as a platform may provide greater flexibility in the future.

2) How will you manage quality control requirements?

How you want to measure and control quality is also an important consideration for your data annotation tool. Many commercially-available tools have quality control (QC) features built-in that can review, provide feedback, and correct tasks. For example, QC options might include:

- Consensus - Annotator agreement determines quality. For example, when annotators disagree on an edge case, the task is passed to additional annotators until a set level of agreement is reached. Feedback can then help the workforce learn how to annotate those edge cases correctly.

- Gold standard - The correct answer is known. The tool measures quality based on correct and incorrect tasks.

- Sample review - The tool reviews a random sample of completed tasks for accuracy.

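- Intersection over union (IoU) - A metric used for object detection in images: the area where two annotations overlap divided by the total area they cover together. It can compare your hand-annotated, ground-truth images with the annotations your model predicts, or one annotator’s boxes with another’s.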
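The QC options above can be sketched in a few lines each. This is a simplified illustration, not any tool’s implementation: consensus is modeled as a majority vote with a hypothetical 60% agreement threshold, gold standard as plain accuracy on tasks with known answers, and IoU for axis-aligned boxes given as `(xmin, ymin, xmax, ymax)`.

```python
from collections import Counter

def consensus(labels, min_agreement=0.6):
    """Return the majority label if enough annotators agree, else None (escalate the task)."""
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) >= min_agreement else None

def gold_accuracy(answers, gold):
    """Share of gold-standard tasks (known correct answers) an annotator got right."""
    correct = sum(answers.get(task) == truth for task, truth in gold.items())
    return correct / len(gold)

def iou(a, b):
    """Intersection over union of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

print(consensus(["car", "car", "truck"]))  # car
print(consensus(["car", "truck"]))         # None: pass to another annotator
print(gold_accuracy({"t1": "cat", "t2": "dog"}, {"t1": "cat", "t2": "cat"}))  # 0.5
print(round(iou((0, 0, 10, 10), (5, 5, 15, 15)), 3))  # 0.143
```

In the IoU example, the boxes overlap in a 5 x 5 square (area 25) and together cover 100 + 100 - 25 = 175, so IoU is 25 / 175.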

Some tools can even automate a portion of your QC. However, whenever you are using automation for a portion of your data labeling process, you will need people to perform QC on that work. For example, optical character recognition (OCR) software has an error rate of 1% to 3% per character. On a page with 1,800 characters, that’s 18-54 errors. For a 300-page book, that’s 5,400-16,200 errors. You will want a process that includes a QC layer performed by skilled labelers with context and domain expertise.

3) Who will be using the tool?

An often overlooked aspect of tool selection is workforce. Whether your data is annotated by employees or contractors, crowdsourcing, or an outsourcing provider, your workforce will need access to your data annotation tool, training on how to use it, and specific task instructions unique to your use case. Make sure you take into account the answers to these questions:

- Do you have access to a workforce that has pre-existing knowledge of viable commercial tools for your project?

- Does that team have prior experience using the tool(s) you are considering?

- If not, do you have detailed documentation and a proven training approach to bring the workforce up to speed?

- Do you have a process by which you can ensure the required level of quality for your project?

4) Do you need a vendor or a partner?

The company you buy a data annotation tool from can be just as important as the tool itself. Here, you’ll want to consider how easy it is to do business with the company that’s providing the tool and their openness for collaboration. AI development is an iterative process, and you will need to make changes along the way. Are they willing to consider feedback or ideas for new features for their tool that would make your tasks easier or make your AI models run cleaner and with better results? Aim to find a partner who is willing to work with you on such things, not simply a vendor to provide a tool.

As you research your workforce options, you may discover some data labeling services that provide their own tool. However, be careful not to tie your tool to your workforce unnecessarily. You’ll want the flexibility to change either your workforce or your tool, based on your business needs and the solutions available to you, especially as new tools and workforce options emerge. A data labeling service should be able to provide best practices and share recommendations for choosing your tool based on their workforce strategy.

Also, keep in mind that your annotation tasks are likely to change over time. Every machine learning modeling task is different. The set of instructions you are using to collect, clean, and annotate your data today may change in the coming weeks - even days. Anticipating those changes is helpful, and you’ll want to consider that when you’re making the decision about the data annotation tool you select and the workforce that will use it to label your data.

The Best Data Annotation Tools:

Commercial and Open Source

Here’s a closer look at some of the data annotation tools we consider to be among the best available on the market today.

Commercial Data Annotation Tools

Commercially available data annotation tools are likely your best choice, particularly if your company is at the growth or enterprise stage. If you are operating at scale and want to sustain that growth over time, you can get commercially available tools and customize them with few development resources of your own.

Open Source Data Annotation Tools

Open source data annotation tools allow you to use or modify the source code. You can change or customize features to fit your needs. Developers who use open source tools are part of a collaborative community of users who can share use cases, best practices, and feature improvements made by altering the original source code.

Open source tools can give you more control over features and can provide great flexibility as your tasks and data operations evolve. However, using open source tools comes with the same commitment as building your own tool. You will have to make investments to maintain the platform over time, which can be costly.

While open source tools can be good for learning or testing early versions of a commercial application, they often present barriers to scale. Most open source tools are not comprehensive labeling solutions and lack robust dataset management, label automation, or other features that drive efficiency (like data clustering). In addition, few open source tools provide quality assurance workflows or accuracy analytics, and the lack of them can hinder data quality.

It’s important to know that open source communities provide support mostly via online documentation, FAQs, and tutorials. There are no support numbers to call, and some open source tools don’t provide the data privacy and security measures needed to comply with GDPR and HIPAA.

There are several open source data annotation tools available, many of which have been available for years and have improved over time.

Below are the commercial tools and two open source tools that we recommend and work with extensively. As you can see, no single tool does it all; instead, several tools have special capabilities for particular use cases.

Commercial tools: Dataloop, Datasaur, Encord, Hasty, Labelbox, Pix4D, Pointly, Segments.ai

Open source tools: CVAT, QGIS

Capabilities compared across these tools:

- Supported annotation types, computer vision: 2-D; geospatial and orthomosaic; 3-D point cloud and LiDAR; video; DICOM

- Supported annotation types, NLP: audio transcription; named entity recognition; intelligent document processing

- Deployment options: on-premise; virtual private cloud; SaaS; external storage integration

Iteration & Evolution: Adapting to Changing Data Annotation Needs and New Tools

You will uncover buy vs. build implications throughout your product development lifecycle. From sourcing the data to labeling, modeling, deployment, and improvements - your data annotation tool plays a key role in your project’s success. That’s why your tool choice is so important - because it affects your workflow from the beginning stages of model development through model testing and into production.

With a market size of USD 805.6 million in 2022, the data annotation tool market will expand as adoption increases in the automotive, retail, and healthcare industries. As new options emerge, you may want to reconsider what is available to you.

Why change data annotation tools?

As you train, test, and validate your model, and even as you tune it in production, your data annotation needs may change. A tool that was built for your first purpose might not serve you as well in the future as your use case, tasks, and business rules evolve. That’s why it’s important to avoid getting into a long-term contract with a single tool or workforce provider, or tying your tool to your workforce.

Here are a few examples of reasons you might want to change your tool during a project:

- You began building a tool but are now considering buying because commercial tools have added new features that meet your needs.

- The tool doesn’t offer automation, or lacks the automation features you want.

- The cost of access to the commercial tool increases.

How do I change data annotation tools?

When you change your data annotation tool in the middle of training or production, you’ll likely ask the same questions you’d ask if you were buying the tool for a new project. However, there will be considerations regarding the ease of transferring your data into a new tool and resuming data annotation in the new tool.

For example, you will have to anticipate and manage details related to:

- Introducing a different data ingestion pipeline

- How data is stored

- Output format

- Use of a new tool - and training your data workers to use it

- Your workforce provider’s technology to track the quality and productivity of its workers, and how they capture the data required to do it.
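When you move annotations into a new tool, with a new ingestion pipeline, storage, and output format, a cheap sanity check is to compare per-class label counts before and after migration. The sketch below assumes hypothetical exports in which the old tool names the label field `label` and the new one `category`; your tools’ actual field names will differ.

```python
from collections import Counter

def class_counts(annotations, label_key):
    """Count annotations per class, reading the label from the given field name."""
    return Counter(a[label_key] for a in annotations)

def diff_counts(before, after):
    """Classes whose counts changed during migration, as {class: (before, after)}."""
    return {
        c: (before.get(c, 0), after.get(c, 0))
        for c in set(before) | set(after)
        if before.get(c, 0) != after.get(c, 0)
    }

# Hypothetical exports: the old tool calls the field "label", the new one "category".
old_export = [{"label": "car"}, {"label": "car"}, {"label": "pedestrian"}]
new_export = [{"category": "car"}, {"category": "pedestrian"}]

print(diff_counts(class_counts(old_export, "label"),
                  class_counts(new_export, "category")))  # {'car': (2, 1)}
```

An empty result means every class survived the move with the same count; it does not prove the geometry or attributes transferred correctly, so spot checks are still worthwhile.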

While we know it’s important to be flexible when it comes to your data annotation tool, we have yet to learn how long one tool can meet your needs and how long you should wait before evaluating your options again. The data annotation tool ecosystem is just gathering steam, and those who were among the first teams to monetize their data annotation tools are just starting to renew contracts with their earliest adopters.

This is one aspect of the market we’re watching so we can provide exceptional consultative service to our clients and ensure they are using the best-fit tool for their needs.

Questions to Ask Your Data Annotation Tool Provider

Here are questions to keep in mind when you’re speaking with a data annotation tool provider:

Strategic Approach

- Of all of the features available with your tool, what does your team consider to be your tool’s specialty - and why?

- How long have you been building, maintaining, and supporting this data annotation tool?

- How is your tool different from other commercially-available tools?

- Do you consider your product to be a tool or a platform? What other aspects of the machine learning data labeling process does your tool support?

- Is your team open to receiving feedback about your data annotation tool, its features, and ways it could be improved to better serve the needs of our use case?

- What are your pricing methods? (e.g., monthly, annual, by annotation, by worker)

Key Features

- Do you offer dataset management?

- Where can files be stored? What capacity does the tool support, in terms of how much data can be moved into the tool? Can I upload pre-annotated images into the tool?

- Do you offer an API and/or SDK? If so, how robust are they?

- Do you offer data management?

- Can I bulk upload classes and attributes into the tool?

- Does your tool allow us to deploy a large and growing workforce to use it?

- What security compliance or certifications does your tool have?

Quality

- Is quality control (QC) built into your tooling platform? What does that workflow look like?

- What kind of quality assurance (QA) do you provide?

Machine Learning

- Have you built any AI into your tool?

- Can I bring my own algorithm and plug it into your tool?

Tool Agnostic:

The CloudFactory Advantage

Though the specific tools suggested above are a great place to start, it’s best to avoid dependence on any single platform for your data annotation needs. After all, no two datasets present exactly the same challenges, and no particular tool will be the best option in all circumstances. Because training data challenges are unique and dynamic in nature, tying your workforce to one tool can be a strategic liability.

For a more flexible approach to labeling text, images, and video, you’ll need to develop a versatile team that can adapt to new tools. At CloudFactory, this emphasis on versatility guides how we select and train our cloud workers. We hire team members with the skills to work on any platform our clients prefer. No matter the tool you use or the type of training data you need, we have workers ready and able to get started.

The People + Process Component

The maturity of your data annotation tool and its features affects how you and your data workforce design workflows, quality control, and many other aspects of your data work. A tool that doesn’t take your workforce and your processes into consideration will cost you time and efficiency as you build workarounds for things you’ll wish were native to the tool.

CloudFactory delivers the people and the process, and we know data annotation because we’ve been doing it for the better part of a decade, working remotely for our clients. Our data annotation teams are vetted, trained, and actively managed to deliver higher engagement, accountability, and quality.

- Work from anywhere - We work how you work, as an extension of your team. We can use any tool and follow the rules you set. Using our proprietary platform, you have direct communication with a team leader to provide feedback. Workers can share their observations to drive improved processes, higher productivity, and better quality.

- Scale the work - We can flex up or down, based on your business requirements.

- Select and train top-notch workers - Our workforce strategy values people, and we make sure workers understand the importance of the tasks they are doing for your business. We monitor worker performance for productivity and quality, and our team leaders come alongside workers to train and encourage them.

- Flexible pricing model - You can scale work up or down without renegotiating your contract. We do not lock you into a long-term contract or tie our workforce to your tool.

Are you ready to select the right data annotation tool? Find out how we can help you save time and money.

Reviewers

Anthony Scalabrino, sales engineer at CloudFactory, a provider of professionally managed teams for data annotation for machine learning.

Nir Buschi, Co-founder & Chief Business Officer at Dataloop AI, an enterprise-grade data platform for AI systems in development and in production, providing an end-to-end data workflow including data annotation, quality control, data management, automation pipelines and autoML.


Frequently Asked Questions

What is annotated data?

In supervised or semi-supervised machine learning, annotated data is labeled, tagged, or processed for the features you want your machine learning system to learn to recognize. An example of annotated data is sensor data from an autonomous vehicle, where the data has been enriched to show exactly where there are pedestrians and other vehicles.

What is a data annotator?

A data annotator is:

- Someone who works with data and enriches it for use with machine learning; or

- An auto-labeling feature, or automation, built into a data annotation tool to enrich data. That automation is powered by a machine learning model that predicts annotations for your data, based on the training data it has consumed and how the model was tuned during testing and validation.

What is data annotation?

In supervised or semi-supervised machine learning, data annotation is the process of labeling data to show the outcome you want your machine learning model to predict. You are enriching - also known as labeling, tagging, transcribing, or processing - a dataset with the features you want your machine learning system to learn to recognize. Ideally, once you deploy your model, the machine will be able to recognize those features on its own and make a decision or take some action as a result.

What are data annotation tools?

Data annotation tools are cloud-based, on-premise, or containerized software solutions that can be used to label or annotate production-grade training data for machine learning. They can be available via open source or freeware, or they may be offered commercially, for lease. Data annotation tools are designed to be used with specific types of data, such as image, text, audio, spreadsheet, sensor, photogrammetry, or point-cloud data.

What is an image annotation tool?

An image annotation tool is a cloud-based, on-premise, or containerized software solution that can be used to label, tag, or annotate images or frame-by-frame video for production-grade training data for machine learning. Features may include bounding boxes, polygons, 2-D and 3-D points, segmentation (semantic or instance), or transcription. Some image annotation tools include quality control features such as intersection over union (IoU), a metric commonly used in object detection. IoU measures how closely two annotations of the same object overlap by dividing the area of their intersection by the area of their union. It can compare your hand-annotated, ground-truth labels with the annotations your model predicts, or compare one annotator's labels with another's to measure consensus.
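To make the IoU check concrete, here is a minimal Python sketch for axis-aligned bounding boxes. It assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples; real tools may store boxes differently (for example, as x, y, width, height). The `passes_review` helper and its 0.5 threshold are hypothetical illustrations of how a quality rule might use the score, not settings taken from any particular annotation tool.

```python
from typing import Tuple

# Assumed box convention: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; inverted or empty boxes count as zero."""
    x_min, y_min, x_max, y_max = box
    return max(0.0, x_max - x_min) * max(0.0, y_max - y_min)


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union: overlap area divided by combined area."""
    # The overlap rectangle; box_area() returns 0 if the boxes don't intersect.
    intersection = (
        max(box_a[0], box_b[0]),
        max(box_a[1], box_b[1]),
        min(box_a[2], box_b[2]),
        min(box_a[3], box_b[3]),
    )
    inter_area = box_area(intersection)
    # Union = both areas minus the overlap, which would otherwise count twice.
    union_area = box_area(box_a) + box_area(box_b) - inter_area
    if union_area == 0:
        return 0.0
    return inter_area / union_area


def passes_review(annotation: Box, ground_truth: Box, threshold: float = 0.5) -> bool:
    """Hypothetical QC rule: accept a label if it overlaps the reference enough."""
    return iou(annotation, ground_truth) >= threshold


if __name__ == "__main__":
    ground_truth = (10, 10, 50, 50)
    print(round(iou((20, 20, 60, 60), ground_truth), 3))  # 0.391
    print(passes_review((12, 12, 50, 50), ground_truth))  # True
```

In this example, the shifted box overlaps the ground truth by 900 square pixels out of a 2,300-square-pixel union, so it scores about 0.39 and would be sent back for review under the assumed 0.5 threshold. The tighter box scores about 0.90 and passes.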

What’s the best image annotation tool?

The best image annotation tool will depend on your use case, data workforce, size and stage of your organization, and quality requirements. Dataloop, Encord, Hasty, Labelbox, Pix4D, Pointly, and Segments.ai offer commercial annotation tools to label images that are used to train, test, and validate machine learning algorithms. CVAT and QGIS are open source tools you can use and customize for your own image annotation needs.

What is a video annotation tool?

A video annotation tool is a cloud-based, on-premise, or containerized software solution that can be used to label or annotate video or frame-by-frame images from video for production-grade training data for machine learning. It can be available via open source or freeware, or it may be offered commercially, for lease. Features may include bounding boxes, polygons, 2-D and 3-D points, or segmentation (semantic or instance).

What’s an online annotation tool?

An online annotation tool is a web-based software solution, hosted in the cloud, on-premise, or in containers, that can be used to label or annotate production-grade training data for machine learning. It can be available via open source or freeware, or it may be offered commercially. Online annotation tools are designed to be used with specific types of data, such as image, text, video, audio, spreadsheet, or sensor data.

What are text annotation tools?

Text annotation tools are cloud-based, on-premise, or containerized software solutions that can be used to annotate text to create production-grade training data for machine learning. This process can also be called labeling, tagging, transcribing, or processing. Text annotation tools can be available via open source or freeware, or they may be offered commercially.

Is there a list of video annotation tools?

Dataloop, Encord, Hasty, Labelbox, and Segments.ai offer commercial annotation tools that can be used to label video to train, test, and validate machine learning algorithms. CVAT is an open source video annotation tool you can use or customize for your own video annotation needs. The best video annotation tool will depend on your use case, data workforce, size and stage of your organization, and quality requirements.

What’s the best text annotation tool?

The best text annotation tool will depend on your use case, data workforce, size and stage of your organization, and quality requirements. DatasaurAI and Labelbox offer commercial annotation tools that can be used to analyze language and sentiment to train, test, and validate machine learning algorithms.