Machine learning models depend on data. Without a foundation of high-quality training data, even the most performant algorithms can be rendered useless. Indeed, robust machine learning models can be crippled when they are trained on inadequate, inaccurate, or irrelevant data in the early stages. When it comes to training data for machine learning, a longstanding premise remains painfully true: garbage in, garbage out.

Accordingly, no element is more essential in machine learning than quality training data. Training data refers to the initial dataset used to develop a machine learning model, from which the model creates and refines its rules. The quality of this data profoundly impacts the model's performance, setting a powerful precedent for all future applications that rely on the same training dataset. Ensuring high-quality training data is crucial for accurate predictions in machine learning projects.


If training data is a crucial aspect of any machine learning model, how can you ensure that your algorithm is absorbing high-quality datasets? For many project teams, the work involved in acquiring, labeling, and preparing training data is incredibly daunting. Sometimes, they compromise on the quantity or quality of training data – a choice that leads to significant problems later.

Don’t fall prey to this common pitfall. With the right combination of people, processes, and technology, you can transform your data operations to consistently produce high-quality training data. Achieving this requires seamless coordination between your human workforce, machine learning project teams, and labeling tools to ensure accurate training data and reliable machine learning models.

In this guide to training data, we’ll cover how to create the quality training data inputs your model craves. First, we’ll explore the idea of training data in more detail, introducing you to a number of related terms and concepts. From there, we’ll discuss the people, technology, and processes involved in developing first-rate training data.

We’ll also explore the challenges of cleaning and filtering training data, collaborating with teams, and using labeling tools to produce large volumes of high-quality data. Our guide will highlight the most productive approaches to these tasks, emphasizing the importance of effective management, feedback, and communication. As you’ll discover, creating powerful machine learning models often depends on the expertise and reliability of your human workforce in ensuring accurate training datasets.

Introduction:

Will this guide be helpful to me?

This guide will be helpful to you if you're using supervised learning and:

- You want to improve the quality of training data for your machine learning models; or

- You are ready to scale your team’s training data operations, and you want to maintain or improve the quality of your training data.

The Basics:

Training Data and Machine Learning

What is training data?

In machine learning, training data is the data you use to train a machine learning algorithm or model. Training data requires some human involvement to analyze or process the data for machine learning use. How people are involved depends on the type of machine learning algorithms you are using and the type of problem that they are intended to solve.

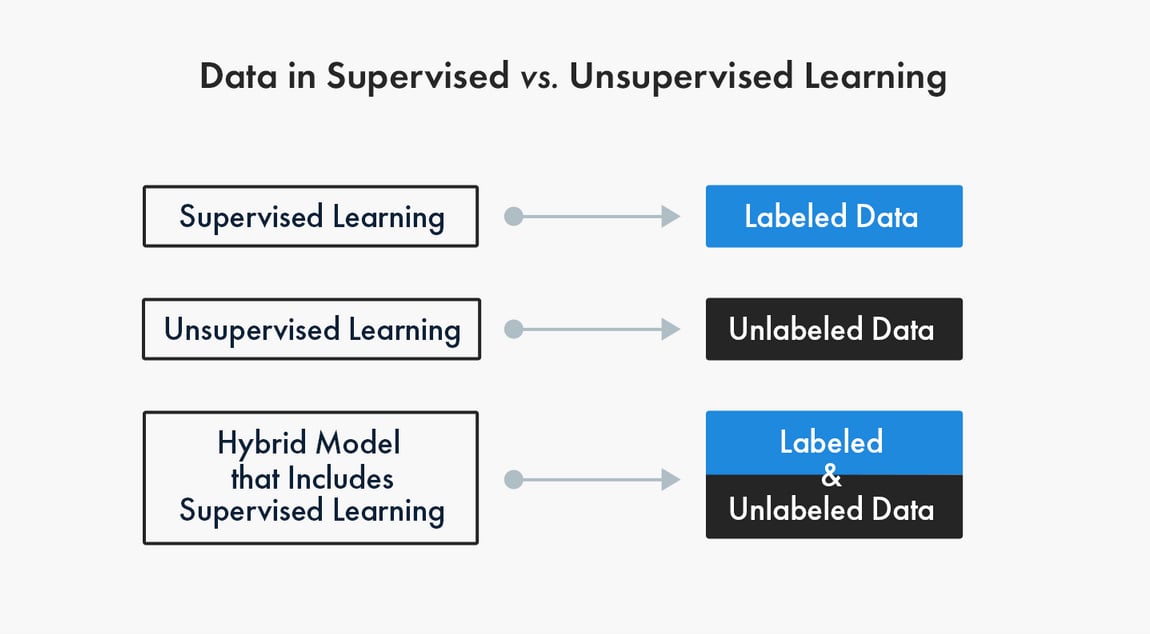

- With supervised learning, people are involved in choosing the data features to be used for the model. Training data must be labeled - that is, enriched or annotated - to teach the machine how to recognize the outcomes your model is designed to detect.

- Unsupervised learning uses unlabeled data to find patterns in the data, such as inferences or clustering of data points. There are also hybrid approaches, such as semi-supervised learning, that allow you to use a combination of supervised and unsupervised learning.

Training data comes in many forms, reflecting the myriad potential applications of machine learning algorithms. Training datasets can include text (words and numbers), images, video, or audio. And they can be available to you in many formats, such as a spreadsheet, PDF, HTML, or JSON. When labeled appropriately, your data can serve as ground truth for developing an evolving, performant machine learning model.

What is labeled data?

Labeled data is annotated to show the target, which is the outcome you want your machine learning model to predict. Data labeling is sometimes called data tagging, annotation, moderation, transcription, or processing. The process of data labeling involves marking a dataset with key features that will help train your algorithm. Labeled data explicitly calls out features that you have selected to identify in the data, and that pattern trains the algorithm to discern the same pattern in unlabeled data.

Suppose, for example, that you are using supervised learning to train a machine learning model to review incoming customer emails and send them to the appropriate department for resolution. One outcome for your model could involve sentiment analysis, or identifying language that could indicate a customer has a complaint. You could decide to label every instance of the words “problem” or “issue” within each email in your dataset.

That, along with other data features identified during data labeling and model testing, could help train the machine learning model to accurately predict which emails to escalate to a service recovery team, improving model performance.
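As a rough illustration of the email example above, the keyword labeling step could be sketched in Python as follows. The term list, the `label_email` helper, and the "escalate"/"routine" labels are hypothetical names chosen for this sketch; a real project would refine them with its labelers during model testing.

```python
import re

# Hypothetical keyword list taken from the example above; a real project
# would refine it during data labeling and model testing.
COMPLAINT_TERMS = ("problem", "issue")
PATTERN = re.compile(r"\b(" + "|".join(COMPLAINT_TERMS) + r")s?\b", re.IGNORECASE)


def label_email(text):
    """Return the character spans of complaint terms and a coarse label."""
    spans = [(m.start(), m.end(), m.group(0).lower()) for m in PATTERN.finditer(text)]
    return {"text": text, "spans": spans, "label": "escalate" if spans else "routine"}


emails = [
    "I have a problem with my last invoice.",
    "Thanks for the quick delivery!",
]
labeled = [label_email(e) for e in emails]
```

Keyword matching like this is only a starting point. It cannot handle edge cases such as "no problem at all," which is one reason human judgment stays in the loop.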

The way data labelers score, or assign weight to, each label and how they manage edge cases also affect the accuracy of your model. You may need to find labelers with domain expertise relevant to your use case. As you can imagine, the quality of the data labeling for your training data can determine the performance of your machine learning model.

Enter the human in the loop.

What is human in the loop?

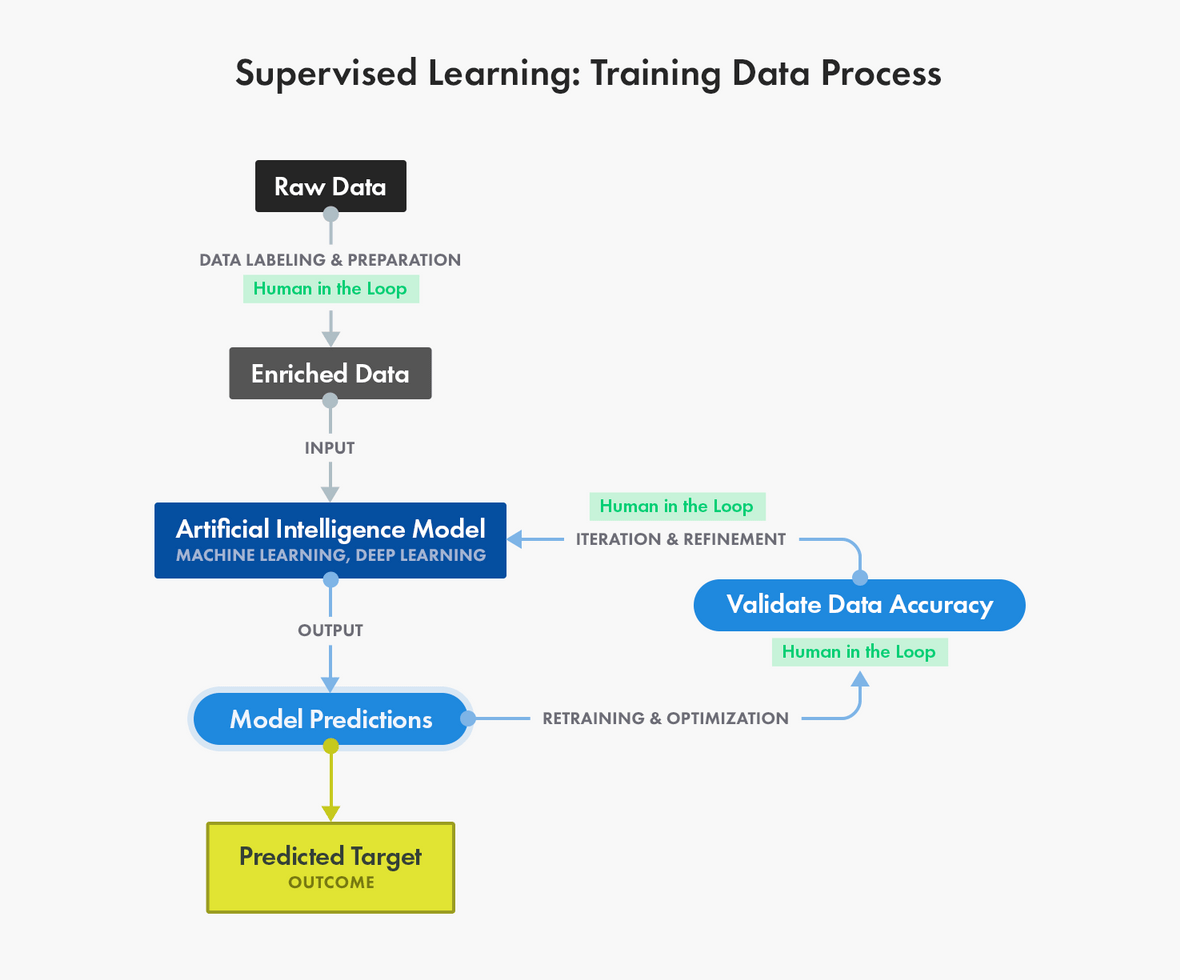

“Human in the loop” applies the judgment of people who work with the data that is used with a machine learning model. When it comes to data labeling, the humans in the loop are the people who gather the data and prepare it for use in machine learning.

Gathering the data includes getting access to the raw data and choosing the important attributes of the data that would be good indicators of the outcome you want your machine learning model to predict.

This is an important step because the quality and quantity of data that you gather will determine how good your predictive model could be. Preparing the data means loading it into a suitable place and getting it ready to be used in machine learning training.

Consider datasets that include point-cloud data from lidar-derived images that must be labeled to train machine learning models that operate autonomous vehicle (AV) systems. People use advanced digital tools, such as 3-D cuboid annotation software, to annotate features within that data, such as the occurrence, location, and size of every stop sign in a single image.

This is not a one-and-done approach, because with every test, you will uncover new opportunities to improve your model. The people who work with your data play a critical role in the quality of your training data. Every incorrect label can have an effect on your model’s performance.

How is training data used in machine learning?

Unlike other kinds of algorithms, which are governed by pre-established parameters that provide a sort of “recipe,” machine learning algorithms improve through exposure to pertinent examples in your training data.

The features in your training data and the quality of labeled training data will determine how accurately the machine learns to identify the outcome, or the answer you want your machine learning model to predict.

For example, you could train an algorithm intended to identify suspicious credit card charges with cardholder transaction data that is accurately labeled for the data features, or attributes, you decide are key indicators for fraud.

The quality and quantity of your training data determine the accuracy and performance of your machine learning model. If you trained your model using training data from 100 transactions, its performance likely would pale in comparison to that of a model trained on data from 10,000 transactions. When it comes to the diversity and volume of training data, more is usually better – provided the data is properly labeled.

“As data scientists, our time is best spent fitting models. So we appreciate it when the data is well structured, labeled with high quality, and ready to be analyzed,” says Lander Analytics Founder and Chief Data Scientist Jared P. Lander. His full-service consulting firm helps organizations leverage data science to solve real-world challenges.

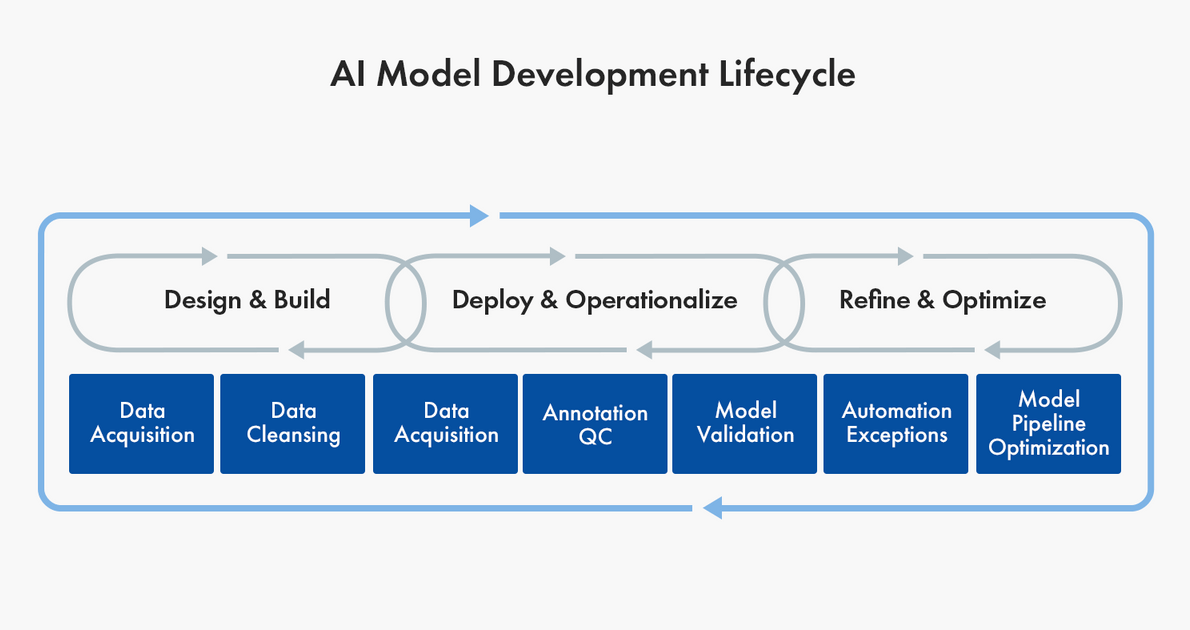

Training data is used not only to train but to retrain your model throughout the AI development lifecycle. Training data is not static: as real-world conditions evolve, your initial training dataset may be less accurate in its representation of ground truth as time goes on, requiring you to update your training data to reflect those changes and retrain your model.

What is the difference between training data and testing data?

It’s important to differentiate between training and testing data, though both are integral to improving and validating machine learning models. Whereas training data “teaches” an algorithm to recognize patterns in a dataset, testing data is used to assess the model’s accuracy.

More specifically, training data is the dataset you use to train your algorithm or model so it can accurately predict your outcome. Validation data is used to assess and inform your choice of algorithm and parameters of the model you are building. Test data is used to measure the accuracy and efficiency of the algorithm used to train the machine - to see how well it can predict new answers based on its training.

Take, for example, a machine learning model intended to determine whether or not a human being is pictured in an image. In this case, training data would include images, tagged to indicate the photo includes the presence or absence of a person. After feeding your model this training data, you would then run it on test data it has not seen, including images with and without people, and compare its predictions with labels that were held back for scoring. The algorithm’s performance on test data would then validate your training approach – or indicate a need for more or different training data.
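To make the distinction concrete, here is a minimal sketch of how a labeled dataset might be divided into training, validation, and test sets. The `split_dataset` helper, the 70/15/15 ratio, and the file names are assumptions made for illustration, not recommendations from this guide.

```python
import random


def split_dataset(examples, train=0.7, validation=0.15, seed=0):
    """Shuffle labeled examples and split them into three disjoint sets.

    The 70/15/15 ratio is an illustrative assumption.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Hypothetical labeled examples: (image file name, person present?)
examples = [(f"img_{i}.jpg", i % 2 == 0) for i in range(100)]
train_set, validation_set, test_set = split_dataset(examples)
```

Keeping the three sets disjoint matters: if test images also appear in the training set, the measured accuracy will look better than it would on new data.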

How can I get training data?

You can use your own data and label it yourself, whether you use an in-house team, crowdsourcing, or a data labeling service to do the work for you. You also can purchase training data that is labeled for the data features you determine are relevant to the machine learning model you are developing.

Auto-labeling features in commercial tools can help speed up your team, but they are not consistently accurate enough to handle production data pipelines without human review. Hasty, Dataloop, and V7 Labs have auto-labeling features in their enrichment tools.

Your machine learning use case and goals will dictate the kind of data you need and where you can get it. If you are using natural language processing (NLP) to teach a machine to read, understand, and derive meaning from language, you will need a significant amount of text or audio data to train your algorithm.

You would need a different kind of training data if you are working on a computer vision project to teach a machine to recognize or gain understanding of objects that can be seen with the human eye. In this case, you would need labeled images or videos to train your machine learning model to “see” for itself.

There are many sources that provide open datasets, such as Google, Kaggle and Data.gov. Many of these open datasets are maintained by enterprise companies, government agencies, or academic institutions.

How much training data do I need?

There’s no clear answer - no magical mathematical equation to answer this question - but more data is usually better. The amount of training data you need to create a machine learning model depends on the complexity of both the problem you seek to solve and the algorithm you develop to do it. One way to discover how much training data you will need is to build your model with the data you have and see how it performs.
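One way to "see how it performs" is to train on progressively larger slices of your data and score each model on the same held-out test set. The sketch below does this with synthetic two-cluster data and a simple nearest-centroid classifier. Both are stand-ins assumed for illustration, so the numbers it prints say nothing about your own problem.

```python
import random


def make_example(rng, label):
    """Draw a 2-D point from a synthetic cluster (toy stand-in for real data)."""
    center = 3.0 if label == 1 else 0.0
    return (rng.gauss(center, 1.0), rng.gauss(center, 1.0)), label


def fit_centroids(examples):
    """'Train' a nearest-centroid classifier: one mean point per label."""
    sums = {}
    for (x, y), label in examples:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(centroids, point):
    """Return the label whose centroid is closest to the point."""
    x, y = point
    return min(
        centroids,
        key=lambda lab: (x - centroids[lab][0]) ** 2 + (y - centroids[lab][1]) ** 2,
    )


def accuracy(centroids, examples):
    correct = sum(predict(centroids, point) == label for point, label in examples)
    return correct / len(examples)


rng = random.Random(42)
train_pool = [make_example(rng, i % 2) for i in range(1000)]
test_set = [make_example(rng, i % 2) for i in range(500)]

# Train on growing slices of the pool and score each model on the same test set.
learning_curve = [
    (n, accuracy(fit_centroids(train_pool[:n]), test_set)) for n in (10, 100, 1000)
]
for n, acc in learning_curve:
    print(f"{n:>5} training examples -> test accuracy {acc:.3f}")
```

If accuracy is still climbing at your largest training size, more labeled data is likely to help. If it has flattened, improving label quality or features may matter more than volume.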

Winning The Race to Quality Data

Quality training data is vital when you are creating reliable algorithms. According to research by analyst firm Cognilytica, more than 80% of artificial intelligence (AI) project time is spent on data preparation and engineering tasks.

Doing the work in-house can be costly and time-consuming, especially when ensuring high-quality training data. Outsourcing the work can be challenging, as there’s often little communication with the people working with your machine learning data, which can lead to lower quality. Crowdsourcing can be more expensive, as it uses consensus to measure quality, requiring multiple workers to complete the same task. The answer accepted as correct is the one that comes back from the majority of workers.
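The consensus approach described above can be sketched as a simple majority vote. The `consensus_label` helper, the agreement threshold, and the sample votes are hypothetical; crowdsourcing platforms may use different or weighted schemes.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return (label, agreement) for one task.

    The label is None when no answer is chosen by more than
    `min_agreement` of the workers.
    """
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement > min_agreement else None), agreement


# Hypothetical tasks: three workers label the same image.
votes_by_task = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["cat", "dog", "bird"],
}
results = {task: consensus_label(votes) for task, votes in votes_by_task.items()}
```

Note the cost implication: every task here is paid for three times, and a task with no majority, like `img_002`, still needs someone to review it.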

When you are labeling data for machine learning, winning the race to quality data requires a strategic combination of people, process, and tools.

What affects training data quality?

There are three main factors that can help predict the quality of training data you can expect from those working with your machine learning data, whether your team is in-house, crowdsourced, or outsourced.

- People: The selection, development, and management of workers

- Process: How workers do the work - from onboarding to task instructions to quality control workflows

- Tools: The technology to access the work, manage workers, and maximize quality and throughput

1) People

Quality starts with the people involved in the process. The experience and training provided to workers directly impact the quality of training data they produce. Worker assessment and selection offer the first opportunity to improve data collection and ensure high-quality datasets. By conducting skill assessments, you can ensure workers meet the minimum acceptable standards, which helps keep labeling errors out of your training data and, in turn, supports model performance.
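A skill assessment can be as simple as scoring each worker against a small set of tasks with known answers. The sketch below assumes a hypothetical gold-standard set and a hypothetical passing threshold of 75%; your own standard will depend on the task.

```python
def score_worker(worker_labels, gold_labels):
    """Fraction of gold-standard tasks the worker labeled correctly."""
    scored = [task for task in gold_labels if task in worker_labels]
    if not scored:
        return 0.0
    correct = sum(worker_labels[task] == gold_labels[task] for task in scored)
    return correct / len(scored)


# Hypothetical gold-standard answers and a hypothetical passing threshold.
GOLD = {"t1": "yes", "t2": "no", "t3": "yes", "t4": "no"}
MIN_ACCURACY = 0.75

submissions = {
    "worker_a": {"t1": "yes", "t2": "no", "t3": "yes", "t4": "yes"},
    "worker_b": {"t1": "no", "t2": "no", "t3": "no", "t4": "yes"},
}
scores = {w: score_worker(labels, GOLD) for w, labels in submissions.items()}
qualified = [w for w, s in scores.items() if s >= MIN_ACCURACY]
```

In this sketch, only `worker_a` meets the threshold. The same scoring can be repeated over time to check whether training is improving a worker's results.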

Regular training is important. Depending on the difficulty and complexity of a task, customized training may be required to ensure the continued skill development of the data worker, which results in higher quality work. For very simple yes-or-no tasks, minimal training may be enough to deliver sufficient quality levels. However, for tasks with a range of complexity, nuance, or subjectivity, higher-level training programs may be required to train workers quickly while ensuring quality results.

This is where crowdsourcing can fall short, as workers can change from day to day - so you aren’t able to capture the value of the domain knowledge they gain from working with your data.

2) Process

The best process for labeling quality training data is built for scale, with tight quality controls and clear parameters for task precision. Communication and collaboration are important because with machine learning, you will want the ability to quickly iterate the work to meet evolving business goals.

If you’ve already been doing the work in-house, you want to make sure that a potential data labeling partner is willing and able to take your pre-existing processes, review them for best practices, and if necessary, modify them to work for their workforce. If you’re just getting started or want to update your process, it’s important to find a partner that can help you design a process from scratch. Ideally, you’d find a partner that can do both.

You will need to allow room for iteration along the way; in fact, it is a vital best practice. To iterate quickly requires direct communication with the people who work with your data and quick, clear feedback from them so you can make rapid improvements in your process.

3) Tools

As with any project or task, you simply can’t do a good job without the proper tools. If you’re stuck with outdated or incompatible technologies, productivity decreases and quality suffers. Poor-fit tooling can dramatically slow down and even stop the most innovative projects - or make the process more costly. Implementing effective tools can improve outcomes, increase speed, and reduce project costs.

In an example of a simple tool enhancement to improve efficiency, one CloudFactory client increased throughput 3x by embedding a Google Maps view into its tooling screen, rather than requiring data labelers to open Google Maps in a separate browser tab.

The goal with tooling is flexibility. If you are using a partner to gather and prepare your training data, avoid tying your workforce to your tool. Be sure to consider how important it will be to make changes in tooling features, whether you want to own the tool as intellectual property, or whether the tool will be able to keep up as your tasks and use cases progress.

Scaling Quality Training Data

If machine learning models are only as good as their training data, and great training data requires the right humans in the loop, we find ourselves at an ironic conclusion: Machine learning success depends on your human workforce.

How do you develop a large enough team that has expertise in your use case and is agile enough to evolve your process along the way? How can you keep costs down while maintaining high quality? You might need a training data labeling service.

Why is a managed team better than crowdsourcing?

Many organizations crowdsource the development of their training data, entrusting this crucial work to hundreds or thousands of anonymous workers. This approach can be problematic because you will shoulder the management burden, and you may not be able to gather feedback from the workers or give feedback to them, which can negatively impact data quality and the effectiveness of your machine learning models.

At CloudFactory, we outsmarted outsourcing and crowdsourcing by introducing a managed-team approach to data processing, preparation, and enrichment. A managed team provides the agility you need to make changes, the flexibility to scale your team up or down, and technology that puts you in direct communication with your data workers via a leader who works alongside the team. In essence, a CloudFactory team is an extension of your own.

Research shows the managed team approach results in higher quality. A study by data science tech developer Hivemind showed managed teams were more effective in data labeling than crowdsourced workers, and they also worked faster. In the study, the managed team provided higher quality work and was only slightly more expensive than the crowdsourced workers.

The managed team approach is an effective way to scale your training data operations. Your training data is too essential to throw it to an anonymous crowd. When you need humans in the loop, it’s important to know they can produce high-quality work.

Questions for a Data Labeling Partner About Quality

Here are questions you can ask data labeling providers to assess their ability to deliver the quality training data you want:

- Do you offer dedicated success managers and project managers? How will our team communicate with your data labeling team?

- How do you screen and select your workforce? Will we work with the same data labelers over time? If workers change, who trains new team members? Describe how you transfer context and domain expertise as team members transition on and off the data labeling team.

- Is your data labeling process flexible? How will you manage changes or iterations from our team that affect data features for labeling?

- How do you manage quality assurance? How do you share quality metrics with our team? What happens when quality measures aren’t met? How involved in quality control (QC) will my team need to be?

Quality Data = Better Outcomes:

The CloudFactory Advantage

We understand the essential role that people play in the iterative development of machine learning models. We work hard - so you don’t have to. Our people, processes, tools, and team model work together to deliver the high-quality work you would do yourself - if you had the time.

We screen our workers for character and skills, and we make investments in their professional and personal development. Our teams are actively supported and managed, allowing for accountability, oversight, and maximum efficiency - all in the service of your business rules and goals.

We are tool-agnostic, so we can share our experience with available tools, offer you access to our best-of-breed industry partners, or use tools you develop and maintain yourself. We can improve the quantity and quality of your training data, no matter how you are getting the work done today.

Our expertise has helped companies across diverse industries conquer their training data challenges.

Are you ready to get the high-quality training data you want for your machine learning model? Find out what we can do for you.

Contributors

Jared P. Lander (Chief Data Scientist) and Michael Beigelmacher (Data Engineer) of Lander Analytics reviewed and provided comments on this guide in February 2020. Lander Analytics is a full-service consulting firm that helps organizations leverage data science to solve real-world challenges.

Contact Sales

Whether you have questions about training data or want to learn how CloudFactory can help lighten your team’s load, we’re happy to help.

Frequently Asked Questions

What is training data and test data?

Training data is the data you use to train an algorithm or machine learning model to predict a target outcome. If you are using supervised learning or some hybrid that includes that approach, your data will be enriched with data labeling or annotation. Test data is used to measure the performance, such as accuracy or efficiency, of the algorithm you are using to train the machine. Test data will help you see how well your model can predict new answers, based on its training. Both training and test data are important for improving and validating machine learning models.

How do you label training data?

Data labeling requires a strategic combination of people, process, and technology. It requires a human-in-the-loop approach, where people use advanced software tools to label features in the data, which creates a dataset that can be used to train a machine learning model. There are different approaches for labeling training data. You can hire in-house staff, use a crowdsourced workforce, or hire a managed data labeling team. In general, managed teams deliver high quality, while crowdsourcing can provide fast access to anonymous workers.

What is training data in machine learning?

Training data is the data you use to train a machine learning algorithm or model to accurately predict a particular outcome, or answer. In supervised learning, training data requires a human in the loop to choose and label the features in the data that will be used to train the machine. Unsupervised learning uses unlabeled data to find patterns, such as inferences or clustering of data points. Semi-supervised learning includes a combination of supervised and unsupervised learning.

What is AI training data?

AI training data is used to train, test, and validate models that use machine learning and deep learning. In supervised learning, training data is enriched (labeled, tagged, or annotated) to call out features in the data that are used to teach the machine how to recognize the outcomes, or answers, your model is designed to detect. Unsupervised learning uses unlabeled data.

How much training data do you need?

There’s no easy answer, but more training data is usually better. The quality, quantity, and diversity of your training data will determine the accuracy and performance of your machine learning model. The more data you have, and the more diverse it is to reflect real-world conditions, the better your machine learning model will perform. The accuracy of the labels in your training data is also important and can affect your model’s performance.

What are the best AI training data companies?

The best AI training data companies can provide or enrich the high-quality data you need to train, test, and validate your machine learning models. A training data labeling service should be able to:

- Provide high-quality data enrichment,

- Adapt to changing use cases and complex tasks,

- Be in direct communication with your data operations team to ease iterative improvements in data enrichment,

- Safeguard your data with appropriate security measures, and

- Offer a pricing model that gives you the flexibility to change your tasks in scale and complexity as you evolve your process.

Where can I find AI training data sets?

Many enterprise companies, government agencies, and academic institutions provide open datasets, including Google, Kaggle, and Data.gov. They use their resources to collect and maintain these datasets, and some of them are labeled for use as AI training data with supervised or semi-supervised learning. Of course, you can use your own data and label it for AI use. You also can hire data labeling providers, crowdsourced teams, or in-house employees to enrich your data.

How can I create training data for machine learning?

If you are using supervised or semi-supervised learning, you can use your own data and label it yourself or hire a data labeling provider to label it for you. You also can purchase training data that is accurately labeled for the data features you have decided are relevant to the machine learning model you are developing. Some enterprise companies, government agencies, and academic institutions provide open datasets that can be used for machine learning. However, not all of those datasets are enriched for machine learning use.

How can I prepare training data for a neural network?

A neural network is a set of algorithms designed to recognize patterns in data. Depending on the learning approach, it can be trained on labeled or unlabeled data. Deep learning models are built using neural networks. You can prepare your training data using tools, such as Keras, which is a user-friendly neural network library written in Python. The datasets you use must be clean, or preprocessing must be complete, before your data will be ready for modeling. If your data has missing values, for example, you may want to preprocess your data to help your deep learning model yield accurate results.
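As one small example of preprocessing, missing values can be filled with the mean of the observed values before the data reaches a model. This is a minimal sketch in plain Python, not a Keras feature; the `impute_mean` helper and the sample records are hypothetical, and mean imputation is only one of several possible strategies.

```python
def impute_mean(rows, column):
    """Return copies of the rows with None in `column` replaced by the mean
    of the observed values. The input rows are left unchanged."""
    observed = [row[column] for row in rows if row[column] is not None]
    if not observed:
        raise ValueError(f"no observed values in column {column!r}")
    mean = sum(observed) / len(observed)
    return [
        {**row, column: mean if row[column] is None else row[column]}
        for row in rows
    ]


# Hypothetical records with one missing value.
rows = [
    {"amount": 10.0, "label": 0},
    {"amount": None, "label": 1},
    {"amount": 30.0, "label": 0},
]
clean_rows = impute_mean(rows, "amount")
```

In practice, you would typically compute the mean from the training set only and reuse it for validation and test data, so that information from the test set does not leak into training.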

How do you train data?

In machine learning, you don’t train the data. Rather, you use training data to train, test, and validate your machine learning models. In supervised and semi-supervised learning, training data is enriched. That is, people use advanced software tools to label or annotate the data to call out features that will help teach the machine how to predict the outcome, or the answer, you want your model to predict. With unsupervised learning, training data is not labeled.