The images you use to train, validate, and test your computer vision models will have a significant effect on the success of your artificial intelligence project. Each image in your training data must be thoughtfully and accurately labeled to train an AI system to recognize objects, similar to how a human can. The higher the quality of your annotations, the better your object detection and machine learning models are likely to perform.

While the volume and variety of your image data are likely growing every day, getting images annotated according to your specifications can be a challenge that slows your project and, as a result, your speed to market. The choices you make about your annotation tasks, techniques, tools, and workforce are worth thoughtful consideration. Annotation projects should be planned carefully to ensure efficiency and scalability, especially if you're considering automation to speed up the process.

We’ve created this guide to be a handy reference about image annotation. Feel free to bookmark and revisit this page if you find it helpful.

In this guide, we’ll cover image annotation for computer vision using supervised learning.

First, we’ll explain image annotation in greater detail, introducing you to key points, terms, and concepts. Next, we’ll explore how image annotation is used for machine learning and some of the techniques available for annotating visual data, including images and videos. We'll also touch on how annotation tasks are essential for building quality training data to improve learning algorithms.

Finally, we’ll share why decisions about your workforce are an important success factor for any computer vision model project. We’ll give you considerations for selecting the right workforce, and you’ll get a short list of critical questions to ask a potential image annotation service provider to ensure they can handle annotation projects and scale for real-time needs.

Introduction:

Will this guide be helpful to me?

This guide will be helpful to you if:

- You have visual data (i.e., images, videos) from imaging technology that you want to prepare for use in training data to improve machine learning or deep learning models.

- You have annotated visual data, but it does not meet your project’s quality requirements for tasks such as object detection or image segmentation.

- You want to learn how you can use visual data to train high-performance computer vision models and enhance learning algorithms.

- You are looking to explore strategies for annotation projects, ensuring the annotated data meets your needs, and considering automation to streamline the process for real-time application.

The Basics:

Image Annotation for Machine Learning

What is image annotation?

In machine learning and deep learning, image annotation is the process of labeling or classifying an image using text, annotation tools, or both, to show the data features you want your model to recognize on its own. When you annotate an image, you are adding metadata to a dataset.

Image annotation is a type of data labeling that is sometimes called tagging, transcribing, or processing. You also can annotate videos continuously, as a stream, or frame by frame.

Image annotation marks the features you want your machine learning system to recognize, and you can use the images to train your model using supervised learning. Once your model is deployed, you want it to be able to identify those features in images that have not been annotated and, as a result, make a decision or take some action.

Image annotation is most commonly used to recognize objects and boundaries and to segment images for object detection, semantic meaning, or whole-image understanding. For each of these uses, it takes a significant amount of data to train, validate, and test computer vision models to achieve the desired outcome.

Simple vs. Complex Image Annotation:

- Simple image annotation may involve labeling an image with a phrase that describes the objects pictured in it. For example, you might annotate an image of a cat with the label “domestic house cat.” This is also called image classification, or tagging, and is a fundamental part of annotation tasks in artificial intelligence projects.

- Complex image annotation can be used to identify, count, or track multiple objects or areas in an image. For example, you might annotate the difference between breeds of cat: perhaps you are training a model to recognize the difference between a Maine Coon cat and a Siamese cat. Both are unique and can be labeled as such. The complexity of your annotation will vary, based on the complexity of your annotation projects. More advanced tasks like this are crucial in creating high-quality annotated data for learning algorithms.
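To make the distinction concrete, here is a minimal sketch of how the two kinds of annotation might be stored as metadata. The field names (`image`, `label`, `objects`, `box`), the file names, and the coordinates are illustrative assumptions, not a standard format or the schema of any particular tool.

```python
# Hypothetical annotation records; field names and values are illustrative only.

# Simple annotation: one label describes the whole image (classification/tagging).
simple_annotation = {
    "image": "cat_001.jpg",  # hypothetical file name
    "label": "domestic house cat",
}

# Complex annotation: several objects, each with its own label and region.
complex_annotation = {
    "image": "cats_002.jpg",  # hypothetical file name
    "objects": [
        # box is assumed to be (x_min, y_min, x_max, y_max) in pixels
        {"label": "Maine Coon", "box": (34, 50, 210, 300)},
        {"label": "Siamese", "box": (250, 80, 400, 310)},
    ],
}


def count_by_label(annotation):
    """Count how many annotated objects carry each label."""
    counts = {}
    for obj in annotation.get("objects", []):
        counts[obj["label"]] = counts.get(obj["label"], 0) + 1
    return counts


print(count_by_label(complex_annotation))
```

A whole-image label carries no per-object regions, so `count_by_label` returns an empty result for the simple record.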

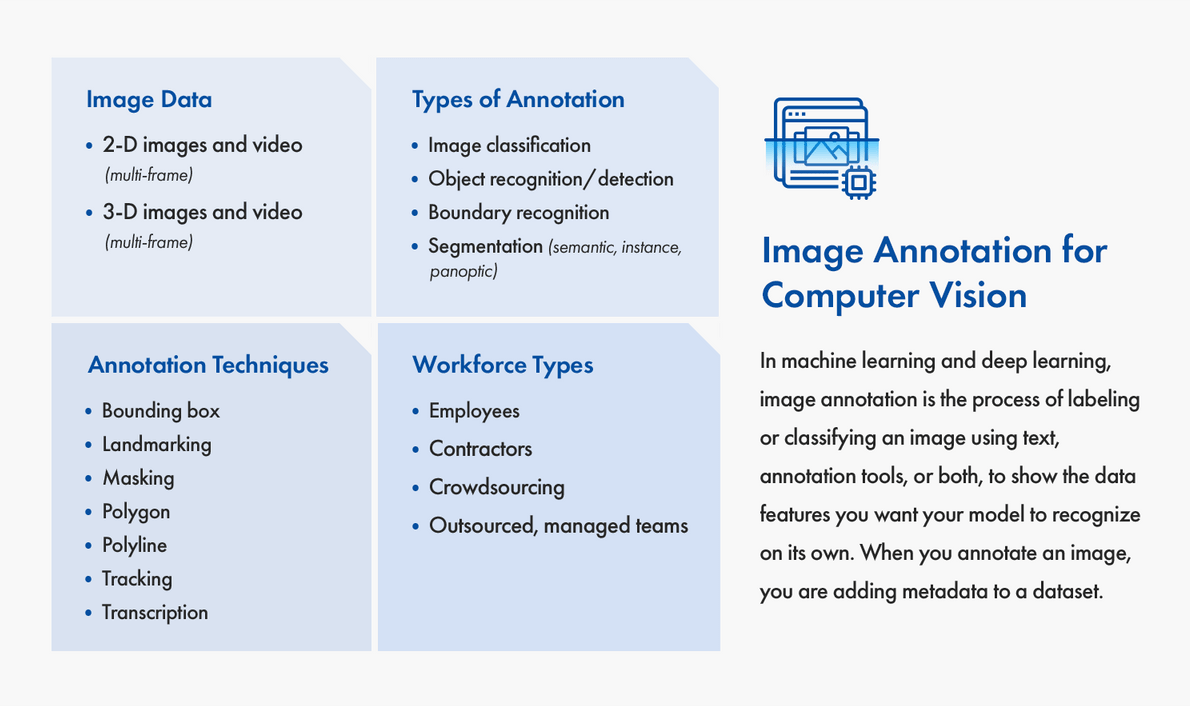

This image is an overview of the data types, annotation types, annotation techniques, and workforce types used in image annotation for computer vision.

What kind of images can be annotated for machine learning?

Image and video annotation are used to prepare visual data for machine learning. Videos can be annotated in a continuous stream or on a frame-by-frame basis.

These are the most common types of data used with image and video annotation:

- 2-D images and video (multi-frame), including data from cameras or other imaging technology, such as an SLR (single-lens reflex) camera or an optical microscope

- 3-D images and video (multi-frame), including data from cameras or other imaging technology, such as electron, ion, or scanning probe microscopes

How are images annotated?

You can annotate images using commercially-available, open source, or freeware data annotation tools. If you are working with a lot of data, you also will need a trained workforce to annotate the images. Tools provide feature sets with various combinations of capabilities, which your workforce can use to annotate images, multi-frame images, or video. Video can be annotated as a stream or frame by frame.

Are there image annotation services?

Yes; there are image annotation services. If you are doing image annotation in-house or using contractors, there are services that can provide crowdsourced or professionally-managed team solutions to assist with scaling your annotation process. We’ll address this area in more detail later in this guide.

Types of Image Annotation

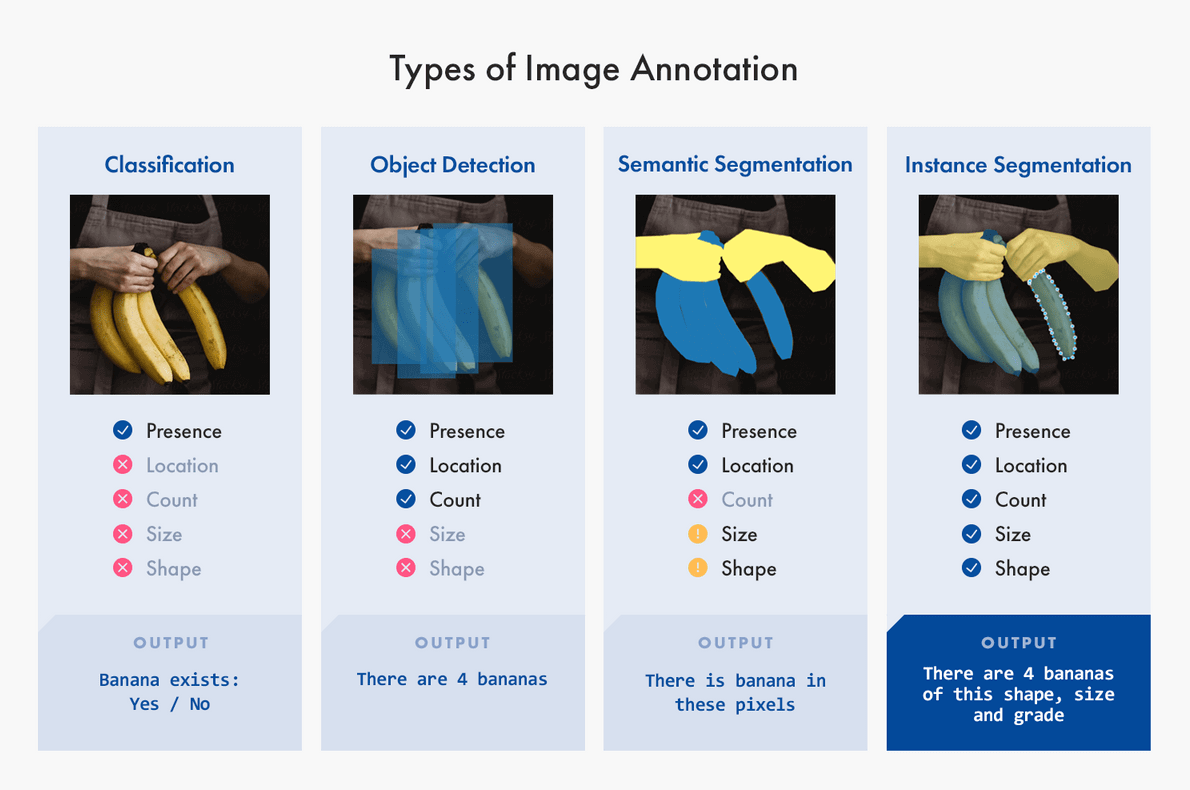

There are four primary types of image annotation you can use to train your computer vision AI model.

Each type of image annotation is distinct in how it reveals particular features or areas within the image. You can determine which type to use based on the data you want your algorithms to consider.

1. Image Classification

Image classification is a form of image annotation that seeks to identify the presence of similar objects depicted in images across an entire dataset. It is used to train a machine to recognize an object in an unlabeled image that looks like an object in other labeled images that you used to train the machine. Preparing images for image classification is sometimes referred to as tagging.

Classification applies across an entire image at a high level. For example, an annotator could tag interior images of a home with labels such as “kitchen” or “living room.” Or, an annotator could tag images of the outdoors with labels such as “day” or “night.”

2. Object Recognition/Detection

Object recognition is a form of image annotation that seeks to identify the presence, location, and number of one or more objects in an image and label them accurately. It also can be used to identify a single object. By repeating this process with different images, you can train a machine learning model to identify the objects in unlabeled images on its own.

You can label different objects within a single image with object recognition-compatible techniques, such as bounding boxes or polygons. For instance, you may have images of street scenes, and you want to label trucks, cars, bikes, and pedestrians. You could annotate each of these separately in the same image.

A more complex example of object recognition is medical imagery, such as CT (Computed Tomography) or MRI (Magnetic Resonance Imaging) scans. This kind of data is multi-frame, so you can annotate it continuously, as a stream, or by frame to train a machine to identify features in the data, such as indicators of breast cancer. You also can track how those features change over a period of time.

3. Segmentation

A more advanced application of image annotation is segmentation. This method can be used in many ways to analyze the visual content in images to determine how objects within an image are the same or different. It also can be used to identify differences over time.

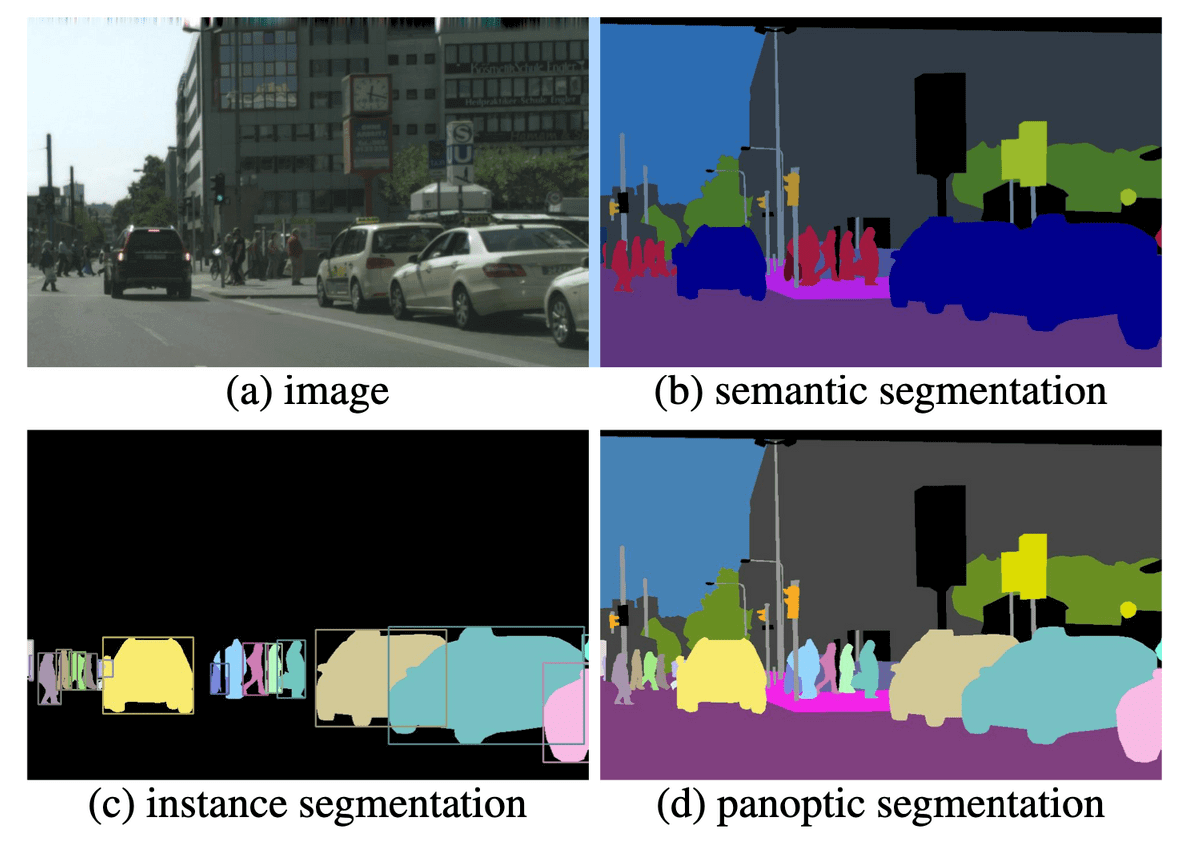

There are three types of segmentation:

a) Semantic segmentation delineates boundaries between similar objects and labels them under the same identification. This method is used when you want to understand the presence, location, and sometimes, the size and shape of objects.

You would use semantic segmentation when you want objects to be grouped, and it is typically reserved for objects you don’t need to count or track across multiple images, because the annotation groups objects of the same class under one label and may not reveal the size or shape of each one. For example, if you were annotating images that included both the stadium crowd and the playing field at a baseball game, you could annotate the crowd to segment the seating from the field.

b) Instance segmentation identifies the presence, location, count, size, and shape of objects in an image. This type of image annotation is also referred to as object class. Using the same example of images of a baseball game, you could label each individual in the stadium and use instance segmentation to determine how many people were in the crowd.

You can perform either semantic or instance segmentation as pixel-wise segmentation, which means every pixel inside the outline is labeled. You can also perform them with boundary segmentation, where only the border coordinates are counted.
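The difference between boundary and pixel-wise labels can be sketched in a few lines. The example below assumes a boundary stored as a list of (x, y) vertices and converts it to a per-pixel grid with a standard ray-casting test. The function names, the tiny 6x5 image, and the rule that a pixel counts as inside when its center is inside are assumptions for illustration; annotation tools may rasterize outlines differently.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: is the point (x, y) inside the polygon?"""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray cast to the right of (x, y) cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon, width, height):
    """Label each pixel 1 if its center lies inside the outline, else 0."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Boundary annotation: only the outline's vertex coordinates are stored.
boundary = [(1, 1), (5, 1), (5, 4), (1, 4)]

# Pixel-wise annotation: the same region as a per-pixel label grid.
mask = polygon_to_mask(boundary, width=6, height=5)
for row in mask:
    print(row)
```

The boundary form stores four coordinate pairs, while the pixel-wise form stores a label for all 30 pixels, 12 of which fall inside the outline.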

c) Panoptic segmentation blends semantic and instance segmentation to provide data that is labeled for both background (semantic) and object (instance). For example, panoptic segmentation can be used with satellite imagery to detect changes in protected conservation areas. This kind of image annotation can assist scientists who are tracking changes in tree growth and health to determine how events, such as construction or a forest fire, have affected the area.

In this series of photos, (a) is the original image, and the others show three kinds of segmentation that can be applied in image annotation. In this example, the objects of interest are the cars and the people. Photo credit: Panoptic Segmentation, CVPR 2019
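As a rough illustration of how the three segmentation types differ as data, the sketch below labels the same tiny 3x6 "image" three ways, reusing the baseball example of people and a field. The grid layout, the class names, and the convention that instance id 0 means "no object" are assumptions made for this example.

```python
# Semantic: every pixel gets a class; individual people are not told apart.
semantic = [
    ["field", "field", "person", "person", "field", "person"],
    ["field", "field", "person", "person", "field", "person"],
    ["field", "field", "field", "field", "field", "field"],
]

# Instance: each countable object gets its own id; 0 means "no object" here.
instance = [
    [0, 0, 1, 1, 0, 2],
    [0, 0, 1, 1, 0, 2],
    [0, 0, 0, 0, 0, 0],
]

# Panoptic: pair the class with the instance id for every pixel.
panoptic = [
    [(cls, obj_id) for cls, obj_id in zip(sem_row, inst_row)]
    for sem_row, inst_row in zip(semantic, instance)
]

# Semantic labels alone give the area covered by a class ...
person_pixels = sum(row.count("person") for row in semantic)

# ... while instance ids make it possible to count individual people.
people = {obj_id for row in instance for obj_id in row if obj_id != 0}

print(person_pixels, len(people), panoptic[0][2])
```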

4. Boundary Recognition

Image annotation can be used to train a machine to recognize lines or boundaries of objects in an image. Boundaries can include the edges of an individual object, areas of topography shown in an image, or man-made boundaries that are present in the image. Annotated appropriately, images can be used to train a machine to recognize similar patterns in unlabeled images.

Boundary recognition can be used to train a machine to identify lines and splines, including traffic lanes, land boundaries, or sidewalks. Boundary recognition is particularly important for safe operation of autonomous vehicles. For example, the machine learning models that guide drones must learn to follow a particular course and avoid potential obstacles, such as power lines.

It also can be used to train a machine to identify foreground from background in an image, or exclusion zones. For example, if you have images of a grocery store and you want to focus on the stocked shelves, rather than the shopping lanes, you can exclude the lanes from the data you want algorithms to consider. Boundary recognition is also used in medical images, where annotators can label the boundaries of cells within an image to detect abnormalities.

How do you do image annotation?

To apply annotations to your image data, you will use a data annotation tool. The availability of data annotation tools for image annotation use cases is growing fast. Some tools are commercially available, while others are available via open source or freeware. In most cases, you will have to customize and maintain an open source tool yourself; however, there are tool providers that host open source tools.

If your project and resources allow it, you may wish to build your own image annotation tool. This is generally the choice when existing tools don’t meet your requirements or when you want to build into your tool features that you value as intellectual property (IP). If you choose this route, be sure that you have the people and resources to maintain, update, and make improvements to the tool over time.

There are many excellent tools available today for image annotation. Some tools are narrowly optimized to focus on specific types of labeling, while others offer a broad mix of capabilities to enable many different kinds of use cases. Making the choice between a specialized tool or one with a wider set of features or functionality will depend on your current and anticipated image annotation needs. Keep in mind that there is no tool that can do it all, so you’ll want to choose a tool that you can grow into as your requirements change.

Image Annotation Techniques

Image annotation involves one or more of these techniques, which are supported by your data annotation tool, depending on its feature sets.

Bounding Box Annotation Technique

Bounding boxes are used to draw a box around the target object, especially when objects are relatively symmetrical, such as vehicles, pedestrians, and road signs. They also are used when the shape of the object is of less interest or when occlusion is less of an issue. Bounding boxes can be two-dimensional (2-D) or three-dimensional (3-D). A 3-D bounding box is also called a cuboid.

This is an example of image annotation using a bounding box. The dog is the object of interest.

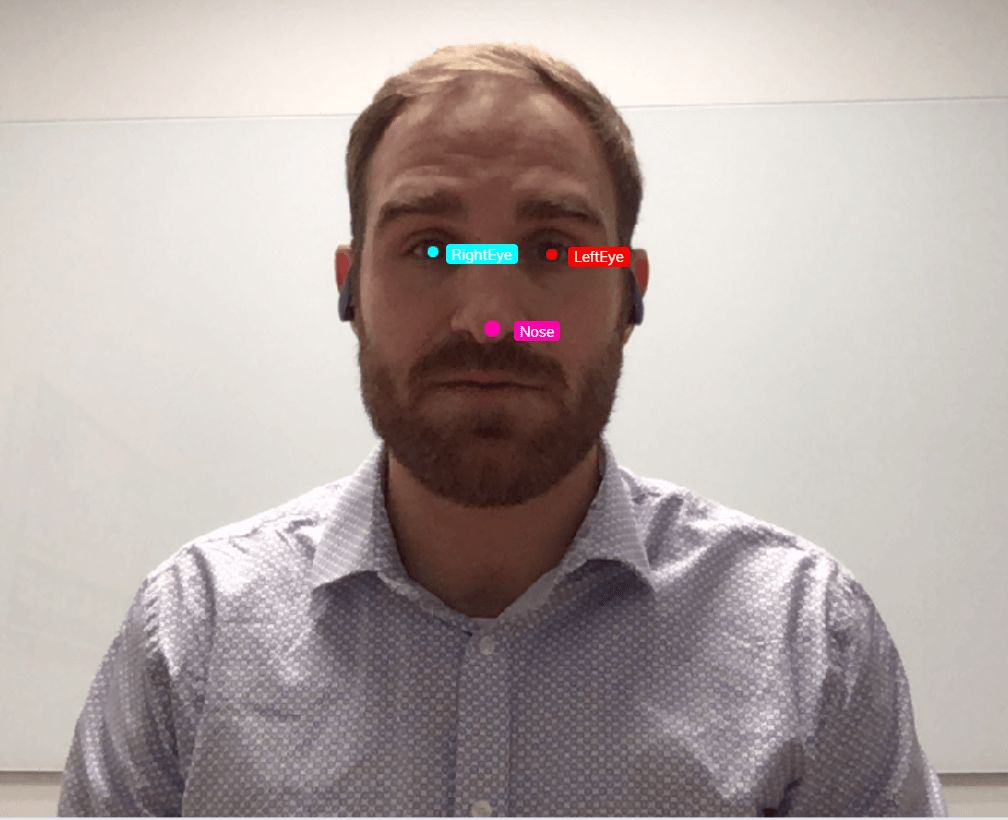
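A 2-D bounding box is often stored as two corner coordinates plus a label. The sketch below assumes pixel coordinates with the origin at the top-left of the image; the `BoundingBox` class, the `box_around` helper, and the sample points are hypothetical and not taken from any specific tool.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned 2-D box in pixel coordinates (origin assumed at top-left)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

    @property
    def width(self):
        return self.x_max - self.x_min

    @property
    def height(self):
        return self.y_max - self.y_min


def box_around(points, label):
    """Smallest axis-aligned box that contains all the given (x, y) points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return BoundingBox(min(xs), min(ys), max(xs), max(ys), label)


# Made-up points on the outline of the object of interest.
outline = [(120, 80), (310, 95), (330, 260), (140, 270)]
box = box_around(outline, "dog")
print(box, box.width, box.height)
```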

Landmarking Annotation Technique

This is used to plot characteristics in the data, such as with facial recognition to detect facial features, expressions, and emotions. It is also used to annotate body position and alignment, using pose-point annotations. In annotating images for sports analytics, for example, you can determine where a baseball pitcher’s hand, wrist, and elbow are in relation to one another while the pitcher throws the baseball.

This is an example of image annotation using landmarking. The eyes and nose are the features of interest.
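Once landmarks are plotted, relationships between them can be computed directly from the coordinates. As one possible illustration of the pitcher example, the sketch below derives the angle at the wrist from three pose points. The landmark names and coordinates are made up, and measuring an angle is only one way such points might be used.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


# Hypothetical pose points (x, y) in pixels for a single video frame.
landmarks = {"hand": (420, 180), "wrist": (400, 200), "elbow": (400, 300)}

angle = joint_angle(landmarks["hand"], landmarks["wrist"], landmarks["elbow"])
print(round(angle, 1))
```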

Masking Annotation Technique

This is pixel-level annotation that is used to hide areas in an image and to reveal other areas of interest. Image masking can make it easier to home in on certain areas of the image.

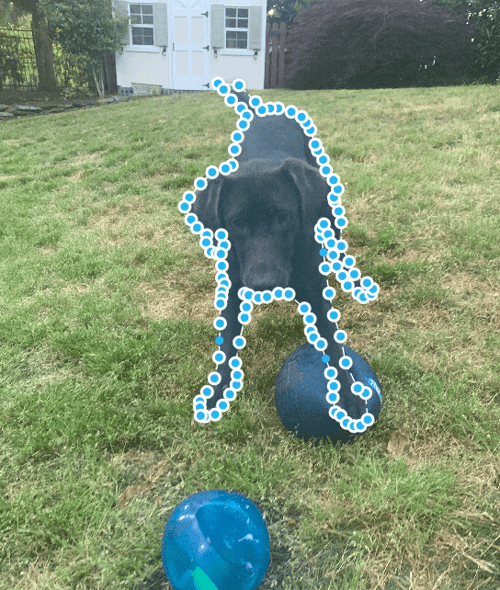
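In code, a mask is commonly a grid of the same size as the image that marks which pixels to keep. The sketch below assumes a small grayscale image stored as nested lists and a binary mask where 1 means "area of interest"; the `apply_mask` name and the `fill` value are illustrative choices.

```python
def apply_mask(image, mask, fill=0):
    """Keep pixels where the mask is 1; replace all others with `fill`."""
    return [
        [pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


# Made-up 3x4 grayscale image (one intensity value per pixel).
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
]

# Binary mask: 1 reveals a pixel, 0 hides it.
mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

masked = apply_mask(image, mask)
for row in masked:
    print(row)
```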

Polygon Annotation Technique

This is used to mark each vertex (corner point) of the target object’s outline and annotate its edges. Polygons are used when objects are more irregular in shape, such as houses, areas of land, or vegetation.

This is an example of image annotation using a polygon. The dog is the object of interest.
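A polygon annotation is essentially an ordered list of vertices, and properties such as the enclosed area follow from those coordinates. The sketch below uses the shoelace formula on a made-up house-shaped outline; it assumes a simple polygon whose edges do not cross.

```python
def polygon_area(vertices):
    """Area enclosed by a simple polygon, computed with the shoelace formula."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the shape
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# Made-up outline: a 4x3 rectangle with a triangular roof on top.
house = [(0, 0), (4, 0), (4, 3), (2, 5), (0, 3)]
print(polygon_area(house))
```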

Polyline Annotation Technique

This plots continuous lines made of one or more line segments. Polylines are used when working with open shapes, such as road lane markers, sidewalks, or power lines.

This is an example of image annotation using a polyline. The street’s lane line is the object of interest.
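Unlike a polygon, a polyline is not closed, so the last point does not connect back to the first. A minimal sketch, assuming the line is stored as an ordered list of (x, y) points with made-up coordinates:

```python
import math


def polyline_length(points):
    """Total length of an open polyline: the sum of its segment lengths."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Made-up lane line with two segments; the shape stays open.
lane_line = [(0, 0), (3, 4), (3, 10)]
print(polyline_length(lane_line))
```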

Tracking Annotation Technique

This is used to label and plot an object’s movement across multiple frames of video. Some image annotation tools include interpolation, which allows an annotator to label one frame, skip to a later frame, and move the annotation to the object’s new position. Interpolation then fills in the object’s movement across the interim frames that were not annotated.

This is an example of image annotation using tracking. The car is the object of interest, spanning multiple frames of video.

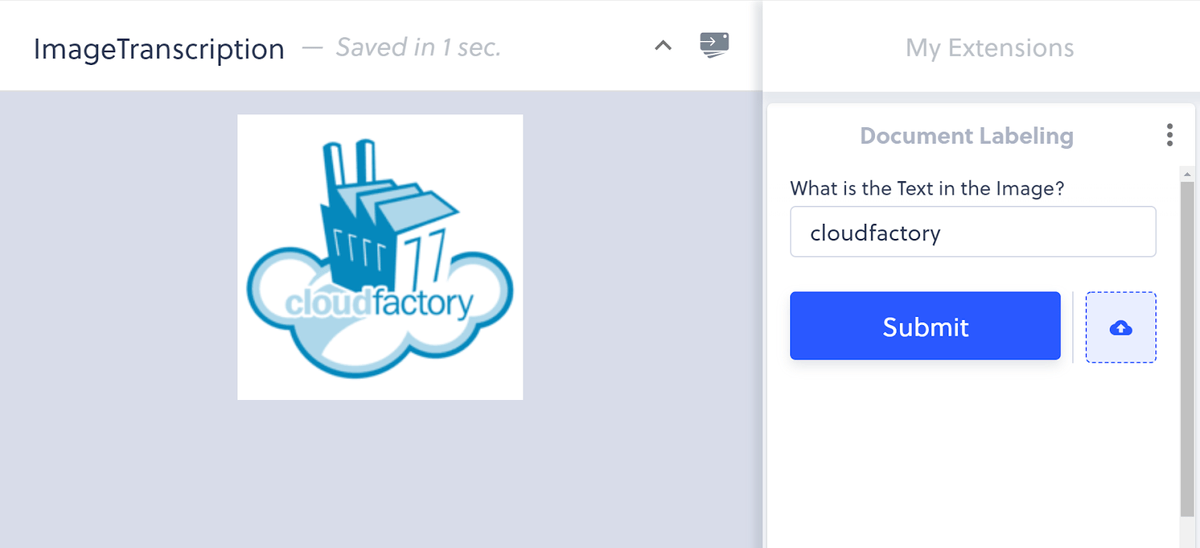
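Interpolation between two annotated frames can be sketched as follows. This example assumes straight-line (linear) interpolation of a box stored as (x_min, y_min, x_max, y_max); that is a simplifying assumption, and a given tool may interpolate differently. The function name and frame numbers are hypothetical.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate a box between two annotated keyframes.

    Boxes are (x_min, y_min, x_max, y_max). Returns a dict that maps every
    frame number from start_frame to end_frame, inclusive, to a box.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the start, 1.0 at the end
        boxes[frame] = tuple(a + t * (b - a) for a, b in zip(start_box, end_box))
    return boxes


# The annotator labels frame 0 and frame 4; frames 1 to 3 are filled in.
track = interpolate_boxes(0, (10, 20, 50, 60), 4, (50, 20, 90, 60))
for frame, box in track.items():
    print(frame, box)
```

Linear interpolation works best when the object moves at a roughly constant speed between keyframes; otherwise an annotator would typically add more keyframes.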

Transcription Annotation Technique

This is used to annotate text in images or video when there is multimodal information (i.e., images and text) in the data.

This is a screenshot of an annotator’s view while labeling an image using transcription. The text in the image is the object of interest.
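A transcription annotation typically pairs a region of the image with the text it contains. The sketch below assumes each record holds a box (x_min, y_min, x_max, y_max) and a text string, and shows one simple way to order regions for reading; the field names, sample text, and ordering rule are illustrative assumptions.

```python
# Hypothetical transcription records: where the text is, and what it says.
transcriptions = [
    {"box": (40, 120, 300, 160), "text": "MAIN ST"},
    {"box": (40, 30, 300, 110), "text": "STOP"},
]


def reading_order(items):
    """Sort regions top-to-bottom, then left-to-right, by the box's top-left corner."""
    return sorted(items, key=lambda item: (item["box"][1], item["box"][0]))


text = " ".join(item["text"] for item in reading_order(transcriptions))
print(text)
```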

Your Workforce Strategy for Image Annotation

How are companies doing image annotation?

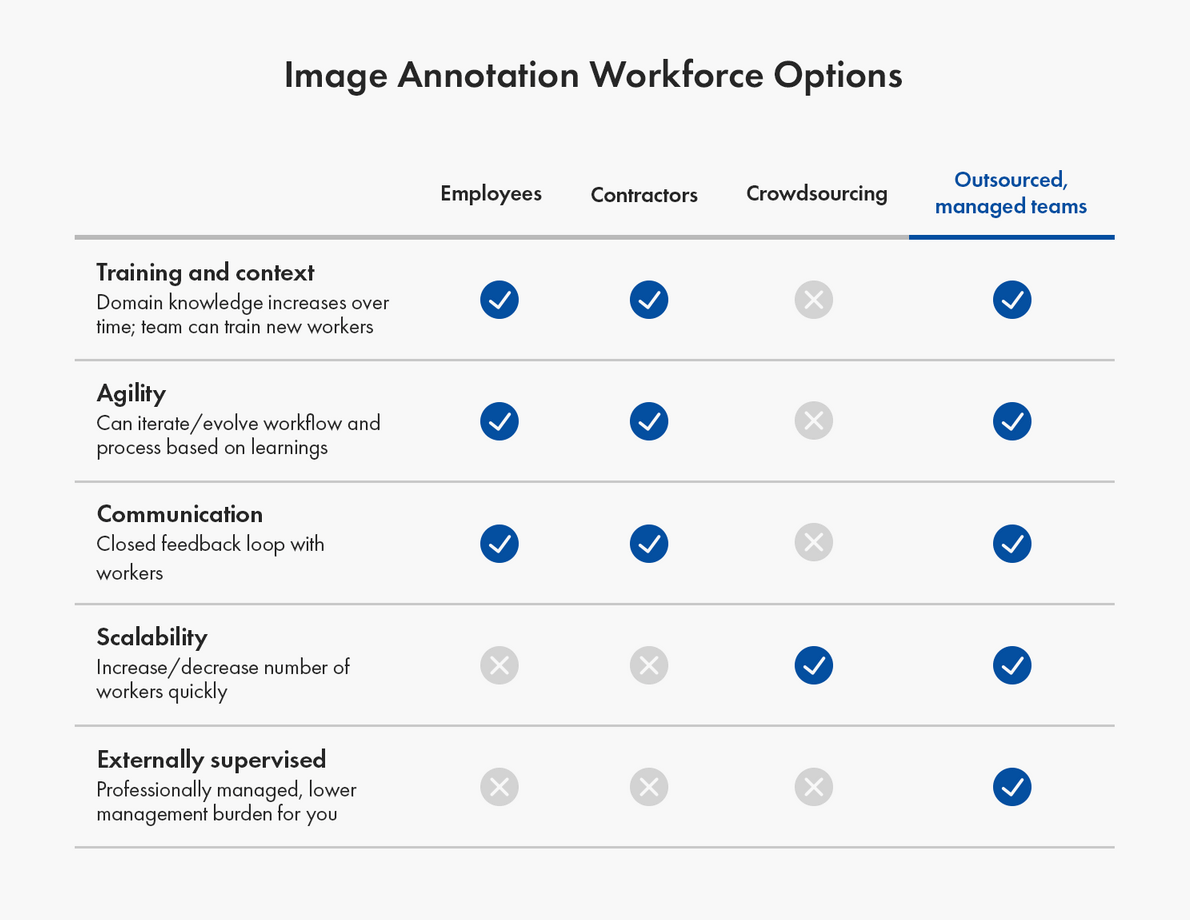

Organizations use a combination of software, processes, and people to gather, clean, and annotate images. In general, you have four options for your image annotation workforce. In each case, quality depends on how workers are managed and how quality is measured and tracked.

- Employees: These are individuals on your payroll, full-time or part-time. This option allows you to build in-house expertise and, typically, respond quickly to change. However, often those tasked with annotation were not hired to do annotation. It becomes an addition to their original job description, which means your employees are distracted from the reason you hired them in the first place. Additionally, scaling an internal team can be a challenge, as you bear the responsibility and expense of hiring, managing and training workers - as well as ensuring low churn.

- Contractors: These are temporary or freelance workers whom you train to do the work. Their domain knowledge of your use case can increase over time, and they have the agility to incorporate changes quickly. With contractors, you often have the flexibility to scale your team up or down as needed. However, as with employees, you will bear the burden of management and the responsibility for keeping worker churn low.

- Crowdsourcing: This is an anonymous, ad hoc source of labor. You use a third-party platform to access large numbers of freelance workers at once, and typically users of the platform volunteer to do the work you describe. Domain knowledge, or even annotation experience, is limited, and you are never sure who is working on your data. Quality tends to be lower with crowdsourced teams because the workers are not vetted the same way they are with in-house, contracted, or managed teams.

- Managed teams: These are an outsourcing option. Teams are made up of strategically selected, trained, and professionally managed individuals. You share your requirements and annotation process, and they help you to scale it. Their understanding of domain knowledge with your use case is likely to increase over time, and they are likely to have the agility to incorporate changes to your image annotation process.

The advantages of outsourced, managed teams

There are three characteristics of outsourced, professionally managed teams that make them an ideal choice for image annotation, particularly for machine learning use cases.

1. Training and context

In image annotation, basic domain knowledge and contextual understanding are essential for your workforce to annotate your data with high quality for machine learning. Managed teams of workers label data with higher quality because they can be taught the context, or setting and relevance, of your data and their knowledge will increase over time. It’s even better when more than one member of your annotation team has domain knowledge, so they can manage the team and train new members on rules and edge cases. A managed team has staying power and can retain domain knowledge, which you do not get with crowdsourcing.

2. Agility

Machine learning is an iterative process. Your workflow and rules may change as you test and validate your models and learn from their outcomes. A managed team of annotators provides the flexibility to incorporate changes in data volume, task complexity, and task duration. The more adaptive your workforce is, the more machine learning projects you can work through. The best managed teams for image annotation can provide your team with valuable insights about data features - that is, the properties, characteristics, or classifications - that will be analyzed for patterns that help predict the target, or answer what you want your model to predict.

3. Communication

Managed image annotation teams can use technology to create a closed feedback loop with you that will establish reliable communication and collaboration between your project team and annotators. Workers should be able to share what they’re learning as they work with your data, so you can use their insights to adjust your approach.

Outsourced, managed teams are an ideal choice for image annotation. Similar to employees and contractors, managed teams bring all the benefits of an in-house team without placing the burden of management on your organization. Similar to crowdsourcing, managed teams can scale your workforce up or down quickly, based on your needs.

The best image annotation teams

If you are building machine learning models, the primary reason you will need an image annotation workforce is to achieve quality image annotation at scale. Using image data to train machine learning models requires a lot of data - in fact, high-performance machine learning and deep learning models require massive amounts of data labeled with high quality. For most AI project teams, that requires a human-in-the-loop approach.

The best image annotation teams are professionally managed teams that can provide:

- Expertise in image annotation - This kind of expertise comes with experience doing the many types of annotations described above, across multiple use cases, clients, and industries. Teams with expertise have developed processes and workflow best practices. They also know which annotation tool is best for a particular task or use case. Expertise is important to scaling your process. Teams with expertise understand how to transform complex tasks into distributed workflows that support high-quality image annotation.

- Quality - Your machine learning models will only be as good as the data that trains them. The best image annotation services monitor quality and can support, augment, or lead your team’s quality-assurance efforts. Their domain knowledge and proficiency with your rules, process, and use cases improve over time, as they work with your images and learn how you want to resolve edge cases. All of these contribute to higher quality image annotation and a better performing AI model.

- Agility - One constant in AI projects is change. Tasks, workflows, and use cases change. The best services have experience with many kinds of image annotation. Their teams can work with yours to manage task iterations as everyone learns during the process, so you can make improvements that increase throughput and quality. They also can make changes quickly to your image annotation process to counteract bias or to optimize your model’s performance.

Questions to Ask Your Image Annotation Service Provider

If you need an image annotation workforce, you may be overwhelmed by the options available online. It can be challenging to evaluate image annotation services. Here are questions to keep in mind when you’re speaking with an image annotation service provider:

Expertise

- What kind of images can your workforce annotate? How long has your workforce been annotating images?

- What types of annotations does your workforce have experience with? Does your workforce have experience annotating data in my specific domain? (e.g., medical, agriculture)

- What tools can your workforce use? What if we have built our own, proprietary image annotation tool - can you use that?

- How quickly can you scale the work? What kind of experience does your team have with a project like this?

Quality

- What standard do you use to measure quality?

- What processes are in place to ensure high quality throughout the annotation process?

- How do you share quality metrics with our team? What happens when quality measures aren’t met?

- If workers change, who trains new team members? Describe how you transfer context and domain knowledge as individuals transition on or off our image annotation team.

Agility

- How will our team communicate with your data labeling team?

- How does your team handle changes to our annotations or workflow? How quickly can changes be incorporated into our process?

- Can you scale my image annotation work up or back, per our needs?

Contract Terms

- What is your pricing model (e.g., per annotation, task, hour)?

- Can we pay month to month or is it an annual contract?

- How do changes in the scale of the work, task definition, or project scope change pricing for our project? Can we revise task instructions without renegotiating our contract?

- Do I have to maintain a certain volume or throughput to retain the pricing in my contract? Do we need to renegotiate our contract or incur additional fees if throughput changes?

CloudFactory and Image Annotation

At CloudFactory, we have a decade of experience professionally managing image annotation teams for organizations around the world. To every project, we bring:

Expertise

We’ve worked on thousands of projects for hundreds of clients. We have a deep understanding of workforce training and management for image annotation. We can transform your successful process with as few as a handful or as many as thousands of remote workers. We bring a decade of experience to your project and know how to design workflows that are built for scale. We’re tool-agnostic, so we can work with any tool on the planet, even the ones you build yourself.

Quality

Our professionally managed, team approach ensures increased domain knowledge and proficiency with your rules, process, and use cases over time. We monitor quality and can add layers of quality assurance (QA) to manage exceptions. We source tools that include robust workforce management features, quality control, and quality assurance options to meet your needs.

Agility

We have experience with a wide variety of tasks and use cases, and we know how to manage workflow changes. We put you directly in contact with a team lead, who works alongside the team and communicates with you via a closed feedback loop. This allows us to ensure task iterations, problems, and new use cases are managed quickly.

Together, we make a positive change.

At CloudFactory, we are on a mission to provide work to one million people in the developing world. We offer workers training, leadership, and personal development opportunities, including participation in community service projects. These experiences grow workers’ confidence, work ethic, skills, and upward mobility. Our clients and their teams are an important part of our mission.

Are you ready to learn how you can scale your image annotation process with an experienced workforce and great-fit tools? Find out how we can help you.

Reviewers

Anthony Scalabrino, sales engineer at CloudFactory, a provider of professionally managed teams for image annotation for computer vision.

Tristan Rouillard and Alexander Wennman, who are co-founders at Hasty, an AI-powered image annotation tooling provider that offers tools for a wide variety of use cases and the flexibility to adapt the tool to support your workflow needs.

Frequently Asked Questions

What is image annotation?

In machine learning, image annotation is the process of labeling or classifying an image using text, annotation tools, or both to show the data features you want your ML model to recognize on its own. When you annotate an image, you are adding metadata to a dataset. Image annotation is a type of data labeling that is sometimes called tagging, transcribing, or processing.

By marking the features you want your machine learning system to recognize, you can use the images to train your model using supervised learning. Once your model is deployed, you want it to be able to identify those features in images that have not been annotated and, as a result, make a decision or take some action.

What is an image annotation tool?

An image annotation tool is a software solution that can be used to label production-grade image data for machine learning. While some organizations take a do-it-yourself approach and build their own tools, there are many commercially-available image annotation tools, as well as open source and freeware tools. Some tools are narrowly optimized to focus on specific types of labeling, while others offer a broad mix of capabilities to enable many different kinds of use cases. Making the choice between a specialized tool or one with a wider set of features or functionality will depend on your current and anticipated image annotation needs.

What is Amazon Mechanical Turk image annotation?

Amazon Mechanical Turk is an online platform that allows you to access crowdsourced workers to do your image annotation work. You use Amazon’s platform to submit the image annotations you need, and Amazon’s platform distributes that work to anonymous workers. Also known as Amazon mTurk, this option is best for simple one-time projects when your tasks can be easily communicated in writing once, without having additional communication with annotators, and little to no domain expertise or experience is required.

Are there image annotation services?

Yes; there are image annotation services. If you are doing image annotation in-house or using contractors, there are services that can provide crowdsourced or managed-team solutions to assist with scaling your process. The best image annotation services can provide expertise, quality work, and agility to evolve tasks and use cases.

Where can I find image annotation software?

There are many excellent software tools available for image annotation. The tool you choose will be dependent on four things:

- The kind (e.g., image, video) of visual data you are working with;

- The dimension of that data (i.e., 2-D, 3-D);

- How you want the tool to be deployed (e.g., cloud, container, on-premise); and

- The feature sets you want your tool to have (e.g., dataset management, annotation methods, workforce management, data quality control, security).

What is an annotated image?

In machine learning, an annotated image is one that has been labeled using text, annotation tools, or both to show the data features you want your model to recognize on its own. When you annotate an image, you are adding metadata to a dataset. Image annotation is a type of data labeling that is sometimes called tagging, transcribing, or processing. You also can annotate videos continuously, as a stream, or by frame.

How can I do image annotation?

To do image annotation, you can use commercially-available, open source or freeware tools. If you are working with a lot of data, you likely will need a workforce to assist. Tools provide feature sets with various combinations of capabilities, which can be used to annotate visual data, including images and video. There are image annotation services that can provide crowdsourced or managed-team solutions to assist with scaling your process.

What is image annotation for machine learning?

Image annotation for machine learning is the process of labeling or classifying an image using text, drawing tools, or both to show the data features you want your model to recognize on its own. When you annotate an image, you are adding metadata to a dataset. Image annotation is sometimes called data labeling, tagging, transcribing, or processing. You also can annotate videos continuously, as a stream, or by frame.

How can I annotate images for deep learning?

To annotate images for deep learning, you can use commercially-available, open source or freeware tools. If you are working with a lot of data, you likely will need a workforce to assist. Tools provide feature sets with various combinations of capabilities, which can be used to annotate images or video. There are image annotation services that can provide crowdsourced or managed-team solutions to assist with scaling your process. The process of image annotation is substantially the same for machine learning and for deep learning, while the way algorithms are built and trained is different with deep learning.

What are ways to perform image annotation?

Image annotation involves using one or more of these techniques: bounding boxes, landmarking, masking, polygons, polylines, tracking, or transcription. Techniques will be supported by your annotation tool. Tools provide feature sets with various combinations of capabilities, which can be used by your workforce to annotate images or video. There are image annotation services that can provide crowdsourced or managed-team solutions to assist with scaling your process.