With the global natural language processing (NLP) market expected to reach a value of $61B by 2027, NLP is one of the fastest-growing areas of artificial intelligence (AI) and machine learning (ML).

The most common uses of NLP, such as chatbots, virtual assistants, and voice search, may feel like old news. But Gartner has identified several emerging technologies that rely on NLP and are poised to shake up some industries:

- AI for marketing. Systems that combine machine learning, rules-based logic, and NLP adjust marketing platform strategies on the fly based on contextual consumer responses.

- Digital humans and the metaverse. As the metaverse expands and becomes commonplace, more companies will use NLP to develop and train interactive representations of humans in that space.

- Generative design AI. You may have heard of AI that creates images from words typed into the system. Generative AI uses NLP technologies to automatically generate designs, content, and code based on user text or speech inputs.

While the future of NLP is exciting, the challenge lies in training algorithms to understand the nuances of natural speech. Can you teach a computer to understand irony, colloquialisms, and context? Can you train algorithms to recognize dialects and accents? Can your solutions deal with the errors and ambiguities in written language?

The answer to each of those questions is a tentative YES—assuming you have quality data to train your model throughout the development process.

NLP models useful in real-world scenarios run on labeled data prepared to the highest standards of accuracy and quality. But data labeling for machine learning is tedious, time-consuming work. You might lack the resources to do the work in-house. Maybe the idea of hiring and managing an internal data labeling team fills you with dread. Or perhaps you’re supported by a workforce that lacks the context and experience to properly capture nuances and handle edge cases.

That’s where a data labeling service with expertise in audio and text labeling enters the picture. Partnering with a managed workforce will help you scale your labeling operations, giving you more time to focus on innovation.

We’ll talk more about how to get your labeling work done later on. For now, let’s start with the basics. If you already know the basics, skip ahead to the sections that interest you.

Ready? Let’s dive in!

Up next: Natural language processing, data labeling for NLP, and NLP workforce options

First, to provide a broad overview of how NLP technology works, we’ll cover the basics of NLP: What is it? How does it work? Why use natural language processing in the first place?

Next, we’ll shine a light on the techniques and use cases companies are using to apply NLP in the real world today.

Finally, we’ll tell you what it takes to achieve high-quality outcomes, especially when you’re working with a data labeling workforce. You’ll find pointers for finding the right workforce for your initiatives, as well as frequently asked questions—and answers.


Who is this guide for?

This guide is for you if:

- You’re implementing natural language processing techniques and need a closer understanding of what goes into training and data labeling for machine learning.

- You need to prepare high volumes of recorded voice or text data for training natural language processing models.

- You’re interested in learning more about the real-world applications and techniques of natural language processing, machine learning, and artificial intelligence.

The basics of natural language processing

What is natural language processing?

Natural language processing turns text and audio speech into encoded, structured data based on a given framework. It’s one of the fastest-evolving branches of artificial intelligence, drawing from a range of disciplines, such as data science and computational linguistics, to help computers understand and use natural human speech and written text.

Although NLP became a widely adopted technology only recently, it has been an active area of study for more than 50 years. In 1954, Georgetown University and IBM demonstrated the technology by using an IBM 701 mainframe to translate sentences from Russian into English. Today’s NLP models are much more complex thanks to faster computers and vast amounts of training data.

What are the goals of natural language processing?

The goals of NLP are two-fold:

- To make it easier for people and machines to communicate in more natural and user-friendly ways, and

- To derive meaning and insight from many hours of recorded speech and millions of words of written content.

Today, humans speak to computers through code and user-friendly devices such as keyboards, mice, pens, and touchscreens. NLP is a leap forward, giving computers the ability to understand our spoken and written language—at machine speed and on a scale not possible by humans alone.

Using NLP, computers can determine context and sentiment across broad datasets. This technological advance has profound significance in many applications, such as automated customer service and sentiment analysis for sales, marketing, and brand reputation management.

Common NLP techniques and their applications

Virtual digital assistants like Siri, Alexa, and Google Assistant are familiar natural language processing applications. These platforms recognize voice commands to perform routine tasks, such as answering internet search queries and shopping online. According to Statista, more than 45 million U.S. consumers used voice technology to shop in 2021. These interactions are two-way, as the smart assistants respond with prerecorded or synthesized voices.

Another familiar NLP use case is predictive text, such as when your smartphone suggests words based on what you’re most likely to type. These systems learn from users in the same way that speech recognition software progressively improves as it learns users’ accents and speaking styles. Search engines like Google even use NLP to better understand user intent rather than relying on keyword analysis alone.

NLP also pairs with optical character recognition (OCR) software, which translates scanned images of text into editable content. NLP can enrich the OCR process by recognizing certain concepts in the resulting editable text. For example, you might use OCR to convert printed financial records into digital form and an NLP algorithm to anonymize the records by stripping away proper nouns.
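As a rough illustration of that anonymization step, here is a minimal sketch that redacts capitalized words that don’t begin a sentence, a crude stand-in for proper-noun detection. The heuristic and the function name `redact_proper_nouns` are assumptions for illustration only; a production pipeline would use a trained named entity recognition model.

```python
import re

# Naive heuristic (assumption): treat any capitalized word that does not begin
# a sentence as a proper noun. Real systems use trained NER models instead.
_WORD = re.compile(r"[A-Za-z][A-Za-z'-]*")

def redact_proper_nouns(text: str, placeholder: str = "[REDACTED]") -> str:
    result = []
    last_end = 0
    sentence_start = True
    for match in _WORD.finditer(text):
        between = text[last_end:match.start()]
        # Sentence-ending punctuation before this word means a new sentence starts here.
        if any(ch in ".!?" for ch in between):
            sentence_start = True
        word = match.group()
        result.append(between)
        if word[0].isupper() and not sentence_start:
            result.append(placeholder)
        else:
            result.append(word)
        sentence_start = False
        last_end = match.end()
    result.append(text[last_end:])  # keep any trailing punctuation
    return "".join(result)

record = "Payment approved for Maria Lopez. Funds went to Acme Bank on Friday."
print(redact_proper_nouns(record))
```

Note that the heuristic also redacts “Friday,” which is not a personal or organizational name. Errors like this are exactly why real anonymization pipelines rely on models trained on labeled data.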

How does natural language processing work?

If you’ve ever tried to learn a foreign language, you’ll know that language can be complex, diverse, and ambiguous, and sometimes even nonsensical. English, for instance, is filled with a bewildering sea of syntactic and semantic rules, plus countless irregularities and contradictions, making it a notoriously difficult language to learn.

Consider:

- The soldier decided to desert his dessert in the desert.

- If teachers taught, why didn’t preachers praught?

- “I” before “e” except after “c”—except in science and weird.

Natural language processing models tackle these nuances, transforming recorded voice and written text into data a machine can make sense of.

Data preparation, which involves organizing training datasets and labeling key semantic elements, is a key step in developing an NLP model. Common data preparation methods for NLP include:

- Annotating spoken words.

- Annotating intent.

- Breaking down data into smaller semantic units.

- Tagging specific parts of speech—such as nouns, verbs, and adjectives.

- Standardizing individual words by reducing them to their root forms.
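To make two of these steps concrete, the sketch below splits text into word tokens and reduces each one to a rough root form by stripping common English suffixes. The suffix list is an assumption chosen for illustration; it is far cruder than established stemmers such as the Porter stemmer or dictionary-based lemmatizers.

```python
import re

# Hypothetical, deliberately small suffix list, ordered longest first.
SUFFIXES = ("ingly", "edly", "ing", "ies", "ed", "es", "ly", "s")

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens (digits are dropped)."""
    return re.findall(r"[a-z]+", text.lower())

def crude_stem(word: str) -> str:
    """Strip the first matching suffix, as long as at least three characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            return stem + "y" if suffix == "ies" else stem  # "studies" -> "study"
    return word

tokens = tokenize("The labelers labeled 3 datasets, labeling quickly.")
print([crude_stem(t) for t in tokens])
```

Notice that “labelers” becomes “labeler” rather than “label”: simple rules miss many cases, which is one reason annotated data and better-informed tools matter.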

Equipped with enough labeled data, deep learning for natural language processing takes over, interpreting the labeled data to make predictions or generate speech. Real-world NLP models require massive datasets, which may include specially prepared data from sources like social media, customer records, and voice recordings.

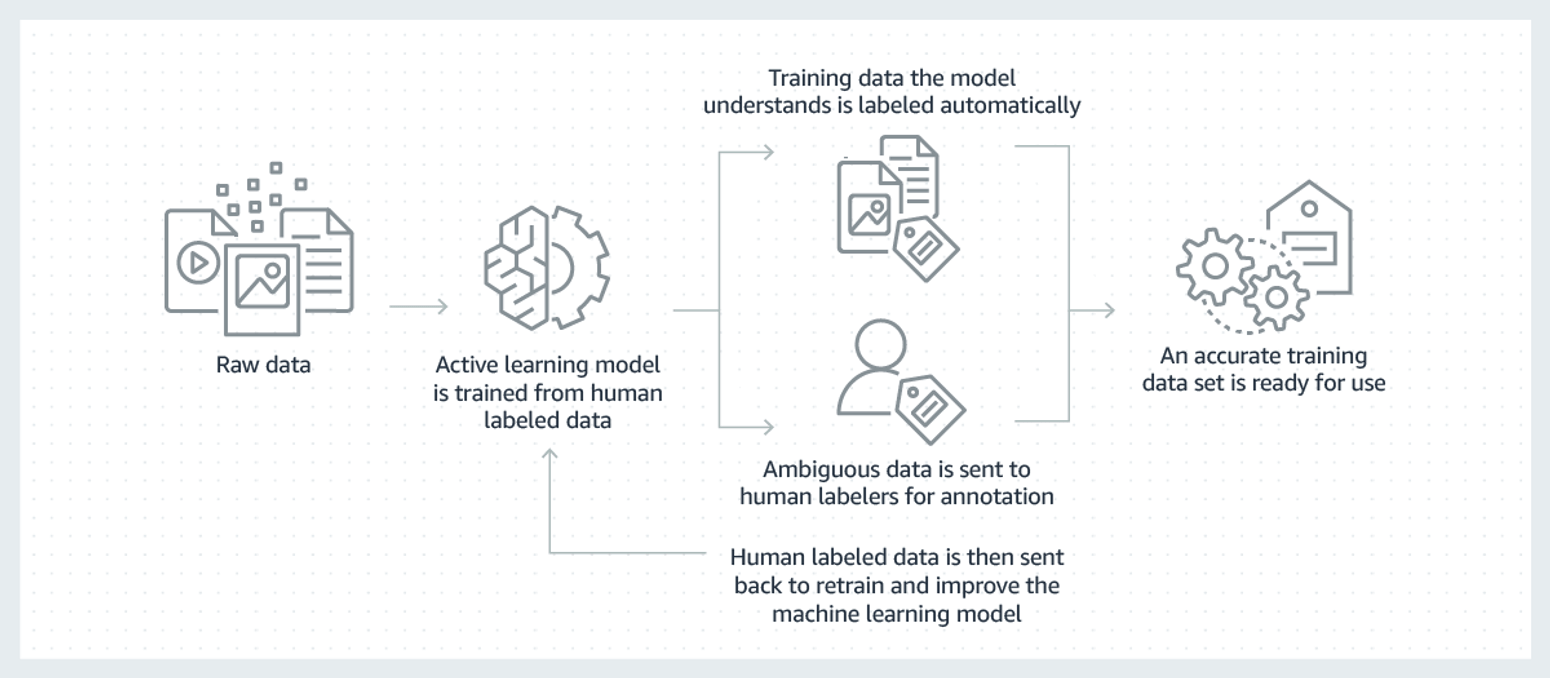

The image above illustrates the process of transforming raw data into a high-quality training dataset. First, humans label raw data to train a nascent NLP model. As more data enters the pipeline, the model labels what it can, and the rest goes to human labelers (also known as humans in the loop, or HITL), who label the data and feed it back into the model. After several iterations, you have an accurate training dataset, ready for use.

Image source: AWS
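The loop described above hinges on a single decision: which items the model keeps and which go to human labelers. Here is a minimal sketch of that routing step, assuming the model returns a label with a confidence score between 0 and 1. The 0.9 threshold, the `Prediction` type, and the sample data are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    text: str
    label: str
    confidence: float  # assumed to be a probability in [0, 1]

def route(predictions, threshold=0.9):
    """Split model output into auto-accepted labels and a human review queue."""
    auto_labeled, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_labeled.append(p)
        else:
            needs_review.append(p)  # humans in the loop label these items
    return auto_labeled, needs_review

batch = [
    Prediction("Where is my refund?", "billing", 0.97),
    Prediction("My card got eaten lol", "billing", 0.55),
    Prediction("Reset my password", "account", 0.92),
]
accepted, queue = route(batch)
print(len(accepted), "auto-labeled;", [p.text for p in queue], "sent to humans")
```

Once humans label the queued items, those labels join the training set and the model is retrained. Where to set the threshold is a trade-off between human workload and the risk of accepting wrong labels.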

What are the benefits of natural language processing?

NLP helps organizations process vast quantities of data to:

- Streamline and automate operations - Automates data processing and routine tasks, freeing up valuable human resources.

- Empower smarter decision-making - Extracts insights from large datasets to support informed, data-driven strategies.

- Improve customer satisfaction - Powers real-time, personalized support through AI-driven tools like chatbots and sophisticated auto-attendants.

Google Cloud’s Contact Center AI (CCAI) is a human-like, AI-powered contact center platform that “understands, interacts, and talks” in natural language, promising more personalized, intuitive customer care “from the first hello.”

Customers calling into centers powered by CCAI can get help quickly through conversational self-service. If their issues are complex, the system seamlessly passes customers over to human agents. Human agents, in turn, use CCAI for support during calls to help identify intent and provide step-by-step assistance, for instance, by recommending articles to share with customers. And contact center leaders use CCAI for insights to coach their employees and improve their processes and call outcomes.

What is the main challenge of natural language processing?

Having to annotate massive datasets is one challenge. But the biggest limitation facing developers of natural language processing models lies in dealing with ambiguities, exceptions, and edge cases due to language complexity. Without sufficient training data on those elements, your model can quickly become ineffective.

Specifically, developers must overcome challenges around:

- Context. The meaning of words and phrases may change depending on context and audience. Consider the word “flex,” for instance. Older generations might think flex means to bend or be pliable, whereas younger generations use flex to mean to show off. Also consider homonyms, words that are pronounced and spelled the same but carry different meanings in different contexts. The word “pen” is a homonym: it can mean a writing instrument or a holding area for animals. NLP offers several techniques that address context issues, such as part-of-speech tagging and context evaluation.

- Synonyms. The most accurate NLP models understand synonyms and the subtle differences between them. For example, a sentiment analysis model might need to understand the difference in intensity between words like “good” and “fantastic.”

- Sarcasm and irony. Machines aren’t exactly known for speaking creatively or making jokes, so it’s hardly surprising that sarcasm and irony present major challenges. Many researchers have studied this issue in recent years, including Potamias, Siolas, and Stafylopatis. Their 2020 study of figurative language and natural language processing used a hybrid neural architecture that includes a recurrent convolutional neural network, and compared its results against existing models on benchmark datasets containing ironic, sarcastic, and metaphoric expressions, such as ironic tweets and political comments from Reddit. Their method successfully detected figurative language in those datasets, achieving results that outperformed, “...even by a large margin, all other methodologies and published studies.”

- Variations. According to Ethnologue, more than 7,000 languages exist today. British English alone comprises almost 40 dialects; American English accounts for approximately 25 dialects. On top of that, developers must contend with regional colloquialisms, slang, and domain-specific language. For example, an NLP model designed for healthcare is unlikely to perform well when applied to legal documentation.
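To show how context can settle the meaning of a homonym like “pen,” here is a sketch in the spirit of the simplified Lesk algorithm: it picks the sense whose short description shares the most words with the surrounding sentence. The two sense descriptions and the stop-word list are hand-written assumptions for illustration; real systems typically draw senses from a lexical database such as WordNet or rely on contextual embeddings.

```python
import re

# Hypothetical sense inventory for the homonym "pen".
SENSES = {
    "writing instrument": "a tool with ink used to write or sign on paper",
    "animal enclosure": "a fenced area where farm animals such as pigs or sheep are kept",
}
STOP_WORDS = {"a", "an", "the", "on", "or", "to", "with", "in", "where",
              "are", "such", "as", "used", "is", "of"}

def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}

def disambiguate(sentence: str) -> str:
    """Return the sense whose description overlaps most with the sentence.

    Ties fall back to the first sense listed in SENSES.
    """
    context = content_words(sentence)
    return max(SENSES, key=lambda sense: len(context & content_words(SENSES[sense])))

print(disambiguate("Please write your name on the form in ink with this pen."))
print(disambiguate("The farmer moved the sheep into the pen."))
```

Word overlap is brittle (“signed” would not match “sign” here without stemming), which is why context-aware models trained on labeled examples usually outperform rule-based approaches.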

Natural language processing techniques

Many text mining, text extraction, and NLP techniques exist to help you extract information from text written in a natural language. Below are some of the most common techniques our clients use.

Aspect mining is identifying the aspects of a subject discussed in text, such as the features of a product mentioned in reviews, often with the help of techniques like part-of-speech tagging.

Categorization is placing text into organized groups and labeling based on features of interest. Categorization is also known as text classification and text tagging.
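Categorization is usually learned from labeled examples. As one simple, classic option, here is a minimal multinomial Naive Bayes text classifier with add-one (Laplace) smoothing. The four training sentences and the “billing” and “shipping” categories are made up for illustration; real projects train on far larger labeled datasets.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize_words(text):
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize_words(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # Log prior plus the smoothed log likelihood of each word.
            score = math.log(doc_count / total_docs)
            for w in tokenize_words(text):
                score += math.log((counts[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

train_texts = [
    "I was charged twice on my invoice",
    "Refund the extra charge please",
    "My package has not arrived yet",
    "The delivery is late and tracking is stuck",
]
train_labels = ["billing", "billing", "shipping", "shipping"]
model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("Where is my package delivery?"))
```

Working in log space avoids numeric underflow when many small probabilities are multiplied. Smoothing keeps a single unseen word from zeroing out a category.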

Data enrichment is deriving and determining structure from text to enhance and augment data. In an information retrieval case, a form of augmentation might be expanding user queries to enhance the probability of keyword matching.
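For the query-expansion case, a minimal sketch might look like the following. The synonym table is a hypothetical stand-in for a curated thesaurus or a learned synonym model.

```python
# Hypothetical synonym table; a real system might use a curated thesaurus.
SYNONYMS = {
    "car": ["automobile", "vehicle"],
    "cheap": ["affordable", "inexpensive"],
}

def expand_query(query: str) -> list[str]:
    """Return each query term followed by its known synonyms, without duplicates."""
    expanded = []
    for term in query.lower().split():
        for candidate in [term, *SYNONYMS.get(term, [])]:
            if candidate not in expanded:
                expanded.append(candidate)
    return expanded

print(expand_query("cheap car insurance"))
```

A search for “cheap car insurance” can now also match documents that only say “affordable automobile insurance.”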

Data cleansing is establishing clarity on features of interest in the text by eliminating noise (distracting text) from the data. It involves multiple steps, such as tokenization, stemming, and normalizing or removing punctuation.

Entity recognition is identifying text that represents specific entities, such as people, places, or organizations. This is also known as named entity recognition, entity chunking, and entity extraction.
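One of the simplest ways to approach entity recognition is a gazetteer, a lookup list of known names. The sketch below scans text for entries from a small, hypothetical gazetteer and returns character offsets with entity types. It illustrates the output format only; recognizing names that aren’t on the list requires a model trained on labeled data.

```python
import re

# Hypothetical gazetteer mapping known names to entity types.
GAZETTEER = {
    "Ada Lovelace": "PERSON",
    "London": "LOCATION",
    "Acme Corp": "ORGANIZATION",
}

def find_entities(text: str) -> list[tuple[int, int, str, str]]:
    """Return (start, end, surface text, type) for every gazetteer match, sorted by start."""
    entities = []
    for name, entity_type in GAZETTEER.items():
        # Word boundaries keep "London" from matching inside "Londoner".
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            entities.append((m.start(), m.end(), m.group(), entity_type))
    return sorted(entities)

print(find_entities("Ada Lovelace joined Acme Corp in London."))
```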

Intent recognition is identifying words that signal user intent, often to determine actions to take based on users’ responses. This is also known as intent detection and intent classification.

Semantic analysis is analyzing context and text structure to accurately distinguish the meaning of words that have more than one definition. This is also called context analysis.

Sentiment analysis is extracting meaning from text to determine its emotion or sentiment. This is also called opinion mining.
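A lexicon-based scorer is the simplest form of sentiment analysis, and it also shows how a model can capture differences in intensity between words like “good” and “fantastic.” The word weights and the one-word negation rule below are assumptions for illustration; production systems typically learn such signals from labeled data.

```python
import re

# Hypothetical lexicon: the sign gives polarity, the magnitude gives intensity.
LEXICON = {"good": 1.0, "fantastic": 2.0, "bad": -1.0, "terrible": -2.0}
NEGATIONS = {"not", "never", "no"}

def sentiment_score(text: str) -> float:
    words = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, word in enumerate(words):
        weight = LEXICON.get(word, 0.0)
        # Flip polarity when the immediately preceding word is a negation.
        if i > 0 and words[i - 1] in NEGATIONS:
            weight = -weight
        score += weight
    return score

def sentiment_label(text: str) -> str:
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for review in ["The support was fantastic!", "The app is not good.", "It arrived on Tuesday."]:
    print(review, "->", sentiment_label(review), sentiment_score(review))
```

Simple rules like these break down quickly on sarcasm (“Oh great, another outage”), which is where human-labeled training data earns its keep.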

Syntax analysis is analyzing strings of symbols in text, conforming to the rules of formal grammar. This is also known as syntactic analysis.

Taxonomy creation is creating hierarchical structures of relationships among concepts in text and specifying terms to refer to each.

Text summarization is creating brief descriptions that include the most important and relevant information contained in text.
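Text summarization comes in two broad flavors: extractive (selecting existing sentences) and abstractive (writing new ones). The sketch below takes a basic extractive approach. It scores each sentence by the average document-wide frequency of its non-stop words and keeps the top-scoring sentences in their original order. The stop-word list and scoring rule are simplifying assumptions.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on", "was", "with"}

def words(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def summarize(text: str, max_sentences: int = 2) -> str:
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(words(text))

    def score(sentence):
        ws = words(sentence)
        return sum(freq[w] for w in ws) / len(ws) if ws else 0.0

    # Pick the highest-scoring sentences, then restore document order.
    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:max_sentences]
    return " ".join(sentences[i] for i in sorted(top))

doc = ("The labeling team reviewed the contracts. "
       "Contracts with unusual clauses went to senior reviewers. "
       "Lunch was served at noon. "
       "Senior reviewers flagged clauses in the contracts for the legal team.")
print(summarize(doc))
```

The off-topic sentence about lunch scores lowest because its words appear nowhere else in the document.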

Topic analysis is extracting meaning from text by identifying recurrent themes or topics. This is also known as topic labeling.

Use cases for NLP

Chances are that your industry is already using NLP. The technology industry analyst AIMultiple outlines 30 NLP use cases for the healthcare, finance, retail, HR, and cybersecurity industries.

Here are a few ways we’ve seen different industries using natural language processing:

NLP in finance: automating data analysis

Financial services is an information-heavy industry sector, with vast amounts of data available for analysis. Data analysts at financial services firms use NLP to automate routine finance processes, such as capturing earnings calls and evaluating loan applications.

Stock traders use NLP to make more informed decisions and recommendations. The NLP-powered IBM Watson analyzes stock markets by crawling through extensive amounts of news, economic, and social media data to uncover insights and sentiment, and to make predictions and recommendations based on those insights.

The insurance industry is diving into NLP, too. Consider Liberty Mutual’s Solaria Labs, an innovation hub that builds and tests experimental new products. Solaria’s mandate is to explore how emerging technologies like NLP can transform the business and lead to a better, safer future.

NLP in legal services: contract review and compliance

Legal services is another information-heavy industry buried in reams of written content, such as witness testimonies and evidence. Law firms use NLP to scour that data and identify information that may be relevant in court proceedings, as well as to simplify electronic discovery.

NLP also automates many time-consuming, burdensome tasks in the legal sector, for example, performing contract reviews, sussing out meaning in regulatory responses, and probating a will, a process that can take years to complete manually but only minutes at machine speed.

Learn how Heretik, a legal machine learning company, used machine learning to transform legal agreements into structured, actionable data with CloudFactory’s help. Our data analysts labeled thousands of legal documents to accelerate the training of its contract review platform.

NLP in customer support: AI chatbots and virtual assistants

Customer service chatbots are one of the fastest-growing use cases of NLP technology. The most common approach is to use NLP-based chatbots to begin interactions and address basic problem scenarios, bringing human operators into the picture only when necessary.

Lemonade Insurance is a good example. Lemonade created Jim, an AI chatbot, to communicate with customers after an accident. If the chatbot can’t handle the conversation, real-life Jim, the bot’s human alter ego, steps in.

The healthcare industry also uses NLP to support patients via teletriage services. In practices equipped with teletriage, patients enter symptoms into an app and get guidance on whether they should seek help. NLP applications have also shown promise for detecting errors and improving accuracy in the transcription of dictated patient visit notes.

Learn how one service-based business, True Lark, deployed NLP to automate sales, support, and marketing communications for their customers after teaming up with CloudFactory to handle data labeling.

NLP in sales and marketing: sentiment analysis & automation

Thanks to social media, a wealth of publicly available feedback exists—far too much to analyze manually. NLP makes it possible to analyze and derive insights from social media posts, online reviews, and other content at scale. For instance, a company using a sentiment analysis model can tell whether social media posts convey positive, negative, or neutral sentiments.

More advanced NLP models can even identify specific features and functions of products in online content to understand what customers like and dislike about them. Marketers then use those insights to make informed decisions and drive more successful campaigns.

The retail industry also uses NLP to increase sales and brand loyalty. In-store, virtual assistants allow customers to get one-on-one help just when they need it—and as much as they need it. Online, chatbots key in on customer preferences and make product recommendations to increase basket size.

Data labeling for NLP explained

Data labeling for machine learning is the process of annotating raw data, such as images, videos, text content, and audio recordings, to train machine learning models. Labeling data for natural language processing is one of the most important but time-consuming parts of creating and maintaining models. It encompasses a wide range of tasks, including data tagging, annotation, classification, moderation, transcription, and processing.

What are labels in deep learning?

All supervised deep learning tasks require labeled datasets in which humans apply their knowledge to train machine learning models. Labeled datasets may also be referred to as ground-truth datasets because you’ll use them throughout the training process to teach models to draw the right conclusions from the unstructured data they encounter during real-world use cases. NLP labels might be identifiers marking proper nouns, verbs, or other parts of speech.

How do you annotate a document?

The use of automated labeling tools is growing, but most companies use a blend of humans and auto-labeling tools to annotate documents for machine learning. Whether you incorporate manual or automated annotations or both, you still need a high level of accuracy.

To annotate text, annotators manually select individual words and phrases (or, in scanned documents, draw bounding boxes around them) and assign labels, tags, and categories to them to let the models know what they mean. This labeled data becomes your training dataset.
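In practice, text labels are commonly stored as character-offset spans. The sketch below shows one such record format, plus a check that each span’s offsets actually point at the text it claims to label, a cheap quality-control step. The `SpanLabel` structure and its field names are hypothetical, not the format of any particular annotation tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SpanLabel:
    start: int   # character offset where the span begins (inclusive)
    end: int     # character offset where the span ends (exclusive)
    text: str    # the exact text the annotator selected
    label: str   # e.g. "PERSON" or "DATE"

def invalid_spans(document: str, spans: list[SpanLabel]) -> list[SpanLabel]:
    """Return spans whose offsets are out of range or don't match their recorded text."""
    bad = []
    for s in spans:
        in_range = 0 <= s.start < s.end <= len(document)
        if not in_range or document[s.start:s.end] != s.text:
            bad.append(s)
    return bad

doc = "Invoice 4471 was signed by Dana Reyes on 3 March."
spans = [
    SpanLabel(27, 37, "Dana Reyes", "PERSON"),
    SpanLabel(41, 48, "3 March", "DATE"),
    SpanLabel(0, 6, "Invoice", "DOC_TYPE"),  # end offset is off by one
]
print(invalid_spans(doc, spans))
```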

To annotate audio, you might first convert it to text or directly apply labels to a spectrographic representation of the audio files in a tool like Audacity. If you work in Python, you can write code that reads the spectrogram data and displays it alongside the corresponding labels.
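Audio labels are usually stored as time ranges in seconds, while a spectrogram is indexed by frame. The helper below converts between the two. It assumes a short-time Fourier transform with a fixed hop length (the number of samples between successive frames); the 16 kHz sample rate and 160-sample hop (10 ms) are example values, not requirements.

```python
def seconds_to_frames(start_s: float, end_s: float,
                      sample_rate: int = 16_000, hop_length: int = 160) -> tuple[int, int]:
    """Map a labeled time range to a half-open range of spectrogram frames.

    Simplification (assumption): frame i covers samples
    [i * hop_length, (i + 1) * hop_length), ignoring window overlap and centering.
    """
    # Round to whole samples first so floating-point noise (e.g. 0.42 * 16000)
    # cannot push a boundary into the neighboring frame.
    start_sample = round(start_s * sample_rate)
    end_sample = round(end_s * sample_rate)
    first = start_sample // hop_length
    last = -(-end_sample // hop_length)  # ceiling division
    return first, last

labels = [(0.00, 0.42, "greeting"), (0.42, 1.10, "account_number")]
for start, end, label in labels:
    print(label, seconds_to_frames(start, end))
```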

What is an annotation task?

Annotating documents and audio files for NLP takes time and patience. For instance, you might need to highlight all occurrences of proper nouns in documents, and then further categorize those nouns by labeling them with tags indicating whether they’re names of people, places, or organizations.

Common annotation tasks include named entity recognition, part-of-speech tagging, and keyphrase tagging. For more advanced models, you might also need to annotate the relationships between entities, or use entity linking to connect each entity mention to an entry in a knowledge base. Another common task is text classification, which identifies subjects, intents, or sentiments of words, clauses, and sentences.
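Span-level annotations such as named entities are often converted into token-level tags before training. A common convention is BIO tagging: B- marks the first token of an entity, I- marks the tokens inside it, and O marks everything else. The sketch below assumes whitespace tokenization and entity spans given as (start token, end token exclusive, type); both are simplifications for illustration, and the sketch does not check for overlapping spans.

```python
def to_bio(tokens: list[str], entities: list[tuple[int, int, str]]) -> list[str]:
    """Convert token-index entity spans (start, end exclusive, type) into BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, entity_type in entities:
        tags[start] = f"B-{entity_type}"
        for i in range(start + 1, end):
            tags[i] = f"I-{entity_type}"
    return tags

tokens = "Grace Hopper worked at the U.S. Navy".split()
entities = [(0, 2, "PER"), (5, 7, "ORG")]
for token, tag in zip(tokens, to_bio(tokens, entities)):
    print(f"{token}\t{tag}")
```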

What approach do you use for automatic labeling?

Automatic labeling, or auto-labeling, is a feature in data annotation tools for enriching, annotating, and labeling datasets. Although AI-assisted auto-labeling and pre-labeling can increase speed and efficiency, they work best when paired with humans in the loop to handle edge cases, exceptions, and quality control.

At CloudFactory, we believe humans in the loop and labeling automation are interdependent. We use auto-labeling where we can to make sure we deploy our workforce on the highest value tasks where only the human touch will do. This mixture of automatic and human labeling helps you maintain a high degree of quality control while significantly reducing cycle times.

Data labeling workforce options and challenges

Text data continues to proliferate at a staggering rate. Due to the sheer size of today’s datasets, you may need programming languages well suited to data work, such as Python and R, to derive insights from those datasets at scale.

For natural language processing (NLP) to be effective, models need large amounts of pre-processed and annotated data. While scaling data labeling is challenging, supervised learning remains a crucial step in model development.

Below are the most common options for scaling data labeling in NLP.

Automation

Today, because so many large structured datasets—including open-source datasets—exist, automated data labeling is a viable, if not essential, part of the machine learning model training process.

But automation is far from perfect. Even AI-assisted auto-labeling will encounter data it doesn’t understand, like words or phrases it hasn’t seen before or nuances of natural language it can’t derive accurate context or meaning from. When automated processes encounter these issues, they raise a flag for manual review, which is where humans in the loop come in. In other words, people remain an essential part of the process, especially when human judgment is required, such as for multiple entries and classifications, contextual and situational awareness, and real-time errors, exceptions, and edge cases.

In-house teams

Data labeling is easily the most time-consuming and labor-intensive part of any NLP project. Building in-house teams is an option, although it might be an expensive, burdensome drain on you and your resources. Employees might not appreciate you taking them away from their regular work, which can lead to reduced productivity and increased employee churn. While larger enterprises might be able to get away with creating in-house data-labeling teams, they’re notoriously difficult to manage and expensive to scale.

Crowdsourcing

Natural language processing models sometimes require input from people across a diverse range of backgrounds and situations. Crowdsourcing presents a scalable and affordable opportunity to get that work done with a practically limitless pool of human resources.

But there’s a major downside: Crowdsourced annotators are typically anonymous, making it difficult to maintain labeling consistency, worker accountability, and quality control oversight.
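One common way to quantify labeling consistency, whichever workforce model you use, is to have two annotators label the same items and compute Cohen’s kappa. Kappa measures agreement beyond what chance alone would produce: 1.0 means perfect agreement and 0 means chance level. Here is a minimal implementation with made-up labels.

```python
from collections import Counter

def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Agreement expected by chance, given each annotator's own label frequencies.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout (convention)
    return (observed - expected) / (1 - expected)

annotator_1 = ["pos", "pos", "neg", "neu", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neu", "pos", "neg"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))
```

The two annotators agree on five of six items (about 83%), but kappa is lower (roughly 0.74) because some of that agreement would be expected by chance.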

Business process outsourcing

Traditional business process outsourcing (BPO) is a method of offloading tasks, projects, or complete business processes to a third-party provider. In terms of data labeling for NLP, the BPO model relies on having as many people as possible working on a project to keep cycle times to a minimum and maintain cost-efficiency.

While business process outsourcers provide higher quality control and assurance than crowdsourcing, there are downsides. BPO labelers, like crowdsourced labelers, will be anonymous to you. They may move in and out of projects, leaving you with inconsistent labels. BPOs also tend to lack flexibility. If you need to shift use cases or quickly scale labeling, you may find yourself waiting longer than you’d like.

Managed workforces

Managed workforces are more agile than BPOs, more accurate and consistent than crowds, and more scalable than internal teams. They provide dedicated, trained teams that learn and scale with you, becoming, in essence, extensions of your internal teams.

Managed workforces are especially valuable for sustained, high-volume data-labeling projects for NLP, including those that require domain-specific knowledge. Consistent team membership and tight communication loops enable workers in this model to become experts in the NLP task and domain over time.

What to look for in an NLP data labeling service

The best data labeling services for machine learning strategically apply an optimal blend of people, process, and technology.

1. Consider people

Quality starts with the people who do your work. When you hire a partner that values ongoing learning and workforce development, the people annotating your data will flourish in their professional and personal lives. Because people are at the heart of humans in the loop, keep top of mind how your prospective data labeling partner treats its people.

When you’re confident that a workforce is committed to supporting its people, consider asking:

Do the people have the skill to label the data our model needs to succeed?

An NLP-centric workforce is skilled in the natural language processing domain. Your initiative benefits when your NLP data analysts follow clear learning pathways designed to help them understand your industry, task, and tool.

To sweeten the partnership even more, ask about location:

Does your workforce operate globally?

If your chosen NLP workforce operates in multiple locations, providing mirror workforces when necessary, you get geographical diversification and business continuity with one partner.

Speaking of global presence, and for the topic of NLP, the next thing to ask about is languages:

Can your workforce handle multiple languages?

In our global, interconnected economies, people are buying, selling, researching, and innovating in many languages. Ask your workforce provider what languages they serve, and if they specifically serve yours.

2. Consider the process

Even before you sign a contract, ask the workforce you’re considering to set forth a solid, agile process for your work.

Look for a workforce with enough depth to perform a thorough analysis of the requirements for your NLP initiative—a company that can deliver an initial playbook with task feedback and quality assurance workflow recommendations.

You need this analysis to choose the right workforce. And it’s here where you’ll likely notice the experience gap between a standard workforce and an NLP-centric workforce.

You’ll also want to know how a prospective partner combines processes to work in your favor. Ask:

How do you incorporate automation, AI, and HITL processes into your workflows?

An NLP-centric workforce builds workflows that leverage the best of humans combined with automation and AI to give you the “superpowers” you need to bring products and services to market fast.

Many data annotation tools have an automation feature that uses AI to pre-label a dataset; this is a remarkable development that will save you time and money.

Although automation and AI processes can label large portions of NLP data, there’s still human work to be done. You can’t eliminate the need for humans with the expertise to make subjective decisions, examine edge cases, and accurately label complex, nuanced NLP data.

Speaking of NLP data, here’s the next process-related question to ask a workforce you’re considering:

Is your workforce trained to label NLP data?

An NLP-centric workforce will know how to accurately label NLP data, which, due to the nuances of language, can be subjective. Even the most experienced analysts can get confused by nuances, so it’s best to onboard a team with specialized NLP labeling skills and high language proficiency.

3. Consider technology

When it comes to data labeling for NLP, there are several types of technology that are needed to get the job done right. When talking labeling technology with a potential workforce, ask:

What tools will you use to label the data in this workflow?

An established NLP-centric workforce is an all-around tooling champion. They use the right tools for the project, whether from their internal or partner ecosystem, or your licensed or developed tool. A tooling-flexible approach ensures that you get the best-quality outputs.

Next, because metrics matter, ask:

How do you handle performance management?

An NLP-centric workforce that cares about performance and quality will have a comprehensive management tool that allows both you and your vendor to track performance and overall initiative health. As an NLP client, you should be able to see key metrics on demand. And your workforce should be actively monitoring and taking action on elements of quality, throughput, and productivity on your behalf.

And finally, because the success of your projects hinges on communication, ask:

How will our teams communicate?

An NLP-centric workforce will use a workforce management platform that allows you and your analyst teams to communicate and collaborate quickly. You can convey feedback and task adjustments before the data work goes too far, minimizing rework, lost time, and higher resource investments.

And communication isn’t a one-way street. The right messaging channels create a seamless, quality feedback loop between your team and the NLP team lead. You get increased visibility and transparency, and everyone involved can stay up-to-date on progress, activities, and future use cases.

Introducing CloudFactory's NLP-centric workforce

CloudFactory is a workforce provider offering trusted human-in-the-loop solutions that consistently deliver high-quality NLP annotation at scale.

To deploy new or improved NLP models, you need substantial sets of labeled data. Developing those datasets takes time and patience, and may call for expert-level annotation capabilities.

Today, many innovative companies are perfecting their NLP algorithms by using a managed workforce for data annotation, an area where CloudFactory shines.

CloudFactory provides a scalable, expertly trained human-in-the-loop managed workforce to accelerate AI-driven NLP initiatives and optimize operations. Our approach gives you the flexibility, scale, and quality you need to deliver NLP innovations that increase productivity and grow your business.

Why Choose CloudFactory?

For more than a decade, CloudFactory has delivered all the benefits of an in-house team without the high costs and burdens of managing one. Here’s how we make it possible:

We extend your team. Our vetted, managed teams have served hundreds of clients across thousands of use cases that range from simple to complex.

We deliver quality at scale. Our proven processes securely and quickly deliver accurate data and are designed to scale and change with your needs.

We have people who care. Our robust vetting and selection process means that only the top 15% of candidates make it to our clients’ projects. Rest assured, you’ll have a dedicated and committed team.

Questions to ask a prospective NLP workforce

- How do you screen and select data analysts for your workforce?

- Does your workforce receive NLP training?

- What investments do you make in workforce development?

- Is your workforce distributed globally?

- What time zones are they in?

- What languages do you cover?

- Do you have clear disaster recovery, outage, and business continuity plans?

- Do you have the proven agility to scale projects up or down quickly?

- Will you provide an analysis or pilot program before we sign a contract with you?

- When preparing training data, do you incorporate automation, AI assistance, and HITL?

- What tools does your workforce use to label data?

- How will our team communicate with your analysts?

- How do you report on quality, throughput, and productivity metrics?

- Do you offer dedicated project managers?

- Do you offer customer success managers?

Frequently Asked Questions

What is natural language processing (NLP)?

Natural language processing is a form of artificial intelligence that focuses on interpreting human speech and written text. NLP can serve as a more natural and user-friendly interface between people and computers by allowing people to give commands and carry out search queries by voice. Because NLP works at machine speed, you can use it to analyze vast amounts of written or spoken content to derive valuable insights into matters like intent, topics, and sentiment.

How does natural language processing work?

Natural language processing algorithms allow machines to understand natural language in either spoken or written form, such as a voice search query or chatbot inquiry. An NLP model needs processed training data to learn grammatical structure and to identify the meaning and context of words and phrases. Given the characteristics of natural language and its many nuances, NLP is a complex process, and it is commonly implemented in Python and other high-level programming languages.
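To make "processed data" more concrete, here is a minimal Python sketch of a few common preprocessing steps (lowercasing, tokenizing, and dropping stopwords) using only the standard library. The function name `preprocess`, the regular expression, and the short stopword list are illustrative assumptions, not any particular library's pipeline; production systems typically rely on dedicated tokenizers.

```python
import re

# Illustrative stopword list (an assumption; real projects use larger curated lists).
STOPWORDS = {"a", "an", "the", "is", "to", "of", "and", "for", "in", "on", "my"}


def preprocess(text: str) -> list[str]:
    """Lowercase, tokenize, and drop stopwords from a piece of text."""
    # Lowercasing makes "Weather" and "weather" the same token.
    lowered = text.lower()
    # Keep runs of letters, allowing one inner apostrophe ("what's", "don't").
    tokens = re.findall(r"[a-z]+(?:'[a-z]+)?", lowered)
    # Remove very common words that carry little meaning on their own.
    return [tok for tok in tokens if tok not in STOPWORDS]


if __name__ == "__main__":
    print(preprocess("What's the weather in Boston on Friday?"))
```

For the sample voice query, the sketch keeps the tokens "what's", "weather", "boston", and "friday", which is the kind of normalized input a model is trained on.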

Is natural language processing part of machine learning?

Natural language processing is a subset of artificial intelligence. Some, but not all, NLP techniques fall within machine learning. Modern NLP applications often rely on machine learning algorithms to progressively improve their understanding of natural text and speech. These machine learning models are based on advanced statistical methods and learn to carry out tasks through extensive training. By contrast, earlier approaches to crafting NLP algorithms relied entirely on predefined rules created by computational linguistic experts.
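The contrast between rule-based and learned approaches can be sketched in a few lines of Python. The word lists, the `NaiveBayes` class, and the training sentences are hypothetical; the learned half is a textbook multinomial naive Bayes classifier with add-one smoothing, chosen for brevity rather than as a stand-in for modern NLP models.

```python
import math
from collections import Counter

# Rule-based approach: hypothetical word lists an expert might write by hand.
POSITIVE_WORDS = {"great", "love", "excellent"}
NEGATIVE_WORDS = {"terrible", "hate", "broken"}


def rule_based_sentiment(text: str) -> str:
    """Score text by counting hand-picked positive and negative words."""
    words = text.lower().split()
    score = sum(w in POSITIVE_WORDS for w in words) - sum(w in NEGATIVE_WORDS for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


class NaiveBayes:
    """Learned approach: multinomial naive Bayes fit on labeled examples."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.label_counts = Counter(labels)
        # sorted() keeps label order deterministic when scores tie.
        self.word_counts = {label: Counter() for label in sorted(self.label_counts)}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        total = sum(self.label_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # Log prior plus log likelihood with add-one (Laplace) smoothing.
            score = math.log(self.label_counts[label] / total)
            denom = sum(counts.values()) + len(self.vocab)
            for w in text.lower().split():
                score += math.log((counts[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    # Hypothetical labeled training data.
    texts = ["love this phone", "great battery life",
             "screen arrived cracked", "battery died fast"]
    labels = ["positive", "positive", "negative", "negative"]
    model = NaiveBayes().fit(texts, labels)
    print(rule_based_sentiment("phone screen cracked"))
    print(model.predict("phone screen cracked"))
```

With this toy data, the rule-based function returns "neutral" for "phone screen cracked" because "cracked" is not in its hand-written lists, while the trained classifier returns "negative" based on the labeled examples it has seen.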

What are natural language processing techniques?

Natural language processing extracts relevant pieces of data from natural text or speech using a wide range of techniques. One of these is text classification, in which pieces of text are tagged and labeled according to factors like topic, intent, and sentiment. Another is text extraction, which includes keyword extraction and named entity recognition and involves pulling specific pieces of data, such as keywords or the names of people, places, and organizations, out of existing content. More advanced NLP methods include machine translation, topic modeling, and natural language generation.
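A very simple form of keyword extraction ranks the words in a text by how often they appear once stopwords are removed. This sketch assumes a hypothetical `extract_keywords` helper and a small hand-picked stopword list; real systems usually add weighting schemes such as TF-IDF, and named entity recognition typically uses trained models.

```python
import re
from collections import Counter

# Illustrative stopword list (an assumption; real systems use larger curated lists).
STOPWORDS = {"the", "a", "an", "and", "or", "is", "are", "was", "to", "of",
             "in", "on", "for", "with", "it", "this", "that", "my", "i"}


def extract_keywords(text: str, top_n: int = 3) -> list[str]:
    """Return the top_n most frequent non-stopword terms in text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    # Skip stopwords and very short tokens, then count what remains.
    counts = Counter(t for t in tokens if t not in STOPWORDS and len(t) > 2)
    # most_common() sorts by count; ties keep first-seen order.
    return [word for word, _ in counts.most_common(top_n)]


if __name__ == "__main__":
    review = ("The battery drains fast. I charged the battery twice, "
              "and the battery still drains.")
    print(extract_keywords(review))
```

For the sample review, `extract_keywords` returns `['battery', 'drains', 'fast']`: "battery" appears three times, "drains" twice, and "fast" wins the tie among single-occurrence words because it appears first.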

Where is NLP used?

The most common use case for NLP is voice-controlled smart assistants, such as Apple's Siri or Amazon Alexa, which let users interact with computers simply by speaking to them. Another common use case is chatbots in customer support, sales, and marketing. These provide a natural, albeit AI-powered, way for customers to resolve issues and queries quickly rather than waiting for human representatives. More advanced use cases include sentiment analysis for analyzing customer feedback across social media and online review sites, uncovering sales signals in inbound and outbound calls, and classifying and prioritizing incoming emails.

Why is natural language processing difficult?

Language is complex and full of nuances, variations, and concepts that machines cannot easily understand. Many characteristics of natural language are high-level and abstract, such as sarcastic remarks, homonyms, and rhetorical speech. The nature of human language differs from the mathematical ways machines function, and the goal of NLP is to serve as an interface between the two different modes of communication.

What is data labeling?

In machine learning, data labeling refers to the process of identifying raw data, such as visual, audio, or written content, and adding metadata to it. This metadata helps the machine learning algorithm derive meaning from the original content. For example, in NLP, data labels might indicate whether words are proper nouns or verbs. In sentiment analysis algorithms, labels might mark words or phrases as positive, negative, or neutral.
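Labeled data is often stored as simple records that pair raw content with annotator-supplied metadata. The sketch below assumes hypothetical record types for the two examples above (sentiment labels and part-of-speech tags) plus a check that every label comes from an agreed label set. The tag names are loosely modeled on the Universal Dependencies part-of-speech tags; the record types and `find_problems` helper are illustrative, not a specific tool's format.

```python
from dataclasses import dataclass

# Hypothetical label sets agreed on before annotation starts.
SENTIMENT_LABELS = {"positive", "negative", "neutral"}
POS_TAGS = {"PROPN", "VERB", "NOUN", "ADJ", "DET", "ADP"}


@dataclass
class SentimentExample:
    text: str   # raw written content
    label: str  # metadata added by an annotator


@dataclass
class TokenExample:
    tokens: list[str]  # raw content split into words
    tags: list[str]    # one part-of-speech tag per token


def find_problems(sentiment_examples: list[SentimentExample],
                  token_examples: list[TokenExample]) -> list[str]:
    """Return human-readable descriptions of labeling errors."""
    problems = []
    for i, ex in enumerate(sentiment_examples):
        if ex.label not in SENTIMENT_LABELS:
            problems.append(f"sentiment[{i}]: unknown label {ex.label!r}")
    for i, ex in enumerate(token_examples):
        if len(ex.tokens) != len(ex.tags):
            problems.append(f"tokens[{i}]: {len(ex.tokens)} tokens but {len(ex.tags)} tags")
        for tag in ex.tags:
            if tag not in POS_TAGS:
                problems.append(f"tokens[{i}]: unknown tag {tag!r}")
    return problems


if __name__ == "__main__":
    reviews = [SentimentExample("Great service", "positive")]
    tagged = [TokenExample(["Alexa", "plays", "music"], ["PROPN", "VERB", "NOUN"])]
    print(find_problems(reviews, tagged))
```

Checks like these catch a mistyped label or a missing tag before the data reaches training; they cannot tell whether a correctly spelled label is the right one, which still takes human review.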

Why is data labeling important?

Labeled data is essential for training a machine learning model so it can reliably interpret unstructured data in real-world use cases. In general, the more high-quality labeled data you use to train the model, the more accurate it becomes. Data labeling is a core component of supervised learning, in which labeled examples provide the basis for the model's future learning and data processing. Training a viable model requires large amounts of data, and that data must be refreshed regularly to accommodate new situations and edge cases.