To develop ML models with large amounts of data, you must combine people, processes, and technology to enhance the data. This will allow you to train, validate, and adjust your model.

If your team is like most, you do most of your data annotation work in-house. Or, you might be crowdsourcing.

Either way, are you ready to outsource data labeling and hire a data annotation provider?

If so, this guide will teach you everything you need to know about data labeling. You'll learn about technology, terminology, best practices, and the top questions to ask potential service providers.


Introduction:

Will this guide be helpful to me?

Let’s get a handle on why you’re here. This guide is for you if you have data to label for ML, are facing common challenges, and are considering hiring a top data labeling company.

What challenges do data annotation companies help to overcome?

- You have a lot of unlabeled data.

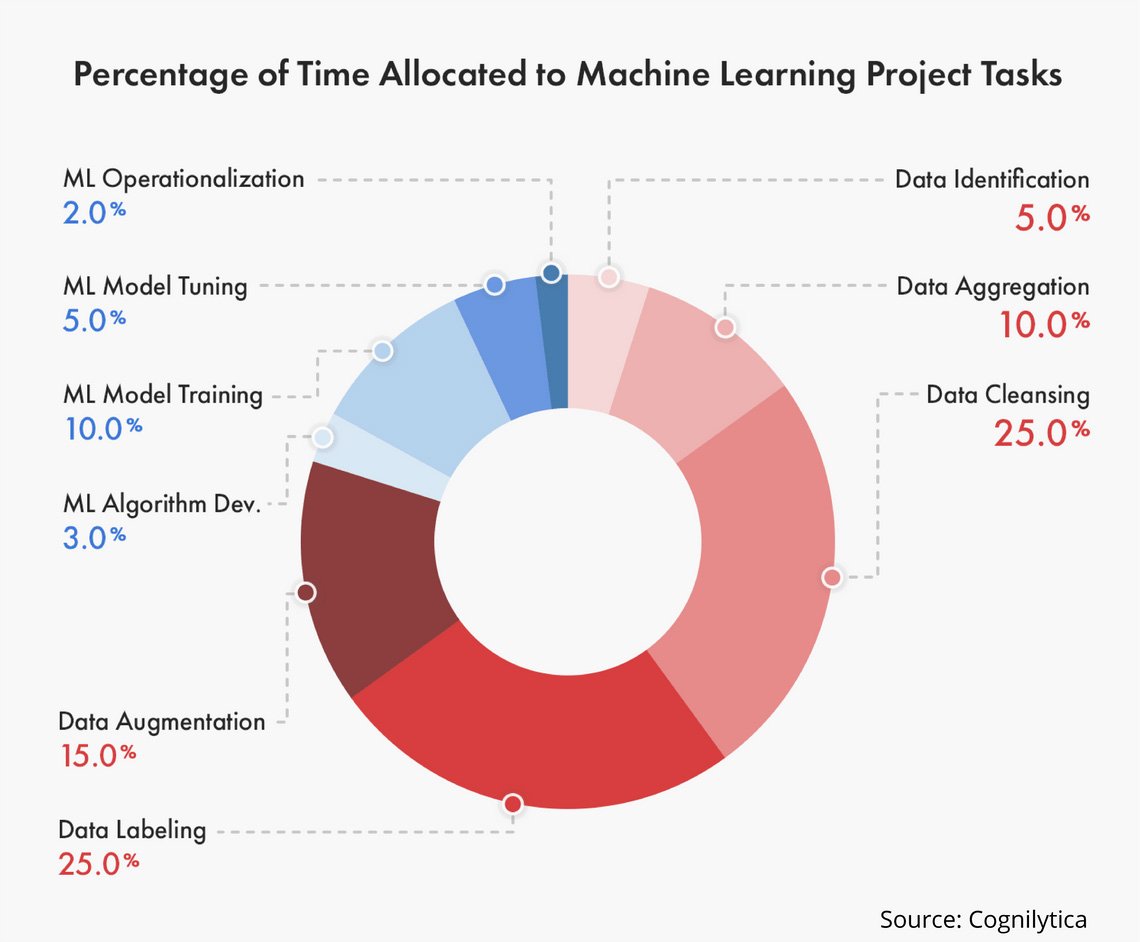

Most data is raw (not in labeled form), and that’s the challenge for most AI project teams as they race to produce usable data. According to analyst firm Cognilytica, gathering, organizing, and labeling data consumes 80% of AI project time.

(Source: DZone)

- Your data labels are of low quality.

This is likely the case if you’ve been crowdsourcing your data labeling. Crowdsourcing relies on anonymous, unvetted workers, which can result in lower quality and inconsistencies across datasets.

- You want to scale your data labeling operations.

Volume is growing and you need to expand your capacity. You may be doing the work in-house, using contractors, or crowdsourcing. These methods make data labeling difficult and expensive to scale.

- Your data labeling process is inefficient or costly.

If you’re paying your data scientists to wrangle data, it’s smart to look for another approach. Data scientist salaries can reach $190,000 a year, so it’s expensive to have some of your highest-paid resources spending time on basic, repetitive work.

- You want to add or enhance your data labeling QA process.

This is an often-overlooked area of data labeling that can provide significant value, particularly during the iterative ML model training, validation, and testing stages.

Let's get started:

The basic data annotation lingo

What is computer vision?

Computer vision is a field of computer science that deals with how computers can be made to gain a high-level understanding of digital images or videos. Simply put, it's the ability of computers to understand and interpret what they see.

Using input from cameras and sensors, computers can recognize objects, interpret scenes, and make decisions based on visual information.

What is data labeling?

In ML, if you have labeled data, that means a data labeler has marked up or annotated data to show the target, which is the answer you want your machine learning model to predict. Data labeling can generally refer to tasks that include data tagging, annotation, classification, moderation, transcription, or processing.

What is data annotation?

Data annotation generally refers to the process of labeling data. Data annotation and data labeling are often used interchangeably, although they can be used differently based on the industry or use case.

Labeled data calls out data features - properties, characteristics, or classifications - that can be analyzed for patterns that help predict the target.

In computer vision for retail shelf analysis, for example, a data labeler can use image-by-image labeling tools to show where products are located, whether products are out of stock, whether promotional displays are present, and whether price tags are incorrect.

Or, in computer vision for satellite image processing, a data labeler can use image-by-image labeling tools to identify and segment solar farms, wind farms, bodies of water, and parking lots.
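As a rough illustration, here is how one labeled shelf image might be stored as structured data. The schema (`BoundingBox`, `ShelfAnnotation`, `LabeledImage`), the attribute names, and the image path are hypothetical; real labeling tools and formats such as COCO define their own fields.

```python
from dataclasses import dataclass, field


@dataclass
class BoundingBox:
    """Axis-aligned box in pixels: (x_min, y_min) is top-left, (x_max, y_max) bottom-right."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class ShelfAnnotation:
    """One labeled object on a shelf image (hypothetical schema)."""
    label: str          # the class the model should learn to predict
    box: BoundingBox    # where the object sits in the image
    attributes: dict = field(default_factory=dict)  # extra labeled features


@dataclass
class LabeledImage:
    image_path: str     # hypothetical path, for illustration only
    annotations: list = field(default_factory=list)


# A labeler marks up one shelf photo: two products and a promotional display.
shelf = LabeledImage(
    image_path="store_12/aisle_4.jpg",
    annotations=[
        ShelfAnnotation("brand_a_soup", BoundingBox(10, 40, 90, 160),
                        {"out_of_stock": False, "price_tag_incorrect": False}),
        ShelfAnnotation("brand_b_soup", BoundingBox(100, 40, 180, 160),
                        {"out_of_stock": True, "price_tag_incorrect": True}),
        ShelfAnnotation("promo_display", BoundingBox(200, 0, 400, 300)),
    ],
)

# Labeled attributes can then be queried or turned into training targets.
out_of_stock = [a.label for a in shelf.annotations if a.attributes.get("out_of_stock")]
print(out_of_stock)  # ['brand_b_soup']
```

Each annotation pairs a location (the box) with the answer you want the model to learn (the label and its attributes), which is what makes the image "labeled."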

What is training data in machine learning?

Training data is the enriched data you use to train an ML algorithm.

This is different from test data, which is a held-out sample of labeled data that you use to evaluate how well a model trained on your training dataset performs on data it hasn't seen.
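A minimal sketch of holding out test data, assuming your labeled records fit in a Python list. The `train_test_split` helper here is hypothetical and written with the standard library for illustration; libraries such as scikit-learn ship a similarly named function with more options.

```python
import random


def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle labeled records and hold out a fraction as test data.

    The test set is never used for training; it stands in for unseen,
    real-world data when you evaluate the trained model.
    """
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(records)                # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # seeded for a reproducible split
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# 100 hypothetical labeled examples: (input id, label)
labeled = [(f"img_{i:03d}", "cat" if i % 2 else "dog") for i in range(100)]
train, test = train_test_split(labeled)
print(len(train), len(test))  # 80 20
```

The key property is that no record appears in both sets; otherwise the evaluation would overstate how well the model generalizes.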

What are the labels in machine learning?

Labels are what the human in the loop uses to identify and call out features that are present in the data. It’s critical to choose informative, discriminating, and independent features to label if you want to develop high-performing algorithms in pattern recognition, classification, and regression. In machine learning, the process of choosing the features you want to label is highly iterative and deeply influenced by your workforce choice.

What is human in the loop?

Human in the loop (HITL) is a way of designing AI systems that integrate humans into the process. This can be done at any stage, from collecting and labeling data to training, evaluating, and deploying the system into production.

HITL systems are often used for data labeling tasks that machines can't yet perform reliably on their own, such as detecting objects in images or transcribing audio recordings.

By incorporating human feedback, HITL systems can produce more accurate and reliable labeled datasets, leading to better-performing machine learning models.
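One common HITL pattern, shown here as an illustration rather than the only design, is confidence-based routing: a model pre-labels data, and anything it is unsure about goes to a person. The `Prediction` type and the 0.9 threshold are assumptions for this sketch; in practice you would tune the threshold against your own quality targets.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_predictions(predictions, threshold=0.9):
    """Split model pre-labels into an auto-accept list and a human-review queue.

    Predictions at or above the threshold keep the model's label; the rest go
    to a human labeler, whose corrected labels can be added to the training data.
    """
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


preds = [
    Prediction("a1", "pedestrian", 0.97),
    Prediction("a2", "cyclist", 0.62),
    Prediction("a3", "car", 0.91),
]
accepted, review = route_predictions(preds)
print([p.item_id for p in review])  # ['a2']
```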

What is ground truth data?

Accurately labeled data can provide ground truth for testing and iterating your models.

The term “ground truth” is borrowed from meteorology, where it describes on-site confirmation of data reported by a remote sensor, such as a Doppler radar.

In ML and computer vision, ground truth data refers to accurately labeled data that reflects the real-world condition or characteristics of an image or other data point. Researchers can use ground truth data to train and evaluate their AI models.

This ground truth data is used as a standard to test and validate algorithms in image recognition or object detection systems.

From an ML perspective, ground truth data is one of two things:

- An image that is annotated with the highest quality for use in machine learning. For example, a data labeler annotating an image that shows soup cans on a retail shelf accurately and precisely labels the cans of a brand’s soup and those of its competitors. The worker’s exact labeling of those features in the data establishes ground truth for that image.

- An image used for comparison or context to establish ground truth for another image. For example, a data labeler can use a high-resolution panoramic image of a grocery store to inform the labeling for other, lower-resolution images of a display shelf in the same store.

How are companies labeling their data?

Organizations use a combination of software tools and people to label data. In general, you have five options for your data labeling workforce:

- Employees - They're on your payroll, either full-time or part-time. Their job description includes data labeling. They may be on-site or remote.

- Contractors - They're temporary or freelance workers (e.g., Upwork).

- Crowdsourcing - You use a third-party platform to access large numbers of workers at once (e.g., Amazon Mechanical Turk).

- BPOs - General business process outsourcers (BPOs) have many workers but may lack the expertise or commitment needed for data annotation tasks.

- Managed teams - You leverage managed teams for vetted, trained data labelers (e.g., CloudFactory).

CloudFactory has been annotating data for over a decade. Over that time, we’ve learned how to combine people, process, and technology to optimize data labeling quality.


Five factors to consider when labeling data for ML

The following section details the five factors every team needs to consider when navigating the process of data labeling: quality, scale, process, security, and tools. It will equip you with the knowledge to master this crucial step and build successful ML projects.

Factor one: Data Quality

The first factor to consider when you need to label data for ML is data quality. A data labeling service should be able to demonstrate that they have a strong track record in providing high-quality data.

What affects quality and accuracy in data labeling?

You already know your ML model is only as good as the data it's trained on.

Low-quality data can backfire and ultimately jeopardize the effectiveness and quality of the models you put into production.

To build, validate, and maintain high-performing ML models in production, you must train them on trusted, reliable data.

Keep in mind: Accuracy and quality are two different things.

- Accuracy in data labeling measures how close the labeling is to ground truth or how well the labeled features in the data are consistent with real-world conditions. This is true whether you’re building computer vision models (e.g., doing semantic segmentation or putting bounding boxes around objects on street scenes) or natural language processing (NLP) models (e.g., classifying text for social sentiment), or even generative AI models (e.g., generating images).

- Quality in data labeling is about accuracy across the overall dataset. Does the work of all of your data labelers look the same? Is labeling consistently accurate across your datasets? This is relevant whether you have 29, 89, or 999 data annotators working simultaneously.
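The difference between accuracy and quality can be sketched in a few lines, assuming you have ground truth for a set of items (here, hypothetical text items tagged with the sense of "bass"). Per-labeler accuracy measures closeness to ground truth; the spread of accuracy across labelers is one simple, assumed proxy for dataset-level consistency, not a standard industry metric.

```python
from statistics import mean


def labeler_accuracy(labels, ground_truth):
    """Accuracy: share of a labeler's items that match ground truth."""
    scored = [item for item in labels if item in ground_truth]
    if not scored:
        return None  # no overlap with ground truth, so accuracy is unknown
    correct = sum(labels[item] == ground_truth[item] for item in scored)
    return correct / len(scored)


def dataset_quality(labels_by_worker, ground_truth):
    """Quality: accuracy per labeler plus how much it varies across the team."""
    per_worker = {
        worker: labeler_accuracy(labels, ground_truth)
        for worker, labels in labels_by_worker.items()
    }
    scores = [s for s in per_worker.values() if s is not None]
    if not scores:
        raise ValueError("no labeler overlaps with ground truth")
    return {
        "per_worker": per_worker,
        "mean_accuracy": mean(scores),
        "spread": max(scores) - min(scores),  # a large spread means inconsistent labeling
    }


# Hypothetical task: tag which sense of "bass" each text item uses.
truth = {"t1": "fish", "t2": "music", "t3": "music", "t4": "fish"}
work = {
    "ann": {"t1": "fish", "t2": "music", "t3": "music", "t4": "fish"},
    "ben": {"t1": "fish", "t2": "fish", "t3": "music", "t4": "music"},
}
report = dataset_quality(work, truth)
print(report["per_worker"], report["spread"])  # {'ann': 1.0, 'ben': 0.5} 0.5
```

Here one labeler is perfectly accurate, but the dataset as a whole is not high quality, because half of the second labeler's work disagrees with ground truth.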

Four workforce traits that affect quality in data labeling

With over a decade of experience providing managed data labeling teams for startup, growth, and enterprise companies, we’ve learned four workforce traits that affect data labeling quality for ML projects: expertise, agility, relationship, and communication.

- Expertise

In data labeling, expertise is essential for your workforce to create high-quality, structured datasets for ML. This is what we call the art and science of digital work. It's knowing how to integrate people, processes, and technology to achieve your desired business outcomes.

There will be trade-offs, and your data labeling service should be able to help you think through them strategically. For example, you’ll want to choose the right QA model that balances quality and cost with your business objectives.

We’ve learned workers label data with far higher quality when they have the context or know about the setting or relevance of the data they're labeling.

For example, people labeling your text data should understand when certain words may be used in multiple ways, depending on the meaning of the text.

To tag the word “bass” accurately, they will need to know if the text relates to fish or music. They might need to understand how words may be substituted for others, such as “Kleenex®” for “tissue.”

For the highest quality data, labelers should know key details about the industry you serve and how their work relates to the problem you are solving.


It’s even better when a member of your labeling team has domain expertise or a deep understanding of the industry your data serves so they can manage the team and train new members on rules related to context, the business, and edge cases.

For example, the vocabulary, format, and style of text related to retail can vary significantly from that of the geospatial industry.

- Agility

ML is an iterative process. Data labeling evolves as you test and validate your models and learn from their outcomes, so you’ll need to prepare new datasets and enrich existing datasets to improve your algorithm’s results.

Your data labeling team should have the flexibility to incorporate changes that adjust to your end users’ needs, changes in your product, or the addition of new products.

A flexible data labeling team can react to changes in data volume, task complexity, and task duration. The more adaptive to change your labeling team is, the more ML projects you can work through.

As you develop algorithms and train your models, data labelers can provide valuable insights about data features - the properties, characteristics, or classifications that will be analyzed for patterns that help predict the target or answer you want your model to predict.

- Relationship

In ML, your workflow changes constantly. You need data labelers who can respond quickly and make changes in your workflow based on what you’re learning in the model testing and validation phase.

To succeed in this agile environment, you need a data labeling company that can adapt to the processes for your unique use case while also having the experience and expertise to know what processes are needed in the first place.

It’s even better if you have a direct connection to a leader on your data labeling team so you can iterate data features, attributes, and workflow based on what you’re learning in the testing and validation phases of ML.

- Communication

Direct communication between your project team and labeling team is essential. A closed feedback loop is an excellent way to establish reliable collaboration.

Labelers should be able to share their insights and feedback on common edge cases as they label the data so you can use their insights to improve your approach. When multiple teams are involved, each should have a dedicated team lead or project manager to coordinate with the data annotators.

CloudFactory clients rave about our closed feedback loop with data labeling teams. Glenn Smith, COO and head of product at CognitionX, said this about our approach, “They use a cloud portal to give transparency of their progress. With its native chat functionality, it was easy to communicate with the relevant team members and for them to contact us with questions.”

How is quality measured in data labeling?

There are four ways we measure data labeling quality from a workforce perspective:

- Gold standard - There’s a correct answer for the task. Measure quality based on correct and incorrect tasks.

- Sample review - Select a random sample of completed tasks. A more experienced worker, such as a team lead or project manager, reviews the sample for accuracy.

- Consensus - Assign several people to do the same task, and the correct answer is the one that comes back from the majority of labelers.

- Intersection over union (IoU) - This is an overlap metric used in object detection in images, often as a consensus check. It can compare bounding boxes drawn by different labelers, or combine people and automation to compare the bounding boxes of your hand-labeled, ground truth images with the predicted bounding boxes from your model. To calculate IoU, divide the area where the bounding boxes overlap by the area of the combined bounding boxes, or the union.
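The IoU calculation can be written directly from that definition. This sketch assumes axis-aligned boxes given as `(x_min, y_min, x_max, y_max)` tuples; the `iou` function name is our own, not from a specific library.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap rectangle; width and height clamp to 0 when the boxes don't intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection  # subtract so the overlap is counted once
    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)  # hand-labeled box
predicted = (5, 0, 15, 10)     # model's box, shifted right by 5 pixels
print(iou(ground_truth, predicted))  # 0.3333333333333333
```

An IoU of 1.0 means the boxes match exactly and 0.0 means they don't overlap. A threshold such as 0.5 is commonly used to decide whether a predicted box counts as a match, but the right cutoff depends on your use case.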

You'll want the freedom to choose from these quality assurance methods instead of being locked into a single model to measure quality. At CloudFactory, we use one or more of these methods on each project to measure the work quality of our own data labeling teams.
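The gold standard, sample review, and consensus methods in the list above can each be sketched in a few lines of Python. The function names and the strict-majority rule for consensus are assumptions for illustration; teams often tune agreement thresholds per task.

```python
import random
from collections import Counter


def gold_standard_score(answers, gold):
    """Gold standard: share of tasks with a known correct answer that the labeler got right."""
    graded = [task for task in answers if task in gold]
    if not graded:
        raise ValueError("no gold-standard tasks in this batch")
    return sum(answers[t] == gold[t] for t in graded) / len(graded)


def sample_for_review(completed_task_ids, sample_size, seed=0):
    """Sample review: pick random completed tasks for a team lead to check."""
    pool = list(completed_task_ids)
    return random.Random(seed).sample(pool, min(sample_size, len(pool)))


def consensus_label(votes, min_share=0.5):
    """Consensus: the label chosen by a strict majority of labelers, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_share else None


print(consensus_label(["car", "car", "truck"]))   # car
print(consensus_label(["car", "truck"]))          # None
print(len(sample_for_review(range(1, 501), 25)))  # 25
```

A `None` from `consensus_label` is a useful signal in itself: it flags an ambiguous item that may need an edge-case rule or a reviewer's decision.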

Some of our clients bring exact accuracy specifications for their work. For example, in one data labeling use case for machine learning, our managed teams label data to train algorithms that identify counterfeit retail products.

The combination of the labeling tool, our technology platform, and our managed-team approach made it possible to iterate the process, resulting in better team morale, higher productivity, and, most importantly, near 100% accuracy.

Critical questions to ask your data labeling service about data quality:

- How will our team communicate with your data labeling team?

- Will we work with the same data labelers over time? If workers change, who trains new team members? Describe how you transfer context and domain expertise as team members transition on/off the data labeling team.

- Is your data labeling process flexible? How will you manage changes or iterations from our team that affect data features for labeling?

- What standard do you use to measure quality? How do you share quality metrics with our team? What happens when quality measures aren’t met?

Factor two: Scalability

The second factor to consider when you need to label data for ML is scale. You want an elastic capacity to scale your workforce up or down according to your project and business needs without compromising data quality.

What happens when your data labeling volume increases?

Data labeling is a time-consuming process, and it’s even more so in ML, which requires you to iterate and evolve data features as you train and tune your models to improve data quality and model performance.

As the complexity and volume of your data increase, so will your need for labeling. Video annotation, for example, is especially labor-intensive: each hour of video data collected takes about 800 human hours to annotate. A 10-minute video recorded at 30 to 60 frames per second contains between 18,000 and 36,000 frames.
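The arithmetic behind those figures is easy to reuse for planning. This sketch treats the roughly 800:1 ratio of human hours to video hours as a rough planning assumption, not a constant; actual effort depends on annotation type, object density, and tooling.

```python
def video_frames(duration_minutes, fps):
    """Number of frames in a clip of the given length at the given frame rate."""
    return int(duration_minutes * 60 * fps)


def annotation_hours(video_hours, human_hours_per_video_hour=800):
    """Rough labor estimate; the 800:1 default is a planning assumption."""
    return video_hours * human_hours_per_video_hour


print(video_frames(10, 30), video_frames(10, 60))  # 18000 36000
print(annotation_hours(10))  # 8000
```

At that ratio, just 10 hours of collected video would need about 8,000 human hours of annotation.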

How do I know when to scale data labeling?

It's time to scale if data labeling is consuming the time of your high-value resources like data scientists and ML engineers or if data volumes are outgrowing your in-house capabilities.

Data scientists can command extremely high hourly rates. It's more effective to apply their skills to strategic analysis and extracting business insights from the data than to tasks like data labeling.

And if data volumes grow over weeks or months, the demands of manual labeling can strain your in-house capabilities, leading to delays and potential bottlenecks in your ML and data analysis projects.

To effectively address these challenges, consider adopting scalable solutions such as a data annotation company that can handle the growing data labeling needs without overburdening your internal resources.

Five steps to scale data labeling

- Design for workforce capacity. A data labeling service can access a large pool of qualified workers. Crowdsourcing can, too, but there's a great risk that anonymous workers will deliver lower-quality data than managed teams on identical data labeling tasks.

Your best bet is working with the same team of labelers because as their knowledge of your business rules, context, and edge cases increases, data quality will improve. They also can train new people as they join the team.

This is especially helpful with data labeling for ML projects, where quality and flexibility to iterate are essential.

- Look for elasticity to scale labeling up or down. For example, you may have to label data in real time based on the volume of incoming data generated by your products’ end users.

Perhaps your business has seasonal spikes in purchase volume over certain weeks of the year before gift-giving holidays.

Or, during the growing season, your company may need to label a large volume of data in order to keep its models up-to-date. You'll want a workforce that can adjust scale based on your specific needs.

Product launches can also generate significant spikes in data labeling volume.

CloudFactory has extensive experience helping clients manage these spikes. For example, we recently assisted a client with a product launch that required 1,200 hours of data labeling over five weeks. We completed this intense burst of work on time and within budget, allowing our client to focus on other aspects of their product launch.

Shifts in client needs and use cases can also affect data labeling volume.

We recently worked with a client who needed to provide innovative mobile solutions to test and develop new retail AI use cases quickly. Launching a minimum viable product (MVP) was essential, but the costs and resources required for internal labeling software and managing a team of labelers were a hurdle. By employing CloudFactory's Accelerated Annotation, the client successfully launched their MVP within two months, maintaining their competitive edge.

- Choose smart tooling. Whether you buy it or build it yourself, the data annotation tool you choose will significantly influence your ability to scale data labeling, including your ability to use automated data labeling approaches.

It’s a progressive process; today's data labeling tasks may look different in a few months.

Whether you’re growing or operating at scale, you’ll need a tool flexible enough to grow with you as you mature processes, iterate on the labels that drive the best model performance, and move into production.

While tools built in-house give you the utmost control over their workflow and capabilities, commercially available tools have matured to be highly flexible and customizable while including best practices learned over thousands of use cases.

Using a third-party tool allows you to focus on growing and scaling your data labeling operations without the overhead of maintaining an in-house tool.

- Measure worker productivity. Productivity is influenced by three aspects of your data labeling workforce: volume of completed work, quality of the work (accuracy plus consistency), and worker engagement.

On the worker side, process excellence is the goal. Combining technology, workers, and coaching shortens labeling time, increases throughput, and minimizes downtime. We have found data quality is higher when we place data annotators in small teams, train them on your tasks and business rules, and show them what quality work looks like.

Small-team leaders encourage collaboration, peer learning, support, and community building. Workers’ skills and strengths are known and valued by their team leads, who allow workers to grow professionally.

The small-team approach and a smart tooling environment result in high-quality data labeling. Workers use the closed feedback loop to deliver valuable insights to the machine learning project team that can improve labeling quality, workflow, and model performance.

Worker productivity improves greatly when your labeling processes include a workforce management platform that provides task matching based on worker skills, supply-demand management to distribute and manage large volumes of concurrent tasks flexibly, performance feedback to support labelers' continuous improvement, and a secure workspace that adheres to the strictest security practices.

- Streamline communication between your project and data labeling teams. Organized, accessible communication with your data labeling team makes it easier to scale the work.

We use a closed feedback loop for communication with your labeling team so you can make impactful changes fast, such as changing your labeling workflow or iterating data features.

When data labeling directly powers your product features or customer experience, labelers’ response time must be fast, and communication is key. Data labeling service providers should be able to work across time zones and align their communication with the time zones where your machine learning project's end users are.
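The elasticity and productivity steps come together in capacity planning. As a rough sketch, assuming a 40-hour labeler week and a measured per-labeler throughput (both hypothetical planning inputs), you can estimate how many labelers a spike requires. Applied to the product-launch example above, 1,200 hours over five weeks works out to 240 hours a week, or about six full-time labelers.

```python
import math


def labeling_hours(item_count, items_per_labeler_hour):
    """Total labeler hours, from measured throughput (completed items per hour)."""
    return item_count / items_per_labeler_hour


def labelers_needed(total_hours, weeks, hours_per_labeler_week=40):
    """Full-time labelers needed to finish the work on schedule, rounded up."""
    weekly_load = total_hours / weeks
    return math.ceil(weekly_load / hours_per_labeler_week)


# The product-launch burst: 1,200 hours over five weeks.
print(labelers_needed(1200, 5))  # 6

# A hypothetical seasonal spike: 50,000 images at 25 images per labeler-hour in 4 weeks.
hours = labeling_hours(50_000, 25)
print(hours, labelers_needed(hours, 4))  # 2000.0 13
```

This deliberately ignores ramp-up time, QA overhead, and turnover, which a real plan would need to account for; it only shows why measured throughput is the input that makes scaling decisions concrete.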

Critical questions to ask your data labeling service about scale:

- Describe the scalability of your workforce. How many data annotators can we access at any one time? Can we scale data labeling volume up or down based on our needs? How often can we do that?

- How do you measure worker productivity? How long does it take a team of your data labelers to reach full throughput? Is task throughput impacted as your data labeling team scales? Do increases in throughput as the team scales impact data quality?

- How do you handle iterations in our data labeling features and operations as we scale?

- Tell us about the client support we can expect once we engage with your team. How often will we meet? How much time should my team plan to spend managing the project?

Factor three: The Process

We think of data labeling operations as an integration between people, process, and technology. Data is your raw material, and you must move it through multiple steps and quality assurance for it to be useful in ML. Delivering high accuracy consistently requires a strategic and collaborative approach.

What is integrative data labeling?

Integrative data labeling is a holistic approach that combines skilled human oversight, advanced technology, and streamlined processes to produce high-quality, labeled datasets. It ensures that tasks requiring human intuition, such as context understanding and complex annotations, are seamlessly integrated with automated tools that handle repetitive or large-scale tasks.

This balance is what enables accuracy, scalability, and efficiency in your labeling operations while allowing you to maintain the flexibility to adapt to evolving project needs.

What are the four essential elements of integrative data labeling?

The following four elements are essential to design your data labeling pipeline for quality, throughput, and scale.

- Apply technology

Integrative data labeling is your tech-and-human stack. You’ll want to assign people tasks that need domain subjectivity, context, and adaptability.

Give machines tasks that are better done with repetition, measurement, and consistency. You can also use technology to streamline communication with your labeling team, which leads to higher accuracy in labeling.

- Use a managed workforce

Teams that are vetted, trained, and actively managed deliver higher skill levels, engagement, accountability, and quality.

Unlike the anonymous workers you get with crowdsourcing, managed teams gain more context and a better understanding of your model the more they work with your data. This continuity leads to more productive workflows and higher-quality training data.

- Measure quality

Data quality determines model performance. Consider how important quality is for your tasks today and how that could evolve over time.

- Design for flexibility

As you move through the process of ML, you’ll want the agility to add data features to your labeling so you can advance your model training, testing, and validation.

What are the data labeling jobs to be done?

The labeling work within integrative data labeling includes a wide array of tasks:

- Labeling data

- Quality assurance for data labeling

- Process iteration, such as changes in data feature selection, task progression, or QA

- Management of data labelers

- Training of new team members

- Project planning, process operationalization, and measurement of success

Critical questions to ask your data labeling service about process:

- Is your data labeling process integrative? If so, how?

- How do you apply and leverage technology across your processes and data labeling resources?

- How will my company be able to communicate with your data labelers?

- Are your data labelers vetted and trained on my company's specific use cases and labeling needs?

- How do you measure the quality of your data labels to ensure the ML models we train are effective?

- Can your people, processes, and technology scale up and down based on my company's needs?

Factor four: Data Security

The fourth factor to consider when you need to label data for machine learning is data security. A data labeling service should comply with regulatory or other requirements based on the level of security your data requires.

What are the security risks of data labeling outsourcing?

Your data labeling service can compromise security when their workers:

- Access your data from an insecure network or use a device without malware protection.

- Download or save some of your data (e.g., via screen captures or flash drives).

- Don't have formal contracts in place, such as an NDA.

- Don't have an established remote work policy.

- Don’t have training, context, or accountability related to security rules for your work.

- Work in a physical or digital environment that isn't certified to comply with the data regulations and standards your business must observe (e.g., HIPAA, SOC 2).

Security and your data labeling workforce

If data security and privacy are factors in your ML process, your data labeling service must have the right training, policies, and processes in place - and they should have the certifications to show their process has been reviewed and your data will remain safe.

With the right approach, a third party can provide data security equivalent to what you'd get with in-house data labeling, including preventing your data from being used to inform and train other models outside your own company.

Most importantly, your data labeling service must respect your data the way you and your organization do. They should also have a documented data security policy in each of these four areas:

- People and workforce: This could include background checks for workers and may require labelers to sign a non-disclosure agreement (NDA) or similar document outlining your data security requirements. The workforce could be managed or measured for data security compliance. It may include worker training on security protocols related to your data.

- Technology and network: Workers may be required to turn in devices they bring into the workplace, such as a mobile phone or tablet. Download or storage features may be disabled on devices workers use to label data. Network security is likely to be significantly enhanced, whether the worker is on-premises or remote.

- Facilities and workspace: Workers may sit in a space that blocks others from viewing their work. They may work in a secure location, with badged access that allows only authorized personnel to enter the building or room where data is being labeled. Video monitoring may be used to enhance physical security for the building and the room where work is done.

- Data ownership: This includes having the right data security policies in place to ensure your company retains the rights to the data and labels specific to your use cases and projects.

Security concerns shouldn’t stop you from using a data labeling service that will free up you and your team to focus on the most innovative and strategic parts of ML: model training, algorithm development, and model production.


Critical questions to ask your data labeling service about security:

- Will you use my labeled datasets to create or augment other datasets and make them available to third parties?

- For our required level of security, do you have a secure labeling platform where network and security requirements are in place?

- How do you screen and approve workers to work in secure facilities?

- What kind of data security training do you provide to workers?

- What kind of network security do you have in place?

- What happens when new people join the team?

- If I require work to be done in a secure facility, what measures will you take to secure the facilities where our work is done? Do you use video monitoring for projects requiring higher security levels?

- How do you protect data that’s subject to regulatory requirements, such as HIPAA or GDPR? What about personally identifiable information (PII)?

Factor five: Tools for smarter annotation

The fifth factor to consider when you need to label data for ML is tooling. A data labeling service should be able to provide recommendations and best practices in choosing and working with data labeling tools.

It's best if they offer an integrated, full-stack annotation solution, giving you a seamless experience across people, process, and technology.

To label production-grade training data for ML, you need both smart software tools and skilled humans in the loop. Assign people tasks that require context, creativity, and adaptability while giving machines tasks that require speed, measurement, and consistency.

A recent article from McKinsey Digital highlights the benefits of human-machine collaboration. The article argues that now is the time to optimize teams composed of machines and people. Companies can supercharge their innovation and performance by thinking of machines as partners instead of servants or tools.

Choosing a data labeling tool: Six steps

We’ve found that these six steps are essential when choosing your data labeling tool to maximize data quality and optimize your workforce investment:

-

Narrow tooling based on your use case. Your data type will determine the tools available to use. Tools vary in data enrichment features, quality assurance (QA) capabilities, supported file types, data security certifications, storage options, and more. Features for labeling may include bounding boxes, polygons, masks, 2-D and 3-D points, tags, attributes, and more.

-

Compare the benefits of build vs. buy. Building your own tool can give you more control over the labeling process, software changes, and data security. However, you should only consider building if the tool adds unique intellectual property to the overall value proposition you offer your customers.

Otherwise, buying is the way to go.

Buying a commercially viable tool will generally be less costly, freeing up capital for other aspects of your ML project. You can configure the tool for the features you need, and the vendor provides user support.

Commercial tooling has matured, which is why buying is usually the best choice. For most data labeling workloads, there's more than one commercially viable tool available, and vendors are developing new tools and advanced features all the time.

When you buy, you’re essentially leasing access to the tools, which means:

- A funded company has a vested interest in the tool's success.

- You can use more than one tool based on your needs.

- Your tool provider supports the product, so you don’t have to spend valuable engineering resources on tooling.

-

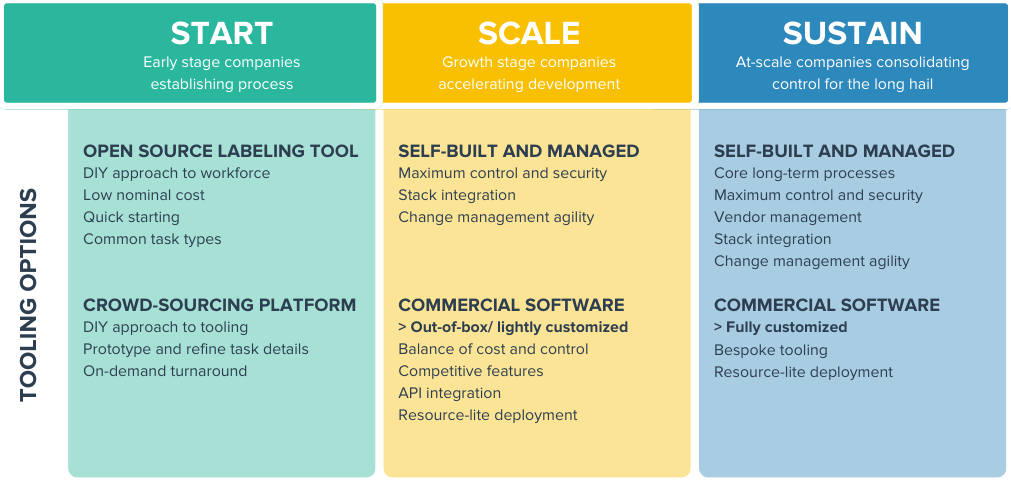

Consider your organization’s size and growth stage. We’ve found company stage and AI maturity to be important factors in choosing your tool.

-

Establishing your workforce: If your organization is small or you are just establishing your labeling process, you’ll have to find your own workforce. You can pair that workforce with a commercially viable tool, or you can choose crowdsourcing and use the crowdsourcing vendor’s tooling platform. Keep in mind that crowdsourced data labelers are anonymous, so context and quality are likely to be pain points.

-

Scaling the process: If you are in the growth stage, commercially viable tools are likely your best choice. You can lightly customize, configure, and deploy competitive features with little to no development resources.

Look for a data annotation provider that can help you get models into production faster by scaling training data as needed, for example with AI-powered auto-labeling that learns quickly and improves the speed and accuracy of annotation.

If you prefer, open-source tools give you more control over security, integration, and flexibility to make changes. Remember, building on an open-source tool is a big commitment: you’ll invest in maintaining that platform over time, and that can be costly.

-

Sustaining scale: If you are operating at scale and want to sustain that growth over time, you can get commercially viable tools that are fully customized and require few development resources.

Seek out a data labeling company that uses active management to continuously monitor the quality of labeled data and provide feedback to labelers or adjust labeling guidelines as needed. This iterative process helps maintain high-quality data at scale over time.

If you go the open-source route, be sure to create long-term processes and stack integration that let you realize the security or agility advantages you chose open source for.

-

Don't decouple the data labelers from the data labeling platform. For the most flexibility, control, and security over data and processes, ensure the workforce and labeling platform are closely tied and integrated. Your workforce choice, coupled with the labeling platform, can make or break the quality of your labeled data, thus impacting model training success.

-

Factor in your data quality requirements. Quality assurance features are built into some tools, and you can use them to automate a portion of your QA process. A few managed workforce providers can supply trained workers who have extensive labeling experience, which produces higher-quality training data.

-

Beware of contract commitment implications. Ensure the vendor offers annual contracts with a relevant consumption model, workforce management, contractual SLAs, predictable spend, and commercial terms for annual or multi-year commitments. Look for contracts that are flexible enough to let you scale your data labeling up or down as needed.

Critical questions to ask your data labeling service about tools:

- Do you provide a data labeling tool? Can I access your workforce without using the tool?

- What labeling tools, use cases, and data features does your team have experience with?

- How would you handle data labeling tool changes as our data labeling needs change? Does that have an adverse impact on your data labeling team?

- Describe how you handle quality assurance and how you layer it into the data labeling task progression. How involved in QA will my team need to be?

TL;DR

If you're ready to hire a data labeling service, you'll need to understand the available technology, best practices, and questions to ask prospective providers.

Here’s a quick recap of what we’ve covered, with reminders about what to look for when you’re hiring a data labeling service.

Ensure High-Quality Labeling

Data quality is a critical factor to consider when labeling data for machine learning. It's important to choose a data labeling service that has a strong track record of providing high-quality data.

There are four workforce traits that affect quality in data labeling: expertise, agility, relationship, and communication. Data annotators should have the expertise to understand the data they're labeling and the context in which it will be used. They should also be agile and able to adapt to changes in the data labeling process. Additionally, data labelers should have a good relationship with the project team and be able to communicate effectively.

There are four ways to measure quality in data labeling: gold standard, sample review, consensus, and intersection over union (IoU). The best method for measuring quality will vary depending on the project and the type of data being labeled.
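As a rough illustration of how gold standard, consensus, and IoU can be computed, here is a minimal Python sketch, plus the sampling step behind sample review (the review itself is a human check). It assumes axis-aligned bounding boxes given as `(x_min, y_min, x_max, y_max)` and class labels as plain strings; the function names are hypothetical helpers, not part of any particular labeling tool or vendor API.

```python
import random
from collections import Counter

# Assumed box format: (x_min, y_min, x_max, y_max)
Box = tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def consensus_label(labels: list[str]) -> tuple[str, float]:
    """Majority label across annotators and the share of annotators who chose it."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


def gold_standard_accuracy(labels: dict[str, str], gold: dict[str, str]) -> float:
    """Share of gold-standard items the labeler got right (only items they labeled are scored)."""
    scored = [item for item in gold if item in labels]
    if not scored:
        return 0.0
    return sum(labels[item] == gold[item] for item in scored) / len(scored)


def sample_for_review(item_ids: list[str], rate: float, seed: int = 0) -> list[str]:
    """Random subset of completed items for a human reviewer to spot-check."""
    k = max(1, round(len(item_ids) * rate))
    return random.Random(seed).sample(item_ids, k)


print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
print(consensus_label(["car", "car", "truck"]))  # majority "car", chosen by 2 of 3
```

An IoU of 1.0 means two boxes match exactly and 0.0 means they don't overlap at all. The cutoff you accept is a project decision; 0.5 is a commonly used starting point for object detection, while tighter tasks may need a higher bar.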

Scale Your Data Labeling Operations Effectively

You want an elastic capacity to scale your workforce up or down according to your project and business needs without compromising data quality.

Here are five steps to scale data labeling:

- Design for workforce capacity. Work with a data labeling service that can access a large pool of qualified workers. Consider working with the same team of labelers over time, as their knowledge of your business rules, context, and edge cases will improve data quality.

- Look for elasticity to scale labeling up or down. You may need to label data in real time or during seasonal spikes in purchase volume. Choose a workforce that can adjust scale based on your specific needs.

- Choose smart tooling. Use a data annotation tool flexible enough to grow with you as you mature processes, iterate on the labels that drive the best model performance, and move into production.

- Measure worker productivity. Productivity is influenced by the volume of completed work, quality of the work, and worker engagement. Consider using a workforce management platform to help you manage task matching, supply-demand management, performance feedback, and security.

- Streamline communication between your project and data labeling teams. Use a closed feedback loop for communication with your labeling team so you can make impactful changes fast.
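To make "measure worker productivity" more concrete, the sketch below combines volume and quality into two per-labeler numbers: items completed per hour and the share of items accepted in review. It's a minimal Python example built on a hypothetical `TaskRecord` format with time spent and a review outcome; engagement doesn't reduce to a single field, so it's left out here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class TaskRecord:
    """One completed labeling task (hypothetical record format)."""
    labeler: str
    minutes_spent: float
    accepted_in_review: bool


def productivity_report(records: list[TaskRecord]) -> dict[str, dict[str, float]]:
    """Per-labeler throughput (items per hour) and review acceptance rate."""
    totals = defaultdict(lambda: {"items": 0, "minutes": 0.0, "accepted": 0})
    for record in records:
        t = totals[record.labeler]
        t["items"] += 1
        t["minutes"] += record.minutes_spent
        t["accepted"] += record.accepted_in_review  # True counts as 1

    report = {}
    for labeler, t in totals.items():
        hours = t["minutes"] / 60
        report[labeler] = {
            "items_per_hour": t["items"] / hours if hours else 0.0,
            "acceptance_rate": t["accepted"] / t["items"],
        }
    return report


records = [
    TaskRecord("ana", 10, True),
    TaskRecord("ana", 10, True),
    TaskRecord("ana", 10, False),
    TaskRecord("raj", 15, True),
]
# ana: 6.0 items/hour, 2 of 3 accepted; raj: 4.0 items/hour, all accepted
print(productivity_report(records))
```

Looking at both numbers together matters: a labeler with high throughput but a falling acceptance rate is a signal for feedback, not a success story.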

Streamline Everything with Integrative Processes

Integrative data labeling is a strategic approach to data labeling that integrates people, process, and technology to deliver high-quality data at scale. It's essential for designing a data labeling pipeline for quality, throughput, and scale.

Four essential elements of integrative data labeling:

- Apply technology to assign tasks to people and machines based on their strengths.

- Use a managed workforce to deliver higher skill levels, engagement, accountability, and quality.

- Measure quality to ensure that the data meets your needs.

- Design for flexibility to adapt to changes in your data and model needs.

Benefits of integrative data labeling:

- Higher quality data

- Increased throughput

- Improved scalability

- Reduced costs

- Faster time to market

Protect Your Data

When outsourcing data labeling, there are security risks, such as workers accessing data from insecure networks, downloading or saving data, and working in non-compliant environments.

To ensure data security, choose a data labeling service with the right training, policies, and processes in place, plus certifications showing that those processes have been reviewed. The service should also treat your data with the same care you do and have a documented data security policy in four areas: people and workforce, technology and network, facilities and workspace, and data ownership.

Security concerns shouldn't stop you from using a data labeling service, but it's important to choose one with a strong security track record.

Choose the Right Tools for Your Needs

Data labeling tools are essential for machine learning projects. You can either build or buy a data labeling tool, but buying is generally the better choice because it's less costly and includes user support.

When choosing a data labeling tool, consider the following:

- Your data type and use case. Tools vary in data enrichment features, QA capabilities, supported file types, data security certifications, storage options, and more. Features for labeling may include bounding boxes, polygons, masks, 2-D and 3-D points, tags, attributes, and more.

- Your organization's size and growth stage. A commercially viable tool is a good choice if you are getting started. If you are scaling the process, look for a data annotation provider that can scale training data as needed with AI-powered, automated labeling. If you are sustaining scale, seek out a data labeling company that uses active management to continuously monitor the quality of labeled data.

- Your data quality requirements. Some tools have built-in QA features, and a few managed workforce providers can provide trained workers with extensive experience with labeling tasks.

Don't decouple your data labelers from the data labeling platform. Keeping the workforce and platform closely tied together can improve the quality of labeled data.

Beware of contract commitment implications. Ensure the vendor offers annual contracts with a relevant consumption model, workforce management, and contractual SLAs. Look for contracts that are flexible enough to let you scale your data labeling up or down as needed.

Are you ready to learn how CloudFactory can help you reach your goals?

Frequently Asked Questions

Which companies offer the best labeling service for computer vision?

When evaluating a vendor offering data labeling services for computer vision, look for a provider with a strong track record in accuracy, scalability, domain expertise, and the ability to customize labeling workflows to meet your specific requirements. CloudFactory integrates people, processes, and technology to build an efficient lifecycle for training data by putting humans in the loop. CloudFactory data annotators are vetted, managed teams pre-trained on tools and use cases specific to your computer vision needs.

How should I label image data for machine learning?

Labeling images to train machine learning models is a critical step in supervised learning. You can use different approaches, but the people who label the data must be extremely attentive and knowledgeable about your specific business rules, because each mistake or inaccuracy will negatively affect dataset quality and the overall performance of your predictive model.

To achieve high accuracy without distracting internal team members from more important tasks, you should leverage a trusted partner who can provide vetted and experienced data labelers trained on your specific business requirements and invested in your desired outcomes.

How can we get training data?

The training dataset you use for your machine learning model will directly impact the quality of your predictive model, so it's extremely important that you use a dataset applicable to your AI initiative and labeled with your specific business requirements in mind.

While you could leverage one of the many open-source datasets available, your results will be biased toward the requirements used to label that data and the quality of the people labeling it. To get the best results, you should gather a dataset aligned with your business needs and work with a trusted partner who can provide a vetted and scalable team trained on your specific business requirements.

What are the techniques for labeling data in machine learning?

Data labeling requires a collection of data points such as images, video, text, or audio and a qualified team of people to tag or label each of the input points with meaningful information that will be used to train a machine learning model.

There are different labeling techniques, such as bounding boxes, semantic segmentation, redaction, polygons, keypoints, cuboids, and more, and the right one depends on the specific business application. Engaging with an experienced data labeling partner can ensure your dataset is labeled properly based on your requirements and industry best practices.

What is the best image labeling tool for object detection?

Many tools can support excellent object detection. Quality object detection depends on optimal model performance within a well-designed software/hardware system. High-quality models need high-quality training data, which requires people (workforce), process (the annotation guidelines and workflow), and technology (labeling tool). Therefore, the image labeling tool is merely a means to an end.

How do you address complex object boundaries in segmentation labeling and ensure quality?

The partner you choose to help you with your data labeling should employ experienced labelers and comprehensive guidelines, utilize iterative review and feedback mechanisms, leverage advanced technology, and incorporate data validation processes to handle complex object boundaries effectively and ensure high-quality results in segmentation labeling tasks.
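One concrete way to run iterative review on segmentation is to have two or more labelers trace the same object and compare their masks pixel by pixel. The minimal Python sketch below assumes binary masks stored as nested lists (1 = object pixel) and uses a hypothetical agreement threshold of 0.8; pairs that fall below it would go to a senior reviewer, whose decision can then feed back into the labeling guidelines. The function names are illustrative, not from any specific labeling tool.

```python
from itertools import combinations

# Binary mask: 1 = pixel belongs to the object, 0 = background (assumed format).
Mask = list[list[int]]


def mask_iou(a: Mask, b: Mask) -> float:
    """Pixel-level intersection over union of two same-sized binary masks."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += bool(pa) and bool(pb)
            union += bool(pa) or bool(pb)
    # Two empty masks agree that the object is absent.
    return inter / union if union else 1.0


def pairs_needing_review(
    masks: dict[str, Mask], threshold: float = 0.8
) -> list[tuple[str, str, float]]:
    """Annotator pairs whose masks for the same object agree less than `threshold`.

    The 0.8 default is a hypothetical value; tune it per project.
    """
    flagged = []
    for first, second in combinations(sorted(masks), 2):
        score = mask_iou(masks[first], masks[second])
        if score < threshold:
            flagged.append((first, second, score))
    return flagged


ann_1 = [[0, 1, 1],
         [0, 1, 1],
         [0, 0, 0]]
ann_2 = [[0, 1, 1],
         [0, 1, 0],
         [0, 0, 0]]
print(pairs_needing_review({"ann_1": ann_1, "ann_2": ann_2}))  # IoU 3/4 = 0.75, so flagged
```

Disagreement on complex objects tends to show up as small boundary differences, so a pixel-level score like this catches cases a quick visual check might miss.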

Where do geospatial systems get their training data?

Geospatial systems acquire training data from a wide array of sources, encompassing satellite and aerial imagery, open data initiatives, remote sensing instruments, crowdsourced contributions, commercial data providers, historical records, IoT sensor networks, simulated data, field surveys, and user-generated content, with the selection of data sources guided by the application's specific needs and accuracy prerequisites. Preprocessing and data quality assurance measures are often applied to ensure the reliability and consistency of the training data for geospatial models.

How do you easily label training data images?

The ingredients for high-quality training data are people (workforce), process (annotation guidelines and workflow, quality control), and technology (input data, labeling tool). An easy way to get images labeled is to partner with a managed workforce provider that can provide a vetted team trained to work within your annotation parameters.

Who are the best managed data labeling providers?

To achieve the best outcomes for your data annotation needs, work with a partner that provides a vetted and managed workforce and an integrated, full-stack annotation solution for a seamless experience across people, process, and technology.

CloudFactory provides human-in-the-loop AI solutions, helping AI and machine learning teams accelerate their development cycles to deploy models more quickly and sustain them more efficiently.